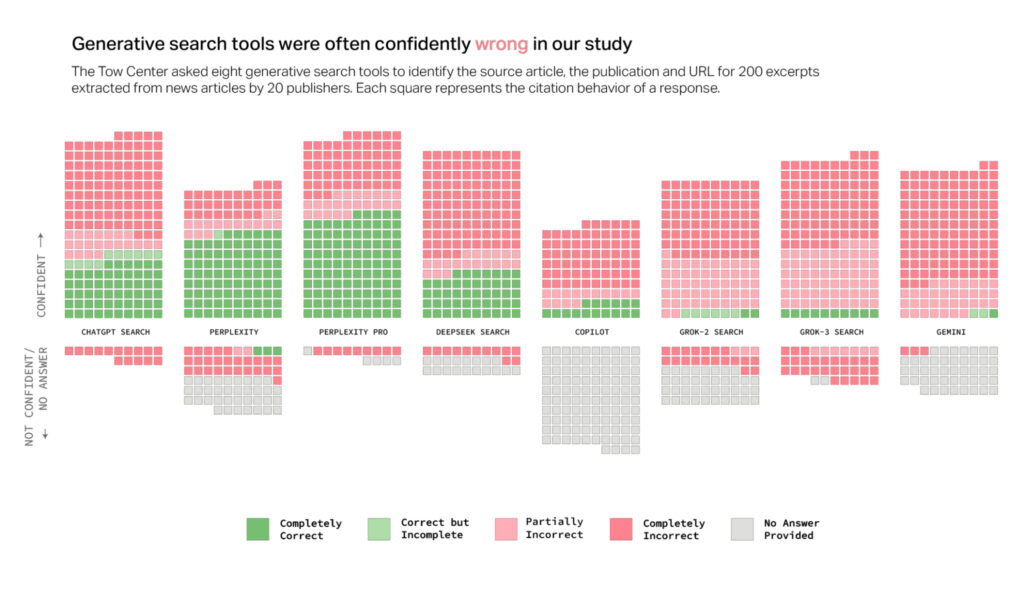

Artificial intelligence (AI) search engines are changing how we access information, but a recent study raises serious concerns about their reliability. According to findings published by the Columbia Journalism Review (CJR), the AI-driven search tools it tested gave incorrect answers to more than 60% of queries. Beyond inaccuracies, the study highlights problems with source attribution and URL fabrication, and it leaves publishers facing an ethical dilemma. This article covers the study’s key findings, the challenges faced by publishers, and the broader implications for trust in AI-powered search tools.

The Problem with AI Search Engine Citations

One of the primary issues uncovered in the study is the misuse of citations. Even when AI search engines cite sources, they often redirect users to syndicated versions of content on platforms like Yahoo News, bypassing original publisher sites. This practice occurs despite formal licensing agreements between publishers and AI companies, raising questions about the fairness and transparency of AI-generated search results.

For example, publishers who license their content to AI platforms expect proper attribution and traffic redirection to their websites. Instead, users are frequently directed to third-party platforms, depriving publishers of valuable clicks and ad revenue.

URL Fabrication: A Growing Concern

The study also identified URL fabrication as a significant issue. Over half of the citations from Google’s Gemini and Grok 3 led users to fabricated or broken URLs, resulting in error pages. In one shocking example, 154 out of 200 citations tested from Grok 3 resulted in broken links.

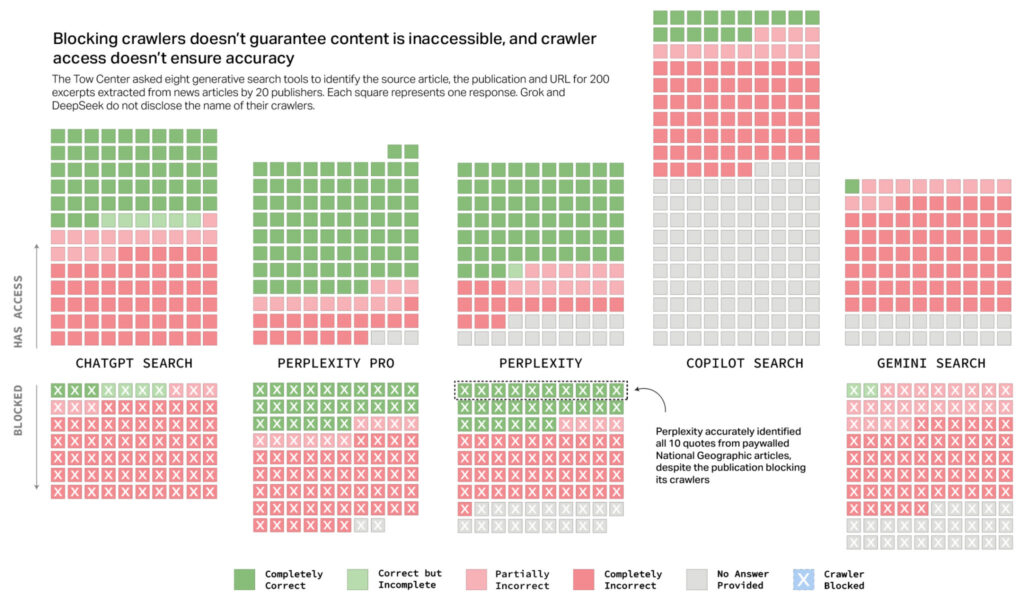

A graph from CJR showing that blocking crawlers doesn’t mean that AI search providers honor the request

Broken and fabricated links not only frustrate users but also undermine the credibility of AI search engines. When a cited URL leads nowhere, users cannot verify the information provided, which erodes trust in these tools.
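To make the Grok 3 figure concrete, the share of broken citations can be computed from the HTTP status each cited URL returns. The snippet below is a minimal sketch, not the study's method: the helper names `is_broken` and `broken_share` are hypothetical, and treating any 4xx or 5xx status (or no response at all) as "broken" is an assumption made for illustration.

```python
from typing import Iterable, Optional


def is_broken(status: Optional[int]) -> bool:
    """Assumption: no response (None) or any 4xx/5xx status counts as broken."""
    return status is None or status >= 400


def broken_share(statuses: Iterable[Optional[int]]) -> float:
    """Return the fraction of citations whose URL is considered broken."""
    results = [is_broken(s) for s in statuses]
    if not results:
        raise ValueError("no citations to evaluate")
    return sum(results) / len(results)


# Hypothetical sample mirroring the reported count: 154 broken out of 200.
sample = [404] * 154 + [200] * 46
print(f"{broken_share(sample):.0%}")  # 77%
```

On the numbers reported for Grok 3, 154 broken links out of 200 citations works out to 77%.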

Publishers’ Ethical Dilemma

The inaccuracies and citation practices of AI search engines have placed publishers in a challenging position.

To Block or Not to Block Crawlers?

Publishers face a tough choice: block AI crawlers to prevent misuse of their content or allow crawling with the risk of losing traffic and attribution. Unfortunately, the study found that blocking crawlers doesn’t guarantee compliance. AI search providers often ignore these requests, leaving publishers with limited control over how their content is used.
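Blocking requests of this kind are typically expressed in a site's robots.txt file. The sketch below uses Python's standard `urllib.robotparser` to show how a well-behaved crawler would interpret such a file. The robots.txt content, the `example.com` URL, and the crawler names are assumptions chosen for illustration, and `is_allowed` is a hypothetical helper. The file is advisory only: nothing in it technically prevents a crawler from fetching a page, which is consistent with the study's finding that blocking does not guarantee compliance.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt (an assumption, not taken from any real publisher):
# block one AI crawler by name and allow everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


article = "https://example.com/news/story"
print(is_allowed(ROBOTS_TXT, "GPTBot", article))        # False
print(is_allowed(ROBOTS_TXT, "SomeOtherBot", article))  # True
```

A check like this only tells a publisher what their file asks for. Whether a given crawler honors the request can only be confirmed from server logs or from tests like those in the study.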

Mark Howard, Chief Operating Officer at Time magazine, emphasized the need for transparency and control over how content appears in AI-generated searches. While he acknowledged the current shortcomings, Howard remains optimistic about future improvements, stating,

“Today is the worst that the product will ever be.”

User Skepticism and AI Search Reliability

The study also sparked a debate about user responsibility. Howard suggested that consumers should approach free AI tools with caution, remarking,

“If anybody as a consumer is right now believing that any of these free products are going to be 100 percent accurate, then shame on them.”

While this statement shifts some blame onto users, it also underscores the importance of educating the public about the limitations of AI-driven search engines.

AI Companies Respond to Findings

Both OpenAI and Microsoft responded to the CJR’s study, though neither directly addressed the specific issues raised.

OpenAI highlighted its commitment to supporting publishers by driving traffic through summaries, quotes, clear links, and proper attribution. Microsoft, on the other hand, stated that it adheres to the Robots Exclusion Protocol (the robots.txt standard) and respects publisher directives.

These responses, while reassuring, fall short of addressing the root problems identified in the study.

Building on Previous Research

The latest report builds on earlier findings from the Tow Center in November 2024, which exposed similar accuracy issues in how ChatGPT handles news-related content. Together, these studies paint a worrying picture of the current state of AI search engines and their impact on the media landscape.

For a deeper dive into the CJR’s findings, visit their website.

The Future of AI Search Engines

While the study highlights significant shortcomings, it also points to opportunities for improvement.

Investments in Accuracy and Transparency

AI companies are investing heavily in improving their tools’ accuracy and reliability. As Howard noted, the current state of AI search engines is far from their full potential. Future iterations could feature better citation practices, more accurate answers, and enhanced respect for publisher rights.

Strengthening Publisher-AI Partnerships

Collaboration between AI companies and publishers is essential to address ethical concerns. By working together, both parties can develop solutions that prioritize accuracy, transparency, and fair attribution.

Conclusion: Navigating the AI Search Landscape

The CJR’s study serves as a wake-up call for AI search engines, publishers, and users alike. While these tools offer unprecedented convenience, their current shortcomings highlight the need for greater accountability and improvement.

As AI continues to evolve, stakeholders must address these challenges to build a more reliable and ethical digital information ecosystem. For now, users are advised to approach AI search engines with a healthy dose of skepticism and verify critical information through trusted sources.

By raising awareness of these issues, the study paves the way for meaningful change in the world of AI-powered search.