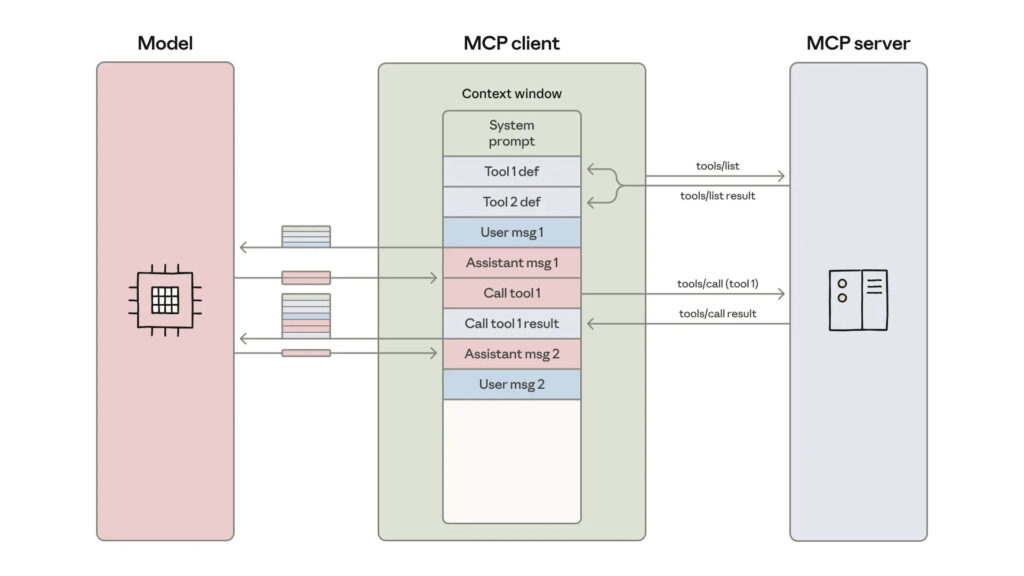

The current excitement around AI agents often glosses over a critical, unglamorous barrier: scalability. While connecting models to external tools (for example, through Anthropic’s own Model Context Protocol, or MCP) is the first step, it quickly creates a “token trap.” Anthropic’s recent engineering post, “Code execution with MCP,” is a refreshingly pragmatic analysis of this bottleneck and, more importantly, a detailed blueprint for a far more robust agent architecture.

This isn’t just an “update”; it’s a fundamental shift in philosophy. The article argues that to build truly efficient and powerful agents, we must evolve them from being simple “tool users” to sophisticated “code orchestrators.”

The “Token Trap” of Naive Agent Design

Anthropic identifies two core problems with the standard “direct tool calling” method, where every tool definition is loaded into the agent’s context and every tool result flows back through it:

- The Definition Overload: As an agent connects to hundreds or thousands of tools, its context window is consumed by simply reading the manuals. Before the agent even sees a user’s prompt, it may have to process tens of thousands of tokens just defining its available functions. This is slow, expensive, and scales poorly.

- The “Dumb Pipe” Problem: The agent’s context becomes a high-traffic highway for intermediate data. The article’s example is apt: the agent fetches a large transcript from Google Drive, the entire transcript is loaded into its context, and the model then has to write that entire transcript back out as input to a Salesforce update. The model isn’t using the data; it’s acting as a clumsy and expensive copy-paste machine.
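To make the two traps concrete, here is a back-of-the-envelope model in TypeScript. Every number in it is a hypothetical assumption chosen for illustration (200 tools at about 150 tokens per definition, a 20,000-token transcript), not a figure from Anthropic’s post, and the names `Scenario`, `directToolCalling`, and `codeExecution` are invented for this sketch. What matters is how each term scales.

```typescript
// Back-of-the-envelope context accounting for the two "token traps".
// Every number below is a hypothetical assumption for illustration.

interface Scenario {
  toolCount: number;           // tools exposed to the agent
  tokensPerDefinition: number; // average size of one tool definition
  toolsActuallyUsed: number;   // tools the task really needs
  payloadTokens: number;       // size of the data moved between services
}

interface ContextCost {
  definitions: number;
  payload: number;
  total: number;
}

// Direct tool calling: every definition is loaded up front, and the payload
// crosses the context twice (once as a tool result, once more when the model
// writes it back out as the argument to the next call).
function directToolCalling(s: Scenario): ContextCost {
  const definitions = s.toolCount * s.tokensPerDefinition;
  const payload = 2 * s.payloadTokens;
  return { definitions, payload, total: definitions + payload };
}

// Code execution: only the definitions the script needs are read, and the
// payload stays in a sandbox variable, so it never enters the context.
function codeExecution(s: Scenario): ContextCost {
  const definitions = s.toolsActuallyUsed * s.tokensPerDefinition;
  const payload = 0;
  return { definitions, payload, total: definitions + payload };
}

const scenario: Scenario = {
  toolCount: 200,
  tokensPerDefinition: 150,
  toolsActuallyUsed: 2,
  payloadTokens: 20000,
};

console.log("direct tool calling:", directToolCalling(scenario));
console.log("code execution:", codeExecution(scenario));
```

Under these assumptions, direct tool calling spends 30,000 tokens on definitions and 40,000 on the round-tripped transcript, while the code-execution path spends 300. The model deliberately ignores the tokens the agent spends writing its script, which are small but not zero.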

The Solution: From Tool User to Code Orchestrator

Anthropic’s solution is both elegant and obvious in hindsight: leverage the LLM’s greatest strength (coding) to mitigate its greatest weakness (limited context).

Instead of loading tool definitions into the agent’s context, give it a sandboxed code-execution environment and expose the tools as code libraries it can import. When a task is requested, the agent doesn’t “call a tool”; it writes a script to execute the task.
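A minimal sketch of what “tools as code libraries” can look like: each tool becomes a typed async function that forwards to a generic tool-calling transport. The `callTool` function, the tool name `google_drive.get_document`, and the in-memory fake server are hypothetical stand-ins for a real MCP client, not Anthropic’s or MCP’s actual API.

```typescript
// Hypothetical sketch: exposing an MCP-style tool as a plain typed function.
// `callTool` stands in for whatever transport a real MCP client provides.

type ToolHandler = (input: unknown) => Promise<unknown>;

// In-memory fake "server" so the sketch runs without any network access.
const fakeTools: Record<string, ToolHandler> = {
  "google_drive.get_document": async (input) => {
    const { documentId } = input as { documentId: string };
    return { content: `Transcript for document ${documentId}` };
  },
};

// Generic transport: look up a tool by name and invoke it.
async function callTool<T>(name: string, input: unknown): Promise<T> {
  const handler = fakeTools[name];
  if (!handler) {
    throw new Error(`Unknown tool: ${name}`);
  }
  return (await handler(input)) as T;
}

// What might be exported from a file such as ./servers/google-drive/getDocument.ts:
// a typed wrapper the agent can import and call from its own scripts.
interface GetDocumentInput {
  documentId: string;
}

interface GetDocumentResponse {
  content: string;
}

async function getDocument(input: GetDocumentInput): Promise<GetDocumentResponse> {
  return callTool<GetDocumentResponse>("google_drive.get_document", input);
}

// Usage: the agent's script calls the wrapper like any other library function.
getDocument({ documentId: "abc123" }).then((doc) => console.log(doc.content));
```

Because each wrapper is an ordinary function in its own module, an agent script can import only the modules it needs instead of having every definition preloaded.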

An agent needing to move a transcript would write code like this:

```typescript
// The agent writes this code, which runs in a sandbox
import * as gdrive from './servers/google-drive';
import * as salesforce from './servers/salesforce';

// 1. Data is fetched into a variable in the sandbox,
// NOT into the model's context.
const transcript = (await gdrive.getDocument({ documentId: 'abc123' })).content;

// 2. The data is passed directly from one service to another.
await salesforce.updateRecord({
  objectType: 'SalesMeeting',
  recordId: '00Q5f000001abcXYZ',
  data: { Notes: transcript }
});
```

This architectural shift has profound, compounding benefits that the article details clearly.

The Compounding Benefits of a Code-First Architecture

By treating the agent as a programmer, we unlock several new capabilities simultaneously:

- Intelligent Data Handling: Both token traps are addressed at once. The agent uses “progressive disclosure”: it reads only the definitions of the specific tools its script needs. More importantly, it can process data in the sandbox. It can filter a 10,000-row spreadsheet in code and return only the 5 relevant rows, saving an enormous amount of context.

- Enabling True Autonomy: This model allows for complex, multi-step tasks. The agent can write `for` loops, `while` loops, and sophisticated error handling, logic that is clunky or impossible to express with simple tool chaining. It can also persist state by writing intermediate results to a file (`fs.writeFile`), allowing it to resume complex tasks.

- Emergent “Skills”: The most forward-looking concept is the agent’s ability to save its own generated code as reusable functions. An agent that figures out how to “save a sheet as a CSV” can store that script as a new “skill” in its own file system, gradually building a higher-level library of its own capabilities.

- Privacy, the Enterprise Game-Changer: In this model, the `transcript` data never enters the model’s context window. It exists only as a variable within the secure sandbox. This is a major win for privacy and data security: PII and sensitive corporate data can be processed without ever being “seen” by the LLM, reducing the risk of accidental logging or leakage.
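The filtering idea from the first bullet can be sketched in a few lines. A locally generated 10,000-row sheet stands in for a real spreadsheet tool call, the filter runs inside the sandbox, and only a one-line summary is printed. The row shape, the `fetchSheet` and `summarizeOverdue` helpers, and the “overdue” criterion are hypothetical; the sketch also assumes, as in the design described above, that only what the script prints or returns is passed back to the model.

```typescript
// Hypothetical sketch: filter a large dataset in the sandbox and hand back
// only a compact summary. Row shape and criteria are illustrative.

interface Row {
  id: number;
  customer: string;
  status: "paid" | "pending" | "overdue";
}

// Stand-in for a tool call that returns a large sheet.
function fetchSheet(rowCount: number): Row[] {
  const rows: Row[] = [];
  for (let i = 0; i < rowCount; i++) {
    rows.push({
      id: i,
      customer: `customer-${i}`,
      // Every 2,000th row is overdue, so the result set stays small.
      status: i % 2000 === 0 ? "overdue" : i % 2 === 0 ? "paid" : "pending",
    });
  }
  return rows;
}

// Runs entirely in the sandbox; the full `rows` array never leaves it.
function summarizeOverdue(rows: Row[]): { total: number; overdue: Row[] } {
  const overdue = rows.filter((r) => r.status === "overdue");
  return { total: rows.length, overdue };
}

const summary = summarizeOverdue(fetchSheet(10000));

// Only this short line would be surfaced to the model.
console.log(`${summary.overdue.length} of ${summary.total} rows are overdue`);
```

The 10,000 rows exist only as a local array; what reaches the model is a single sentence plus, if the agent chooses to return them, the five matching rows.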

The Inescapable Trade-Off: Power vs. Peril

Anthropic is rightly transparent about the cost of this power: security. Running agent-generated code is inherently dangerous. This architecture is not a simple software update; it is an infrastructure challenge.

It requires a robust, secure, and resource-limited sandboxing environment to prevent the agent from performing malicious actions. This shifts much of the engineering burden from prompt engineering to security and infrastructure management.
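The “resource-limited” requirement can be illustrated with one small piece: a wall-clock budget on agent-generated work. The sketch below races a task against a timer. It assumes the code is already running inside real isolation (a container, VM, or similar); an in-process timeout like this is not a security boundary, cannot interrupt a busy synchronous loop, and does not cancel the losing task. `withTimeout` and the simulated tasks are hypothetical names.

```typescript
// Hypothetical sketch: enforce a wall-clock budget on an async task.
// This is NOT a sandbox; real isolation must come from the environment.

async function withTimeout<T>(task: Promise<T>, ms: number): Promise<T> {
  let timer: ReturnType<typeof setTimeout> | undefined;
  const deadline = new Promise<never>((_, reject) => {
    timer = setTimeout(() => reject(new Error(`Task exceeded ${ms} ms budget`)), ms);
  });
  try {
    // Whichever promise settles first wins; the slower task keeps running
    // in the background unless it is cancelled separately.
    return await Promise.race([task, deadline]);
  } finally {
    // Clear the timer so it does not keep the process alive.
    clearTimeout(timer);
  }
}

// Simulated agent tasks.
const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));
const quickTask = sleep(10).then(() => "done");
const slowTask = sleep(500).then(() => "too late");

withTimeout(quickTask, 100).then((result) => console.log("finished:", result));
withTimeout(slowTask, 50).catch((err: Error) => console.log("stopped:", err.message));
```

In a real deployment the same budget would more likely be enforced by the sandbox runtime itself (process or container limits), with this kind of guard serving only as a convenience layer.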

Final Assessment

Anthropic’s article is a critical read for anyone in the AI space. It clearly defines the ceiling of our current “tool-calling” paradigm and provides a practical, code-first blueprint for the next generation of agents.

This approach fundamentally changes the nature of the agent. It’s no longer just a “brain” connected to “hands.” It’s a “brain” that can build its own hands—writing, saving, and composing new skills as needed. This is the architectural leap required to move from clever chatbots to truly autonomous systems.