Hey everyone! As an AI developer, I spend most of my time figuring out two things: how to build smarter models, and how to feed them better data. The truth is, in 2024, the power of our Large Language Models (LLMs) is often limited by the quality of the data we can give them. For anyone building a Retrieval-Augmented Generation (RAG) system, an AI agent, or just trying to fine-tune a model on fresh information, you’ve hit the same bottleneck I have: getting clean, relevant data off the live web.

The web is a mess. It’s a chaotic soup of JavaScript, dynamic content, ads, cookie banners, navbars, and footers. For years, our toolbox for this job has been some combination of requests, BeautifulSoup, Selenium, or Scrapy. These tools are great, but they were built for a different era. BeautifulSoup can’t handle JavaScript-rendered content. Scrapy is a powerful, complex framework, but it’s designed to extract structured data points (like prices) into a JSON or CSV file.

But what if your goal isn’t to get a list of prices, but to ingest the knowledge from a blog post? This is the new “RAG paradigm.” We don’t just need data; we need clean, semantic text.

This is where Crawl4AI comes in. It’s an open-source Python library built from the ground up for this new, AI-driven world. Its mission, as stated by its creators, is to “turn the web into clean, LLM ready Markdown for RAG, agents, and data pipelines”.

Today, I’m going to walk you through this powerful tool. This is the Crawl4AI tutorial I wish I had when I started. We’ll cover what it is, why it’s different, how to install it, and how to use it with practical, copy-paste-ready code. We’ll go from a simple crawl to handling dynamic content and even integrating it directly into a LangChain RAG pipeline.

What is Crawl4AI? Your “LLM-Friendly” Crawler

Crawl4AI is not just another web scraper. It’s a complete knowledge ingestion library that:

- Is Built for the Modern Web: It’s asynchronous from the ground up and uses a managed browser pool (under the hood, it uses Playwright) to handle JavaScript-rendered pages flawlessly.

- Generates “LLM-Ready” Output: This is its killer feature. Instead of just giving you raw HTML, its default output is clean, smart Markdown. It uses heuristic-based filtering to strip away the “noise”—ads, navbars, footers, etc.—leaving you with just the core, semantic content. It even preserves headings, tables, and code blocks.

- Has Dual Extraction Strategies: It understands that not all tasks are the same. It gives you the choice between:

- Traditional (Fast/Cheap) Extraction: Using CSS or XPath selectors for high-speed, precise extraction of structured data.

- AI-Powered (Flexible) Extraction: Using an LLMExtractionStrategy to pull data from unstructured text using natural language prompts.

- Gives You Full Control: It’s not a black box. You get full control over proxies, cookies, sessions, and user scripts.
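To make the "strip the noise, keep the content" idea concrete, here is a deliberately tiny sketch using only the standard library. It is not how Crawl4AI works internally: the tag list, the `NoiseStrippingParser` class, and the `html_to_markdown` helper are all made up for illustration, and the real library's heuristics are far richer.

```python
from html.parser import HTMLParser

# Tags whose whole subtree is treated as page "noise" in this sketch (an assumption).
NOISE_TAGS = {"nav", "footer", "header", "aside", "script", "style"}
HEADING_PREFIX = {"h1": "# ", "h2": "## ", "h3": "### "}
BLOCK_TAGS = set(HEADING_PREFIX) | {"p"}


class NoiseStrippingParser(HTMLParser):
    """Collects headings and paragraphs, skipping anything inside noise tags."""

    def __init__(self):
        super().__init__()
        self.noise_depth = 0  # > 0 while inside a noise subtree
        self.prefix = None    # Markdown prefix of the block being read
        self.buffer = []      # text fragments of the current block
        self.blocks = []      # finished Markdown blocks

    def handle_starttag(self, tag, attrs):
        if tag in NOISE_TAGS:
            self.noise_depth += 1
        elif self.noise_depth == 0 and tag in BLOCK_TAGS:
            self.prefix = HEADING_PREFIX.get(tag, "")
            self.buffer = []

    def handle_endtag(self, tag):
        if tag in NOISE_TAGS:
            self.noise_depth = max(0, self.noise_depth - 1)
        elif self.noise_depth == 0 and self.prefix is not None and tag in BLOCK_TAGS:
            text = " ".join("".join(self.buffer).split())
            if text:
                self.blocks.append(self.prefix + text)
            self.prefix = None

    def handle_data(self, data):
        if self.noise_depth == 0 and self.prefix is not None:
            self.buffer.append(data)


def html_to_markdown(html: str) -> str:
    """Return headings and paragraphs as Markdown, with noise subtrees removed."""
    parser = NoiseStrippingParser()
    parser.feed(html)
    return "\n\n".join(parser.blocks)


SAMPLE = """
<html><body>
<nav><a href="/">Home</a> <a href="/about">About</a></nav>
<h1>Vector Databases</h1>
<p>They store <b>embeddings</b> for similarity search.</p>
<footer><p>Copyright notice</p></footer>
</body></html>
"""

print(html_to_markdown(SAMPLE))
```

The navbar and footer disappear, and the heading and paragraph survive as Markdown. That is the shape of output you want to hand to a text splitter.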

Crawl4AI vs. Traditional Tools: A Paradigm Shift

You might be thinking, “I already know BeautifulSoup and Scrapy. Why switch?” The answer lies in the paradigm shift from scraping-for-data to scraping-for-knowledge.

- BeautifulSoup (+Requests): This stack is great for simple, static HTML pages. But the moment you hit a site that loads content with JavaScript, you’re stuck. BeautifulSoup is a parser, not a browser. It can’t click buttons, scroll, or wait for content to appear. Crawl4AI handles all of this out of the box.

- Scrapy: Scrapy is a powerful, enterprise-grade framework. It’s excellent for large-scale, long-running crawls that extract structured data into databases. But it has a steep learning curve and is overkill for many AI tasks. Its primary “product” is a JSON/CSV file. Crawl4AI’s primary product is clean Markdown, which is exactly what you need to feed into a text-splitter and an embedding model for RAG.
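The "parser, not a browser" point is easy to see with a toy page. In the sketch below, which uses only the standard library and a hypothetical HTML string, the article text exists only inside a script that a browser would execute. A static parser never runs that script, so it finds no visible text at all.

```python
from html.parser import HTMLParser

# What the server sends: an empty container plus a script that would
# fill it in a real browser. (Hypothetical page, for illustration only.)
RAW_HTML = """
<html><body>
  <div id="articles"></div>
  <script>
    document.getElementById("articles").innerHTML = "<p>Breaking story</p>";
  </script>
</body></html>
"""


class VisibleTextParser(HTMLParser):
    """Collects text outside <script>/<style>, as a static scraper would."""

    def __init__(self):
        super().__init__()
        self.in_code = False
        self.texts = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self.in_code = True

    def handle_endtag(self, tag):
        if tag in ("script", "style"):
            self.in_code = False

    def handle_data(self, data):
        if not self.in_code and data.strip():
            self.texts.append(data.strip())


def static_visible_text(html: str) -> list:
    """Return the text a parse-only tool can see; scripts are never executed."""
    parser = VisibleTextParser()
    parser.feed(html)
    return parser.texts


print(static_visible_text(RAW_HTML))
```

The result is an empty list. Getting at "Breaking story" requires something that actually runs the page's JavaScript, which is the job of a real browser.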

Here’s a quick comparison to help you visualize where Crawl4AI fits:

| Feature | BeautifulSoup (+Requests) | Scrapy | Crawl4AI |
|---|---|---|---|
| Primary Use Case | Parsing static HTML | Large-scale data-point extraction | AI/RAG knowledge ingestion |
| JavaScript Rendering | No | Yes (with Splash/plugins) | Yes (Built-in) |
| Async Support | No (unless with httpx) | Yes (Twisted) | Yes (asyncio, modern) |
| AI-Native Output | No (gives raw HTML) | No (gives JSON/CSV) | Yes (Clean Markdown) |
| Learning Curve | Easy | Hard | Easy-to-Medium |

Crawl4AI isn’t here to replace Scrapy for every industrial use case. It’s here to solve a new problem: efficiently bridging the gap between the messy, dynamic web and the clean, semantic needs of LLMs.

Installation Guide: From Zero to Crawling in 5 Minutes

Let’s get this installed. Crawl4AI relies on a browser (like Chromium) being managed by Playwright, so the installation is a simple three-step process.

Prerequisites: You’ll need Python 3.10 or newer. Older versions like 3.8 or 3.9 may not work with the latest dependencies.

Step 1: Install the Package

First, install the main library using pip:

```bash
pip install -U crawl4ai
```

If you want to live on the edge and try the latest pre-release features, you can use the --pre flag.

Step 2: Critical Post-Installation Setup (Do Not Skip!)

This is the most important step. Crawl4AI needs to download and set up the browser binaries it controls. The library comes with a handy CLI command to do this for you:

```bash
crawl4ai-setup
```

This command will install the necessary Playwright dependencies, including the Chromium browser.

Step 3: Verify Your Installation

To make sure everything is working, run the built-in “doctor” command:

```bash
crawl4ai-doctor
```

This will check that Python, the package, and the browser binaries are all correctly installed and can communicate with each other.

Step 4: Manual Setup (If Things Go Wrong)

If crawl4ai-setup fails for any reason (like network issues or permissions), you can try to install the browser manually:

```bash
python -m playwright install --with-deps chromium
```

This is the underlying command that the setup tool runs for you.
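If you want a quick sanity check from inside Python before reaching for the CLI tools, a small preflight script can help. This is a rough sketch of my own, not part of Crawl4AI: the `preflight` helper is a hypothetical name. It only checks the interpreter version, whether the packages are importable, and whether the `crawl4ai-doctor` command is on your PATH. It does not verify the browser binaries.

```python
import importlib.util
import shutil
import sys


def preflight(min_python=(3, 10)):
    """Return simple environment checks; nothing is installed or modified."""
    return {
        "python_ok": sys.version_info >= min_python,
        "crawl4ai_installed": importlib.util.find_spec("crawl4ai") is not None,
        "playwright_installed": importlib.util.find_spec("playwright") is not None,
        "doctor_on_path": shutil.which("crawl4ai-doctor") is not None,
    }


for name, ok in preflight().items():
    print(f"{name}: {'yes' if ok else 'no'}")
```

If any line prints "no", go back to the matching step above before writing crawl code.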

Optional Dependencies: Supercharging Your Install

The base install is minimal. If you need more features, you can install them as extras. The most common one you might want is support for synchronous crawling using Selenium:

```bash
pip install crawl4ai[sync]
```

If you want all features, including those for PyTorch and transformers, you can go all-in:

```bash
pip install crawl4ai[all]
```

Practical Usage: Your First Crawls with Crawl4AI

Time for the fun part. Let’s write some code.

Example 1: The “Hello, World!” – Basic Asynchronous Crawl

This first example will show you the core async workflow and the “magic” Markdown output.

```python
import asyncio

from crawl4ai import AsyncWebCrawler


async def main():
    print("Starting our first crawl...")

    # AsyncWebCrawler manages the browser pool.
    # The 'async with' block handles startup and shutdown.
    async with AsyncWebCrawler() as crawler:
        # arun() is the core command to crawl a single URL
        result = await crawler.arun(
            url="https://www.nbcnews.com/business",
        )

        # ALWAYS check if the crawl was successful
        if result.success:
            print(f"Crawl successful for: {result.url}")
            print(f"Page title: {result.metadata.get('title')}")

            # This is the "magic" - clean, AI-ready Markdown!
            print("\n--- CLEANED MARKDOWN (Snippet) ---")
            print(result.markdown[:1000] + "...")
        else:
            print(f"Crawl failed: {result.error_message}")


if __name__ == "__main__":
    # This is the standard way to run an async main function
    asyncio.run(main())
```

Code Breakdown:

- async def main() / asyncio.run(main()): Crawl4AI is built on Python’s asyncio. This is the standard boilerplate for running an async program.
- async with AsyncWebCrawler() as crawler: This is a context manager that gracefully starts and stops the headless browser pool.
- result = await crawler.arun(…): This is the main function you’ll use. It crawls the URL and returns a CrawlResult object.
- if result.success: I can’t stress this enough. Web scraping is unreliable. Always check this boolean flag before trying to use the data.
- result.markdown: This is the prize. It’s not the raw HTML; it’s the cleaned semantic content of the page, ready for your LLM.

The CrawlResult Object: What’s Inside? The result object is packed with useful information:

- result.markdown: The clean Markdown text.
- result.links: A dictionary of all links found, helpfully sorted into “internal” and “external” lists.
- result.media: A dictionary of extracted images, audio, and video files, grouped by type.
- result.metadata: A dictionary with the page title, word count, language, etc.
- result.error_message: If result.success is False, this field will tell you why.
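As a rough illustration of reading those fields defensively, here is a small helper. The `summarize_result` name is my own. The demo runs against a `SimpleNamespace` stand-in rather than a real crawl, and the stand-in's contents are invented for the example. It relies only on the attributes listed above.

```python
from types import SimpleNamespace


def summarize_result(result, preview_chars=80):
    """Condense a CrawlResult-like object into a small, log-friendly dict."""
    if not result.success:
        return {"url": result.url, "ok": False, "error": result.error_message}
    links = result.links or {}
    metadata = result.metadata or {}
    return {
        "url": result.url,
        "ok": True,
        "title": metadata.get("title"),
        "internal_links": len(links.get("internal", [])),
        "external_links": len(links.get("external", [])),
        "preview": str(result.markdown)[:preview_chars],
    }


# Stand-in object with made-up values; a real crawl would supply these fields.
fake_result = SimpleNamespace(
    success=True,
    url="https://example.com/post",
    markdown="# Hello\n\nSome clean text.",
    links={"internal": [{"href": "/about"}], "external": []},
    metadata={"title": "Hello"},
    error_message=None,
)

print(summarize_result(fake_result))
```

Because the helper branches on `success` first, it never touches `markdown` or `links` on a failed crawl.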

Example 2: Structured Data Extraction (Two Ways)

This is where Crawl4AI’s flexibility shines. It understands that sometimes you want clean text (for RAG), and other times you want structured JSON (for data analysis). It provides two ways to get that JSON, bridging the “old” and “new” scraping paradigms.

Method A: The “Fast & Precise” Way (No LLM) with JsonCssExtractionStrategy

Use Case: When you’re scraping a page with a clear, repeating HTML structure (like an e-commerce grid, a price list, or a “Top 10” article) and want fast, cheap, and reliable JSON output.

This strategy uses CSS selectors, just like you would with BeautifulSoup or Scrapy.

```python
import asyncio
import json

from crawl4ai import (
    AsyncWebCrawler,
    CrawlerRunConfig,
    CacheMode,
    JsonCssExtractionStrategy,  # Note this special import!
)


async def extract_with_css():
    print("Extracting structured data with CSS selectors...")

    # 1. Define your schema based on the website's CSS.
    # This schema targets a specific, real-world dynamic page.
    schema = {
        # 'baseSelector' is the CSS selector for each *repeating item*.
        "baseSelector": "section.charge-methodology.w-tab-content > div",
        # 'fields' are the data points *inside* each base element.
        "fields": [
            {"name": "section_title", "selector": "h3.heading-50", "type": "text"},
            {"name": "course_name", "selector": ".text-block-93", "type": "text"},
            {"name": "course_icon", "selector": ".image-92", "type": "attribute", "attribute": "src"},
        ],
    }

    # 2. Configure the crawler to *use* this extraction strategy.
    # We pass this config to the arun() method.
    crawler_config = CrawlerRunConfig(
        cache_mode=CacheMode.BYPASS,  # Don't cache for this demo
        extraction_strategy=JsonCssExtractionStrategy(schema=schema),
    )

    async with AsyncWebCrawler() as crawler:
        result = await crawler.arun(
            url="https://www.kidocode.com/degrees/technology",
            config=crawler_config,
        )

        if result.success:
            # The output is now in 'result.extracted_content' as a JSON string
            print("Crawl successful! Extracted JSON data:")
            data = json.loads(result.extracted_content)
            print(f"Successfully extracted {len(data)} items.")
            print(json.dumps(data, indent=2))  # Print every extracted item
        else:
            print(f"Crawl failed: {result.error_message}")


if __name__ == "__main__":
    asyncio.run(extract_with_css())
```

Code Breakdown:

- JsonCssExtractionStrategy: This is the key. We import this strategy class.
- schema: This dictionary is the core. baseSelector tells it what “item” to loop over (e.g., each div in a product list). fields defines the data to pull from within that item.
- CrawlerRunConfig: We create a configuration object and pass our extraction_strategy to it.
- result.extracted_content: When you use an extraction strategy, the structured output is in result.extracted_content as a JSON string, not in result.markdown.
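If the baseSelector/fields idea feels abstract, the loop it describes is simple: find every repeating item, then pull each field out of that item. The sketch below mimics that loop with the standard library's ElementTree on a tiny, well-formed snippet. It is an analogy rather than Crawl4AI's implementation. The `apply_schema` function is hypothetical, and the selectors use ElementTree's limited path syntax instead of CSS.

```python
import xml.etree.ElementTree as ET


def apply_schema(xml_text, schema):
    """Return one dict per element matched by baseSelector, filled from fields."""
    root = ET.fromstring(xml_text)
    records = []
    for item in root.findall(schema["baseSelector"]):
        record = {}
        for field in schema["fields"]:
            node = item.find(field["selector"])
            if node is None:
                continue  # missing field: leave it out rather than fail
            if field["type"] == "text":
                record[field["name"]] = "".join(node.itertext()).strip()
            elif field["type"] == "attribute":
                record[field["name"]] = node.get(field["attribute"])
        records.append(record)
    return records


# Made-up markup and schema, just to show the item-then-fields loop.
PAGE = """
<section>
  <div class="course"><h3>Python Basics</h3><img src="/icons/py.png"/></div>
  <div class="course"><h3>Web Scraping</h3><img src="/icons/web.png"/></div>
</section>
"""

SCHEMA = {
    "baseSelector": ".//div[@class='course']",
    "fields": [
        {"name": "course_name", "selector": "h3", "type": "text"},
        {"name": "course_icon", "selector": "img", "type": "attribute", "attribute": "src"},
    ],
}

print(apply_schema(PAGE, SCHEMA))
```

Each matched `div` becomes one record, which is why picking the right baseSelector matters more than any individual field selector.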

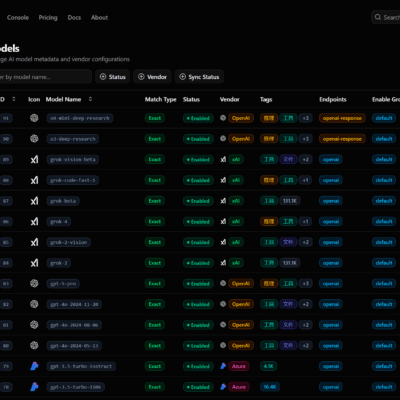

Method B: The “Flexible” Way (AI-Powered) with LLMExtractionStrategy

Use Case: When the data is unstructured, the HTML is a nightmare, or you want to extract concepts, not just text from a tag. For example: “Summarize the main argument of this article” or “Extract the names of all people mentioned.”

This strategy sends the cleaned content to an LLM (like GPT-4o) and asks it to fill out a schema for you.

```python
import os
import asyncio
import json

from crawl4ai import (
    AsyncWebCrawler,
    CrawlerRunConfig,
    CacheMode,
    LLMExtractionStrategy,  # The AI-powered strategy
    LLMConfig,
)
from pydantic import BaseModel, Field

# Set your API key
# export OPENAI_API_KEY='your_api_key_here'


# 1. Define the *desired output* schema using Pydantic
class OpenAIModelFee(BaseModel):
    model_name: str = Field(..., description="Name of the OpenAI model")
    input_fee: str = Field(..., description="Fee for input tokens (e.g., US$10.00 / 1M tokens)")
    output_fee: str = Field(..., description="Fee for output tokens (e.g., US$30.00 / 1M tokens)")


async def extract_with_llm():
    print("Extracting structured data with an LLM...")

    # 2. Define the extraction strategy
    run_config = CrawlerRunConfig(
        extraction_strategy=LLMExtractionStrategy(
            llm_config=LLMConfig(
                # You can use any provider LiteLLM supports!
                provider="openai/gpt-4o",
                api_token=os.getenv("OPENAI_API_KEY"),
            ),
            # Pydantic v2 spelling; on Pydantic v1 this would be .schema()
            schema=OpenAIModelFee.model_json_schema(),
            extraction_type="schema",
            instruction="From the crawled content, extract all mentioned model names along with their fees for input and output tokens.",
        ),
        cache_mode=CacheMode.BYPASS,
    )

    async with AsyncWebCrawler() as crawler:
        result = await crawler.arun(
            url="https://openai.com/api/pricing/",  # The target URL
            config=run_config,
        )

        if result.success:
            print("Crawl successful! Extracted JSON data from LLM:")
            print(result.extracted_content)
        else:
            print(f"Crawl failed: {result.error_message}")


if __name__ == "__main__":
    asyncio.run(extract_with_llm())
```

Code Breakdown:

- Pydantic BaseModel: This is a best practice for defining your desired output. You tell the LLM exactly what structure you want.
- LLMExtractionStrategy: This is the core. We configure it with:
  - llm_config: This tells it which model to use. It supports any provider that LiteLLM supports (e.g., ollama/qwen2, anthropic/claude-3-sonnet), not just OpenAI.
  - schema: We pass the Pydantic schema we defined.
  - instruction: A natural language prompt telling the LLM what to do.
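One practical caveat with LLM extraction: the model can return malformed JSON or items with missing fields, so it pays to check the output before trusting it. Here is a minimal, standard-library sketch of that check. The `parse_extracted` helper and its behavior are my own assumption about a reasonable post-processing step, not something the library provides. The field names mirror the OpenAIModelFee schema above, and the sample string is invented.

```python
import json

REQUIRED_FIELDS = ("model_name", "input_fee", "output_fee")


def parse_extracted(extracted_content, required=REQUIRED_FIELDS):
    """Split LLM output into (valid_items, rejected_items)."""
    try:
        data = json.loads(extracted_content)
    except (TypeError, json.JSONDecodeError):
        return [], []  # nothing usable: not JSON at all
    if isinstance(data, dict):
        data = [data]  # tolerate a single object instead of a list
    if not isinstance(data, list):
        return [], [data]
    valid, rejected = [], []
    for item in data:
        ok = isinstance(item, dict) and all(
            isinstance(item.get(key), str) and item[key].strip() for key in required
        )
        (valid if ok else rejected).append(item)
    return valid, rejected


# Invented sample standing in for result.extracted_content.
sample = json.dumps([
    {"model_name": "example-model", "input_fee": "US$1.00 / 1M tokens", "output_fee": "US$2.00 / 1M tokens"},
    {"model_name": "incomplete-model", "input_fee": ""},
])

valid, rejected = parse_extracted(sample)
print(f"{len(valid)} valid, {len(rejected)} rejected")
```

In a real pipeline you would pass `result.extracted_content` instead of `sample`, and log or retry the rejected items rather than silently dropping them.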

This dual-strategy approach is brilliant. It lets you make the right engineering trade-off: use fast/cheap CSS selectors when you can, and fall back to a powerful LLM when you must.

Example 3: Taming the Dynamic Web (JavaScript)

This is the problem that makes most beginner scrapers give up. How do you scrape content that only appears after you scroll, click a “Load More” button, or interact with a tab?

Crawl4AI handles this by giving you direct control over the browser.

```python
import asyncio

from crawl4ai import (
    AsyncWebCrawler,
    BrowserConfig,  # Note: Browser-level config
    CrawlerRunConfig,
    CacheMode,
)


async def crawl_dynamic_page():
    print("Crawling a dynamic page that requires JS interaction...")

    # 1. Configure the browser to execute JavaScript
    browser_config = BrowserConfig(
        headless=True,
        java_script_enabled=True,  # Make sure JS is turned on
    )

    # 2. Define JavaScript code to run *on the page*
    # This code will click all the tabs on the target page
    # to force their content to load into the DOM.
    js_to_run = """
    (async () => {
        const tabs = document.querySelectorAll("section.charge-methodology.tabs-menu-3 > div");
        for (let tab of tabs) {
            tab.scrollIntoView();
            tab.click();
            // Wait for the tab content to (hopefully) load
            await new Promise(r => setTimeout(r, 500));
        }
    })();
    """

    # 3. Configure the run to use the JS code and *wait*
    crawler_config = CrawlerRunConfig(
        cache_mode=CacheMode.BYPASS,
        js_code=[js_to_run],  # A list of JS snippets to execute
        # This is the *correct* way to wait.
        # It waits for a JS expression to be true.
        wait_for="js:() => document.querySelectorAll('section.charge-methodology.w-tab-content > div').length > 0",
    )

    async with AsyncWebCrawler(config=browser_config) as crawler:
        result = await crawler.arun(
            url="https://www.kidocode.com/degrees/technology",  # A real dynamic page
            config=crawler_config,
        )

        if result.success:
            print("Crawl successful! Markdown now contains dynamic content:")
            print(result.markdown)
        else:
            print(f"Crawl failed: {result.error_message}")


if __name__ == "__main__":
    asyncio.run(crawl_dynamic_page())
```

Code Breakdown:

- BrowserConfig(java_script_enabled=True): This is passed to the AsyncWebCrawler constructor and sets the global browser state.
- js_code=[…]: This is the real power. You pass a list of JavaScript strings to CrawlerRunConfig. Crawl4AI will execute these in the page’s console, letting you simulate clicks, scrolls, or any other user interaction.
- wait_for="…": This is the most important part of dynamic scraping. Do not use time.sleep()! Instead, give the crawler a condition to wait for. This can be a CSS selector ("css:.my-element") or, as shown here, a JavaScript expression that must return true ("js:() => window.loaded === true"). The crawl won’t finish until this condition is met or it times out.
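Why is a condition better than a fixed sleep? A condition returns as soon as the content is there and gives up after a bounded time. A sleep is either too short (you miss content) or too long (you waste time). The toy below shows that wait-until-true-or-timeout pattern with plain asyncio. It is a conceptual sketch only: the `wait_until` helper and the simulated "page" dictionary are made up, and Crawl4AI evaluates its wait_for condition inside the browser, not like this.

```python
import asyncio
import time


async def wait_until(condition, timeout=5.0, interval=0.05):
    """Poll condition() until it is truthy (True) or the timeout passes (False)."""
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        await asyncio.sleep(interval)


async def demo():
    page = {"items": []}  # stand-in for a DOM that fills in later

    async def load_later():
        await asyncio.sleep(0.2)  # simulated network / rendering delay
        page["items"].append("tab content")

    loader = asyncio.create_task(load_later())
    appeared = await wait_until(lambda: len(page["items"]) > 0, timeout=2.0)
    await loader
    never = await wait_until(lambda: False, timeout=0.2)
    return appeared, never


print(asyncio.run(demo()))
```

The first wait succeeds shortly after the content shows up, well before its 2-second limit. The second one gives up cleanly, which is the behavior you want from a crawl that would otherwise hang.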

Essential: Robust Error Handling

I’ve shown you the happy path. But in production, pages 404, servers time out, and networks fail. A tutorial shows code that works; a guide you can rely on also shows what to do when that code fails. Scraping is inherently unreliable, so you must anticipate failure.

Here is a robust pattern you can adapt for crawling multiple URLs.

```python
import asyncio

from crawl4ai import AsyncWebCrawler, CrawlerRunConfig


async def robust_crawl(url: str, crawler: AsyncWebCrawler):
    """
    A robust wrapper for a single crawl, handling both
    system-level and crawl-level errors.
    """
    try:
        # You can set a per-page timeout
        config = CrawlerRunConfig(page_timeout=15000)  # 15 seconds
        result = await crawler.arun(url, config=config)

        if result.success:
            print(f"[OK] {url}")
            return result.markdown
        else:
            # Crawler-level error (e.g., 404, 500, page blocked)
            print(f"[FAILED] {url}: {result.error_message}")
            return None
    except asyncio.TimeoutError:
        # Operation timed out
        print(f"[TIMEOUT] {url}: The crawl operation took too long.")
        return None
    except Exception as e:
        # Other exceptions (network, setup, strategy error, etc.)
        print(f"[ERROR] {url}: {e}")
        return None


async def main():
    urls_to_crawl = [
        "https://www.nbcnews.com/business",
        "https://this-site-does-not-exist.example.com",
        "https://httpbin.org/status/404",
    ]

    print(f"Starting robust batch crawl for {len(urls_to_crawl)} URLs...")

    async with AsyncWebCrawler() as crawler:
        # Create a list of 'tasks' to run concurrently
        tasks = [robust_crawl(url, crawler) for url in urls_to_crawl]
        # asyncio.gather runs all tasks concurrently
        results = await asyncio.gather(*tasks)

    print("\n--- CRAWL COMPLETE ---")
    successful_crawls = 0
    for res in results:
        if res:
            successful_crawls += 1
    print(f"Successfully crawled {successful_crawls}/{len(urls_to_crawl)} pages.")


if __name__ == "__main__":
    asyncio.run(main())
```

This pattern is robust because it separates two types of failures:

- try…except: Catches system-level or network-level failures (like a TimeoutError).
- if result.success: Catches crawl-level failures (like the server returning a 404 or 503 error).
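A natural next step, if you find many failures are transient, is to retry with a growing delay. The sketch below is one possible way to layer that on top of a function like robust_crawl. The `crawl_with_retries` helper and its `attempts` and `base_delay` parameters are my own additions, not part of Crawl4AI. It assumes the wrapped function returns None on failure, and the flaky fetcher exists only for the demo.

```python
import asyncio


async def crawl_with_retries(fetch, url, attempts=3, base_delay=0.5):
    """Call fetch(url) until it returns content, doubling the delay between tries."""
    for attempt in range(1, attempts + 1):
        content = await fetch(url)
        if content is not None:
            return content
        if attempt < attempts:
            # Delays grow as base_delay, 2x, 4x, ... between attempts.
            await asyncio.sleep(base_delay * 2 ** (attempt - 1))
    return None


async def demo():
    calls = {"count": 0}

    async def flaky_fetch(url):
        # Simulated fetcher: fails twice, then succeeds.
        calls["count"] += 1
        return "# Page content" if calls["count"] >= 3 else None

    content = await crawl_with_retries(flaky_fetch, "https://example.com", base_delay=0.01)
    return content, calls["count"]


print(asyncio.run(demo()))
```

With the real crawler, `fetch` could be something like `lambda u: robust_crawl(u, crawler)`. Keep in mind that retrying only helps with temporary problems; a genuine 404 will fail every time, so cap the attempts.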

Best Practices for Production-Ready Crawling

Running a one-off script is easy. Running a production-scale crawler requires you to be a good web citizen and optimize for performance.

Ethical & Responsible Crawling (A Feature, Not an Afterthought)

The web runs on shared trust. Don’t abuse it. The Crawl4AI community has had these discussions, and as a result, the library gives you the features to be ethical.

1. Respect robots.txt: This is the web’s “do-not-knock” list. It’s a file where websites state which parts they don’t want bots to crawl. By default, Crawl4AI ignores this (check_robots_txt=False). You should opt-in to being polite.

```python
# Be a good citizen!
config = CrawlerRunConfig(check_robots_txt=True)
```

When enabled, Crawl4AI will check the robots.txt file (and cache it in SQLite for efficiency) and will not crawl disallowed URLs.

2. Set a Clear User-Agent: Identify your bot so system admins know who you are.

```python
# Tell them who you are! (replace the placeholder address with your own contact)
config = BrowserConfig(
    user_agent="My-AI-Research-Bot/1.0 (mailto:you@example.com)"
)
```

3. Manage Rate Limits: Don’t hammer a server with hundreds of concurrent requests. This is a great way to get your IP banned. When crawling many URLs, use the built-in concurrency limiter.

```python
# Limit to 3 parallel requests
config = CrawlerRunConfig(semaphore_count=3)

# ...then use arun_many()
# results = await crawler.arun_many(urls, config=config)
```

4. Read the Terms of Service: robots.txt is a request, not a law. The website’s Terms of Service is the legally binding document. Always check it before scraping commercially.
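To demystify what a robots.txt check and a concurrency limit actually do, here is a standard-library sketch of both ideas. It is purely conceptual: Crawl4AI already provides check_robots_txt and semaphore_count, so you would not normally write this yourself. The `build_robots` and `fetch_politely` helpers are hypothetical, and the rules and fetch function are stand-ins.

```python
import asyncio
from urllib.robotparser import RobotFileParser

# Example rules, invented for this demo.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def build_robots(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


async def fetch_politely(urls, fetch, robots, user_agent, max_concurrency=3):
    """Skip disallowed URLs and run at most max_concurrency fetches at once."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def fetch_one(url):
        if not robots.can_fetch(user_agent, url):
            return url, None  # skipped: disallowed by robots.txt
        async with semaphore:
            return url, await fetch(url)

    return await asyncio.gather(*(fetch_one(url) for url in urls))


robots = build_robots(ROBOTS_TXT)
print(robots.can_fetch("My-AI-Research-Bot", "https://example.com/blog/post"))
print(robots.can_fetch("My-AI-Research-Bot", "https://example.com/private/data"))
```

The semaphore is the whole trick behind rate limiting here: no matter how many URLs you queue, only `max_concurrency` of them are ever in flight together.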

Performance Optimization

- Use arun_many() for Batches: Don’t use a for loop with await crawler.arun(). This runs your crawls one by one. To run them in parallel (up to your semaphore_count), use arun_many():

```python
import asyncio

from crawl4ai import AsyncWebCrawler, CrawlerRunConfig

# Placeholder URLs; swap in the pages you actually want to crawl
urls = ["https://example.com/page-1", "https://example.com/page-2", "https://example.com/page-3"]
config = CrawlerRunConfig(semaphore_count=5)  # 5 at a time


async def crawl_batch():
    async with AsyncWebCrawler() as crawler:
        results = await crawler.arun_many(urls, config=config)
        for res in results:
            if res.success:
                print(f"Got: {res.url}")
            else:
                print(f"Failed: {res.url} ({res.error_message})")


asyncio.run(crawl_batch())
```

- Smart Caching: If you’re re-running a crawl, don’t re-fetch pages you already have.
  - CacheMode.ENABLED: Use the cache.
  - CacheMode.BYPASS: Default. Always get fresh content.

- Use Proxies: For large-scale crawling, you’ll need to rotate your IP address. Crawl4AI supports this via BrowserConfig. You’ll typically get these from a commercial provider.

```python
proxy_config = {
    "server": "http://my.proxy.com:8080",
    "username": "myuser",
    "password": "mypassword",
}

browser_config = BrowserConfig(proxy_config=proxy_config)
```

The “Killer App”: Integrating Crawl4AI with LangChain for RAG

This is what we’ve been building towards. How do we connect our new, powerful crawler directly into a RAG pipeline?

The community has been asking for this, and the answer is to build a custom LangChain DocumentLoader. This is the bridge that feeds data into the LangChain ecosystem.

Here is the complete, reusable code to create a Crawl4AILoader for your own projects.

```python
import asyncio
from typing import List, Optional

from crawl4ai import AsyncWebCrawler, BrowserConfig, CrawlerRunConfig
# Current LangChain releases expose these classes from langchain_core
from langchain_core.documents import Document
from langchain_core.document_loaders import BaseLoader


# 1. The Custom LangChain Document Loader
class Crawl4AILoader(BaseLoader):
    """
    A LangChain DocumentLoader that uses Crawl4AI to fetch
    and parse web content into clean, RAG-ready Markdown.
    """

    def __init__(self,
                 url: str,
                 browser_config: Optional[BrowserConfig] = None,
                 run_config: Optional[CrawlerRunConfig] = None):
        """
        Initialize the loader with a URL and optional Crawl4AI configs.
        """
        self.url = url
        self.browser_config = browser_config or BrowserConfig()
        self.run_config = run_config or CrawlerRunConfig()

        # IMPORTANT: For RAG, we want Markdown, not structured JSON.
        # Drop any extraction_strategy so this stays a plain Markdown crawl.
        if self.run_config.extraction_strategy:
            print("Warning: Removing extraction_strategy to ensure Markdown output for LangChain.")
            self.run_config.extraction_strategy = None

    async def _crawl(self) -> Document:
        """Helper to run the async crawl."""
        print(f"Crawl4AI: Loading document from {self.url}")

        # We create a new crawler each time for simplicity.
        # For bulk loading, you could reuse a single crawler instance.
        async with AsyncWebCrawler(config=self.browser_config) as crawler:
            result = await crawler.arun(self.url, config=self.run_config)

        if not result.success:
            # Still return a document, but with error info
            return Document(
                page_content="",
                metadata={
                    "source": self.url,
                    "error": result.error_message,
                },
            )

        # This is the bridge!
        # Package the result into a LangChain Document.
        return Document(
            page_content=str(result.markdown),
            metadata={
                "source": self.url,
                "title": result.metadata.get("title", ""),
                "word_count": result.metadata.get("word_count", 0),
                "language": result.metadata.get("language", ""),
            },
        )

    def load(self) -> List[Document]:
        """Sync load method (required by BaseLoader)."""
        return asyncio.run(self.aload())

    async def aload(self) -> List[Document]:
        """Async load method."""
        doc = await self._crawl()
        return [doc]


# ---
# 2. How to use it in your RAG pipeline
# (This part is a conceptual demo)
# ---
async def main_rag_pipeline():
    # --- STEP 1: LOAD ---
    # Use our new loader to get data from a dynamic page.
    # We'll enable JS and wait for a selector.
    print("--- 1. LOADING DOCUMENT ---")
    loader = Crawl4AILoader(
        url="https://crawl4ai.com",
        # JavaScript is a browser-level setting, so it goes in BrowserConfig
        browser_config=BrowserConfig(java_script_enabled=True),
        run_config=CrawlerRunConfig(wait_for="css:h1"),
    )

    # Load the document (aload() returns a list with a single Document)
    documents = await loader.aload()
    doc = documents[0]

    if not doc.page_content:
        print(f"Failed to load document: {doc.metadata.get('error', 'empty page')}")
        return

    print(f"Source: {doc.metadata['source']}")
    print(f"Title: {doc.metadata['title']}")
    print(f"Content (snippet): {doc.page_content[:200]}...")

    # --- STEP 2: SPLIT, EMBED, STORE ---
    # Now you just use standard LangChain!
    print("\n--- 2. SPLITTING, EMBEDDING, STORING ---")
    # text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=100)
    # splits = text_splitter.split_documents(documents)
    # print(f"Document split into {len(splits)} chunks.")
    # embeddings = OpenAIEmbeddings()
    # vectorstore = FAISS.from_documents(splits, embeddings)
    # print("Vector store created.")

    # --- STEP 3: RETRIEVE & GENERATE ---
    # retriever = vectorstore.as_retriever()
    # prompt = ...
    # llm = ...
    # chain = (
    #     {"context": retriever, "question": RunnablePassthrough()}
    #     | prompt
    #     | llm
    # )
    # print(chain.invoke("What is Crawl4AI?"))
    print("--- 3. DOCUMENT IS READY FOR RAG PIPELINE ---")


if __name__ == "__main__":
    asyncio.run(main_rag_pipeline())
```

This Crawl4AILoader class is your reusable bridge. It takes a URL and your Crawl4AI configs, runs the crawl, and packages the clean result.markdown and result.metadata directly into the Document object that LangChain expects. From there, you’re just using standard LangChain components to build your RAG app.
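If you are wondering what the chunk_size and chunk_overlap settings in the splitting step mean, this tiny sketch shows the basic idea on a plain string. It is a simplification: LangChain's RecursiveCharacterTextSplitter also tries to break on separators like paragraphs, which this does not attempt. The `split_with_overlap` function is a hypothetical stand-in.

```python
def split_with_overlap(text, chunk_size=1000, chunk_overlap=100):
    """Cut text into chunk_size pieces, each sharing chunk_overlap chars with the previous one."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last chunk already reaches the end of the text
    return chunks


for chunk in split_with_overlap("abcdefghij", chunk_size=4, chunk_overlap=1):
    print(chunk)
```

Each chunk starts with the last character of the previous one. That overlap is what helps a retriever avoid losing a sentence that happens to straddle a chunk boundary.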

Conclusion: Join the Community and Start Building

Crawl4AI is, in my opinion, one of the most exciting open-source tools to emerge for AI developers. It’s not just another scraper. It’s a modern, AI-first knowledge ingestion tool that solves the real bottleneck in RAG and agent development: getting clean, semantic content from the messy, dynamic web.

We’ve covered a ton:

- Why it’s a “paradigm shift” from tools like BeautifulSoup and Scrapy.

- How to install it (including the critical crawl4ai-setup step).

- Practical code for basic crawls, dual-mode structured extraction (CSS vs. LLM), and handling complex JavaScript.

- Best practices for ethical crawling (robots.txt, rate limits) and performance.

- The “killer app”: A complete, reusable Crawl4AILoader to plug it directly into your LangChain RAG pipelines.

The library is growing fast, with new features like self-hosting platforms with real-time monitoring and deep-crawling capabilities (BFS/DFS).

My advice? Stop fighting with requests and regex. Give Crawl4AI a try.

Go check out the Crawl4AI GitHub repository, give it a star, and read through the official documentation. The project is active and welcomes contributions.