The AI landscape is evolving rapidly, and Google’s introduction of Gemini 3 Pro marks a significant milestone. Released in preview on November 18, 2025, this model is designed to handle complex reasoning, true multimodal tasks, and long-horizon agentic workflows.

For automation engineers using n8n, Gemini 3 Pro offers a powerful new “brain” for building sophisticated AI agents. In this article, we will explore the model’s capabilities based on published benchmark results and demonstrate how to integrate it into n8n workflows for tasks ranging from image analysis to automated coding.

Gemini 3 Pro: A Leap in AI Capabilities

Gemini 3 Pro is not just an incremental update; Google positions it as its most intelligent model to date, capable of reasoning over text, images, audio, video, and code.

Key Technical Highlights

- Multimodal Reasoning: On MMMU-Pro, a benchmark for multimodal understanding and reasoning, Gemini 3 Pro scored 81.0%, well ahead of Gemini 2.5 Pro and Claude Sonnet 4.5 (68.0% each). This makes it well suited for workflows that process mixed media inputs.

- Visual Understanding: The model is particularly strong at “seeing” and interpreting screens. On the ScreenSpot-Pro benchmark, Gemini 3 Pro scored 72.7%, far ahead of Claude Sonnet 4.5 (36.2%) and GPT-5.1 (3.5%).

- Massive Context Window: Gemini 3 Pro accepts up to 1 million input tokens and can generate up to 64k output tokens. This allows it to ingest and reason over large volumes of data, such as entire financial reports or codebases, in a single request.

- Agentic Performance: In the Vending-Bench 2 test, which measures performance on long-horizon agentic tasks, Gemini 3 Pro achieved a mean net worth of $5,478.16, compared with $573.64 for Gemini 2.5 Pro, indicating much stronger capability in managing complex, multi-step processes.

Pricing

As a premium model, Gemini 3 Pro is priced accordingly. Input tokens cost $2 per 1M tokens for prompts up to 200k tokens and $4 per 1M for longer prompts. Output tokens cost $12 per 1M for prompts up to 200k tokens and $18 per 1M for longer prompts. These prices are higher than Gemini 2.5 Pro’s, so the premium is easiest to justify for tasks that require high-level reasoning.
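The tiered pricing can be turned into a quick per-request estimate. This sketch assumes the tier is selected by prompt size (up to 200k tokens versus more) and that `output_tokens` already includes any thinking tokens, which Google bills as output; `estimate_cost_usd` is a hypothetical helper name, and the prices are the preview prices quoted above.

```python
# Rough cost estimator for a single Gemini 3 Pro preview request.
# Assumption: the price tier is chosen by prompt size, and output_tokens
# includes thinking tokens (billed at the output rate).

PRICE_TIER_THRESHOLD = 200_000  # prompt tokens

# (input USD per 1M tokens, output USD per 1M tokens)
STANDARD_TIER = (2.00, 12.00)
LONG_PROMPT_TIER = (4.00, 18.00)


def estimate_cost_usd(prompt_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of one request."""
    if prompt_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    input_rate, output_rate = (
        STANDARD_TIER if prompt_tokens <= PRICE_TIER_THRESHOLD else LONG_PROMPT_TIER
    )
    return (prompt_tokens * input_rate + output_tokens * output_rate) / 1_000_000


if __name__ == "__main__":
    # 100k-token prompt, 10k-token answer: 0.20 + 0.12 USD
    print(f"{estimate_cost_usd(100_000, 10_000):.2f}")
    # 300k-token prompt falls into the higher tier: 1.20 + 0.36 USD
    print(f"{estimate_cost_usd(300_000, 20_000):.2f}")
```

Because crossing the 200k threshold doubles the input rate for the whole prompt, trimming a prompt just below it can noticeably reduce cost.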

4 Ways to Integrate Gemini 3 Pro with n8n

The accompanying workflow demonstrates four ways to connect Gemini 3 Pro in n8n:

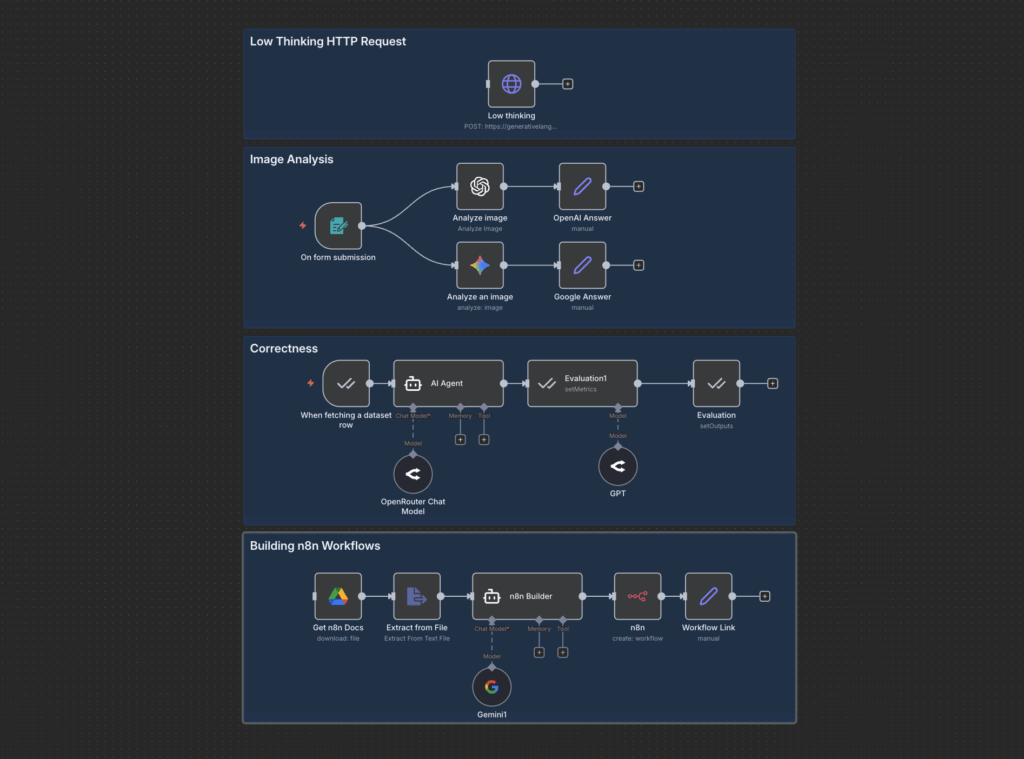

Download the n8n workflow: https://romhub.io/n8n/Gemini_3_Pro

1. The Native Google Gemini Node

For standard tasks, the native Google Gemini node in n8n is the easiest entry point. It supports operations like “Analyze an image”. You can input binary data or image URLs and prompt the model to describe details, such as identifying specific damage in a photograph.
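The node builds the API request for you. If you ever need to reproduce an image-analysis call yourself, for example in an HTTP Request node, the following sketch shows one way to assemble a `generateContent` request body containing an inline image and a text prompt. It assumes the public REST format (camelCase `inlineData` and `mimeType` fields) and does not describe how the n8n node is implemented internally; `build_image_analysis_body` is a hypothetical helper name.

```python
import base64
import json


def build_image_analysis_body(image_bytes: bytes, mime_type: str, prompt: str) -> dict:
    """Build a generateContent request body with one inline image and a text prompt."""
    return {
        "contents": [
            {
                "role": "user",
                "parts": [
                    {
                        # Inline image data must be base64-encoded text.
                        "inlineData": {
                            "mimeType": mime_type,
                            "data": base64.b64encode(image_bytes).decode("ascii"),
                        }
                    },
                    {"text": prompt},
                ],
            }
        ]
    }


if __name__ == "__main__":
    placeholder_jpeg = b"\xff\xd8\xff\xe0 placeholder bytes, not a real image"
    body = build_image_analysis_body(
        placeholder_jpeg, "image/jpeg", "Describe in detail the type of damage."
    )
    print(json.dumps(body, indent=2))
```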

2. AI Agent with Chat Model

For building conversational agents, you can use the AI Agent node connected to a Google Gemini Chat Model. In the example workflow, an “n8n Builder” agent uses this setup to translate natural language user requests into functional n8n workflow JSON code.

3. OpenRouter Integration

To manage multiple LLMs centrally, OpenRouter is a robust alternative. The workflow connects an OpenRouter Chat Model node to an AI Agent. This lets you switch between Gemini 3 Pro and other models using a single OpenRouter API key, without reconfiguring credentials for each provider.
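OpenRouter exposes an OpenAI-compatible chat completions endpoint, so switching models comes down to changing one model string. The following sketch builds (but does not send) such a request with Python’s standard library. The model slug `google/gemini-3-pro-preview` is an assumption to verify against OpenRouter’s model list, and `build_openrouter_request` is a hypothetical helper.

```python
import json
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"

# Assumed slug; check OpenRouter's model list before relying on it.
GEMINI_3_PRO_SLUG = "google/gemini-3-pro-preview"


def build_openrouter_request(api_key: str, model: str, user_message: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request for OpenRouter."""
    payload = {
        "model": model,  # swapping models only changes this string
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_openrouter_request("YOUR_OPENROUTER_KEY", GEMINI_3_PRO_SLUG, "Summarize this workflow.")
    print(req.full_url, json.loads(req.data)["model"])
    # Sending requires a valid key and network access: urllib.request.urlopen(req)
```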

4. Direct HTTP Request (Advanced Control)

To use features not yet exposed in the native nodes, such as the thinking configuration (thinkingConfig), you need a direct HTTP Request.

The workflow includes a “Low thinking” node that posts directly to the Gemini API URL: https://generativelanguage.googleapis.com/v1beta/models/gemini-3-pro-preview:generateContent

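This method allows you to send a full request body, including settings such as the thinking level:

```json
{
  "contents": [
    {
      "role": "user",
      "parts": [{ "text": "Your prompt here" }]
    }
  ],
  "generationConfig": {
    "thinkingConfig": {
      "thinkingLevel": "low"
    }
  }
}
```

Setting thinkingLevel to “low” trades reasoning depth for lower latency, so this control is useful when tuning a workflow for speed versus quality.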
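To try the same call outside n8n, here is a minimal Python sketch using only the standard library. It assumes the API key is sent in the `x-goog-api-key` header; `build_low_thinking_request` and `extract_text` are hypothetical helper names. The request is only built, not sent, so the example runs without network access.

```python
import json
import urllib.request

MODEL_URL = (
    "https://generativelanguage.googleapis.com/v1beta/models/"
    "gemini-3-pro-preview:generateContent"
)


def build_low_thinking_request(api_key: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a generateContent request with a low thinking level."""
    body = {
        "contents": [{"role": "user", "parts": [{"text": prompt}]}],
        "generationConfig": {"thinkingConfig": {"thinkingLevel": "low"}},
    }
    return urllib.request.Request(
        MODEL_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"x-goog-api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )


def extract_text(response: dict) -> str:
    """Join the answer text of the first candidate, skipping thought-summary parts."""
    parts = response["candidates"][0]["content"]["parts"]
    return "".join(p["text"] for p in parts if "text" in p and not p.get("thought"))


if __name__ == "__main__":
    req = build_low_thinking_request("YOUR_API_KEY", "List three uses of n8n.")
    print(json.loads(req.data)["generationConfig"])
    # Sending requires a valid key and network access:
    # with urllib.request.urlopen(req) as resp:
    #     print(extract_text(json.load(resp)))
```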

Practical Use Cases in n8n

The provided workflow illustrates several powerful applications for Gemini 3 Pro:

1. Deep Visual Analysis

The workflow compares OpenAI and Gemini 3 Pro on an “Analyze image” task. Given an image and the prompt “describe in detail the type of damage”, Gemini 3 Pro draws on its visual reasoning strengths (reflected in its strong results on OmniDocBench and Video-MMMU) to produce granular assessments that go beyond simple object detection.

2. Long-Context Document Reasoning

With its 1M-token context window, Gemini 3 Pro can process very long documents in a single request. The workflow includes an Evaluation node that fetches dataset rows for analysis, such as questions about a long filing like Apple’s 10-K financial report. In this setup, the model can be asked to extract specific financial figures or answer complex questions across hundreds of pages of text.
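Before sending a very long document, a rough pre-flight check can catch inputs that will not fit. This sketch assumes about four characters per token, a common heuristic for English text rather than Gemini’s actual tokenizer, and uses the rounded 1 million token input limit quoted above; for exact counts, the Gemini API offers a countTokens method. The helper names are hypothetical.

```python
# Rough check: will document + question fit in the input context window?
# Assumptions: ~4 characters per token (heuristic only) and a 1,000,000-token
# input limit (the rounded figure quoted in this article).

CONTEXT_LIMIT_TOKENS = 1_000_000
CHARS_PER_TOKEN = 4  # heuristic, not Gemini's tokenizer


def estimated_tokens(text: str) -> int:
    # Ceiling division: any non-empty text counts as at least one token.
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(document: str, question: str, safety_margin: float = 0.9) -> bool:
    """Return True if the estimated prompt size stays within the margin of the limit."""
    budget = int(CONTEXT_LIMIT_TOKENS * safety_margin)
    return estimated_tokens(document) + estimated_tokens(question) <= budget


if __name__ == "__main__":
    report = "Revenue increased year over year. " * 50_000  # stand-in for a long filing
    print(estimated_tokens(report), fits_in_context(report, "What was total revenue?"))
```

The safety margin leaves headroom for the heuristic’s error; if a document is close to the budget, an exact countTokens call is the better guide.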

3. Automated Workflow Generation

Gemini 3 Pro’s coding proficiency (an Elo rating of 2,439 on LiveCodeBench Pro) makes it a strong architect for automation. The n8n Builder agent in the workflow takes a user’s chat input and generates a complete JSON object for a new n8n workflow. It selects nodes, defines parameters, and maps connections, turning plain English instructions into importable automation, though generated workflows should still be checked before deployment.
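Because a model can still emit JSON that does not import cleanly, a lightweight structural check on the agent’s output is a useful guard. This sketch assumes the usual shape of exported n8n workflow JSON (a `nodes` list and a `connections` object keyed by source node name) and checks structure only, not whether node types or parameters are valid; `validate_workflow_json` is a hypothetical helper.

```python
import json


def validate_workflow_json(raw: str) -> list:
    """Return structural problems in a generated n8n workflow JSON string (empty = OK)."""
    try:
        workflow = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc}"]
    if not isinstance(workflow, dict):
        return ["top-level value must be an object"]

    nodes = workflow.get("nodes")
    if not isinstance(nodes, list) or not nodes:
        return ["missing or empty 'nodes' list"]

    problems = []
    names = set()
    for i, node in enumerate(nodes):
        if not isinstance(node, dict):
            problems.append(f"node {i} is not an object")
            continue
        name = node.get("name")
        if not name:
            problems.append(f"node {i} has no name")
        elif name in names:
            problems.append(f"duplicate node name: {name}")
        else:
            names.add(name)
        if not node.get("type"):
            problems.append(f"node {name or i} has no type")

    # Assumed shape: {source name: {output type: [[{"node": target, ...}, ...], ...]}}
    connections = workflow.get("connections", {})
    if not isinstance(connections, dict):
        return problems + ["'connections' must be an object"]
    for source, outputs in connections.items():
        if source not in names:
            problems.append(f"connection from unknown node: {source}")
        if not isinstance(outputs, dict):
            problems.append(f"connections of {source} must be an object")
            continue
        for branches in outputs.values():
            for branch in branches or []:
                for link in branch or []:
                    target = link.get("node") if isinstance(link, dict) else None
                    if target not in names:
                        problems.append(f"connection to unknown node: {target}")
    return problems


if __name__ == "__main__":
    generated = (
        '{"nodes": [{"name": "Start", "type": "n8n-nodes-base.manualTrigger"}],'
        ' "connections": {"Start": {"main": [[{"node": "Set", "type": "main", "index": 0}]]}}}'
    )
    print(validate_workflow_json(generated))  # flags the missing "Set" node
```

In a workflow, a check like this could run on the agent’s output and feed any problems back to the agent for another attempt.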

The “Thought Signatures” Challenge

When using Gemini 3 Pro for Tool Calling (Function Calling) via API, developers face a specific technical requirement regarding “Thought Signatures.”

Gemini 3 Pro’s “thinking” process produces internal reasoning, and the API represents that reasoning as encrypted “thought signatures” attached to parts of the model’s response. To maintain reasoning context across multi-turn tool executions, the API requires these signatures to be passed back unchanged in subsequent requests. Currently, standard n8n nodes may not handle this field automatically, which can cause errors when an agent tries to execute a tool.

Solution: Until native support is added, the HTTP Request node approach (as shown in the “Low thinking” node) is a reliable workaround. It lets you capture the thought signature from each model response and include it in the JSON payload of the next request, so the model keeps its reasoning state throughout the interaction.
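The bookkeeping this workaround needs can be sketched in Python. The sketch assumes the REST response shape in which each `functionCall` part carries its own `thoughtSignature` field, and that tool results go back in a user-role turn as `functionResponse` parts; `build_next_contents` and the `get_weather` tool are hypothetical. The key idea is to append the model’s turn exactly as received so that no signature is dropped.

```python
import json


def extract_function_calls(model_content: dict) -> list:
    """Return the functionCall objects from a model turn (candidates[0].content)."""
    return [p["functionCall"] for p in model_content.get("parts", []) if "functionCall" in p]


def build_next_contents(history: list, model_content: dict, tool_results: list) -> list:
    """Build the `contents` array for the follow-up generateContent request.

    history: the contents sent in the previous request.
    model_content: candidates[0].content from the response, appended unchanged
        so every part keeps its "thoughtSignature" field.
    tool_results: one JSON-serializable dict per functionCall, in the same order.
    """
    calls = extract_function_calls(model_content)
    if len(calls) != len(tool_results):
        raise ValueError("expected exactly one tool result per function call")
    response_parts = [
        {"functionResponse": {"name": call["name"], "response": result}}
        for call, result in zip(calls, tool_results)
    ]
    return history + [model_content, {"role": "user", "parts": response_parts}]


if __name__ == "__main__":
    history = [{"role": "user", "parts": [{"text": "What is the weather in Paris?"}]}]
    # Shape of a model turn requesting the hypothetical get_weather tool.
    model_turn = {
        "role": "model",
        "parts": [
            {
                "functionCall": {"name": "get_weather", "args": {"city": "Paris"}},
                "thoughtSignature": "<opaque signature from the API>",
            }
        ],
    }
    contents = build_next_contents(history, model_turn, [{"temp_c": 18}])
    print(json.dumps({"contents": contents}, indent=2))
```

In an n8n HTTP Request node, the same idea applies: keep the model’s returned content object intact in the next request body rather than rebuilding it from the function name and arguments.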

Conclusion

Gemini 3 Pro represents a major step forward for AI automation. By combining its multimodal reasoning, massive context window, and agentic capabilities with the flexibility of n8n, developers can build systems that analyze visual data, reason over long documents, and write code autonomously. While early integration requires some manual configuration via HTTP requests, the potential for innovation is immense.

Start experimenting with Gemini 3 Pro in your n8n workflows today to unlock the next level of intelligent automation.