What We Learned From Testing AI Video Models Inside Loova

AI video tools are no longer experimental. By 2026, most marketing teams, creators, and businesses have already accepted AI video generation as part of their content stack. The real challenge is no longer adoption. The challenge is operational efficiency.

Teams are producing more videos than ever. Yet many still feel slower, more fragmented, and more overwhelmed than before.

The reason is simple.

Most teams are optimizing for output, not workflow.

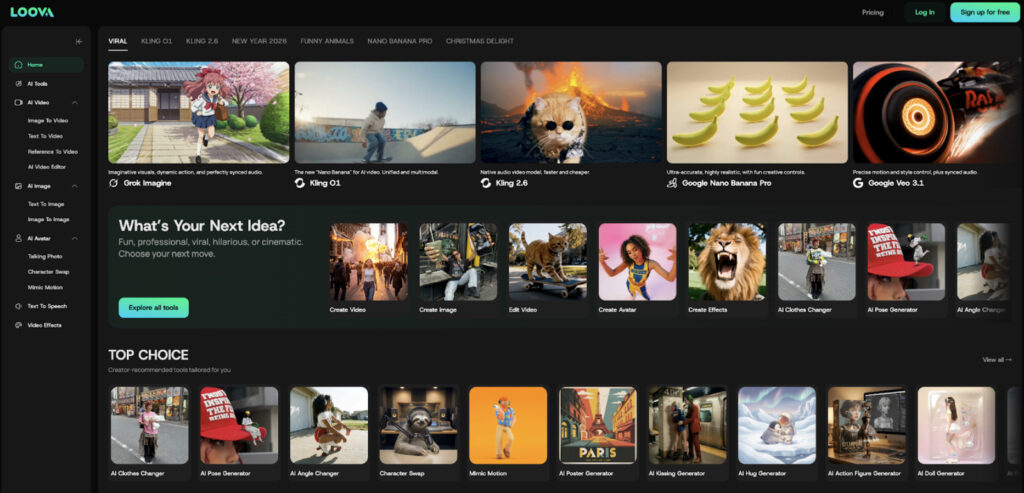

This article explores how modern AI video models actually change content workflows in 2026, based on hands-on testing inside Loova, a platform that integrates multiple leading AI video models into a single environment.

The findings are not about which model looks best. They are about how model choice reshapes speed, cost, iteration, and decision-making.

The Hidden Cost of Fragmented AI Video Tools

Most teams did not adopt AI video tools all at once.

They added them gradually:

- One tool for text-to-video

- One tool for image animation

- One tool for editing

- One tool for voiceovers

- One tool for effects…

On paper, this seems flexible. In practice, it creates friction.

Each additional tool adds:

- Context switching

- File transfers

- Version confusion

- Decision fatigue

AI video generation becomes faster, but the workflow becomes slower. This is the paradox many teams face in 2026. AI removes production barriers but introduces operational complexity.

Why Workflow Matters More Than Output Quality

In isolation, many AI video models perform well.

The problem emerges when teams need to:

- Test ideas quickly

- Compare formats

- Repurpose content

- Localize messaging

- Scale campaigns

At this point, workflow efficiency becomes more important than visual perfection. A slightly less polished video that ships today often outperforms a perfect video that ships next week.

This is where unified AI platforms like Loova change the equation.

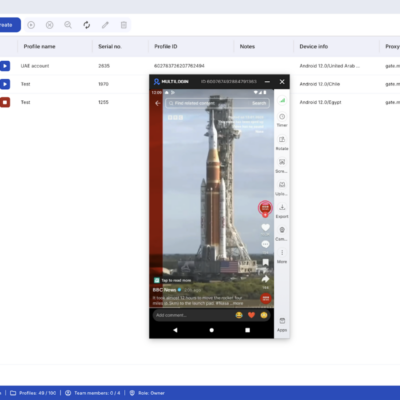

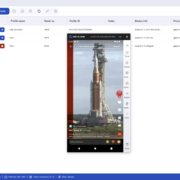

Loova as a Testing Environment, Not Just a Tool

Loova is often described as an all-in-one AI video platform. That description is accurate, but incomplete.

Its real value lies in workflow consolidation.

By integrating multiple AI video models in one place, Loova allows teams to:

- Test different models without switching tools

- Keep inputs consistent across models

- Compare outputs side by side

- Reduce setup time for experimentation

This makes it possible to evaluate AI video models based on operational impact, not just aesthetics.

What We Tested and Why It Matters

Inside Loova, we tested several leading AI video models across common real-world scenarios:

- Short-form social media

- Product demos

- Explainer videos

- Marketing ads

- Creative visuals

Instead of asking “Which model is best?”, we asked:

- How fast can teams iterate?

- How predictable is the output?

- How much manual intervention is required?

- How easily can content be repurposed?

The results reveal why some models scale better in real workflows than others.
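One way to make these four questions comparable across models is a simple weighted rubric. The sketch below is illustrative only: the criterion names, the weights, the 1-to-5 scale, and the scores for `model_a` and `model_b` are hypothetical placeholders, not measurements from our testing and not part of Loova.

```python
from dataclasses import dataclass

# Hypothetical weights for the four questions above; tune them to your team's priorities.
WEIGHTS = {
    "iteration_speed": 0.3,
    "predictability": 0.3,
    "low_manual_work": 0.2,
    "repurposability": 0.2,
}


@dataclass
class WorkflowScore:
    """One model's scores on the four workflow questions, each from 1 (poor) to 5 (strong)."""
    model: str
    iteration_speed: int
    predictability: int
    low_manual_work: int
    repurposability: int

    def weighted(self) -> float:
        # Weighted sum of the four criteria, rounded for readability.
        total = sum(getattr(self, name) * weight for name, weight in WEIGHTS.items())
        return round(total, 2)


def rank(scores):
    """Return scores ordered from best to worst workflow fit."""
    return sorted(scores, key=lambda s: s.weighted(), reverse=True)


# Placeholder values for illustration only, not test results.
placeholder_scores = [
    WorkflowScore("model_a", 5, 3, 4, 4),
    WorkflowScore("model_b", 2, 5, 3, 3),
]

for score in rank(placeholder_scores):
    print(score.model, score.weighted())
```

A team that cares most about predictability could raise that weight and see whether the ranking changes, which is the point of scoring workflow fit rather than looks.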

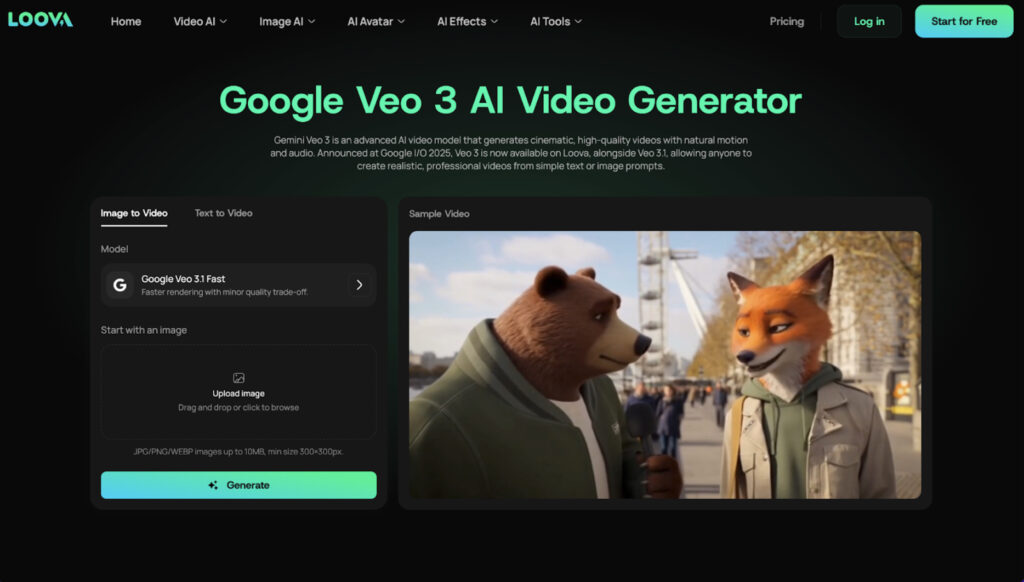

Model Category 1: Full Video Generation Models

Strengths

Models such as Veo 3.1 and Sora 2 Pro focus on generating complete videos from text or structured input.

They excel at:

- Narrative coherence

- Scene structure

- Professional tone

- Long-form content

Workflow Impact

In Loova, these models significantly reduce:

- Script-to-video time

- Editing handoffs

- Production coordination

They are ideal for:

- Marketing teams

- Corporate communication

- Educational content

Limitations

However, they often require:

- Clear upfront structure

- More planning before generation

This makes them less flexible for rapid experimentation.

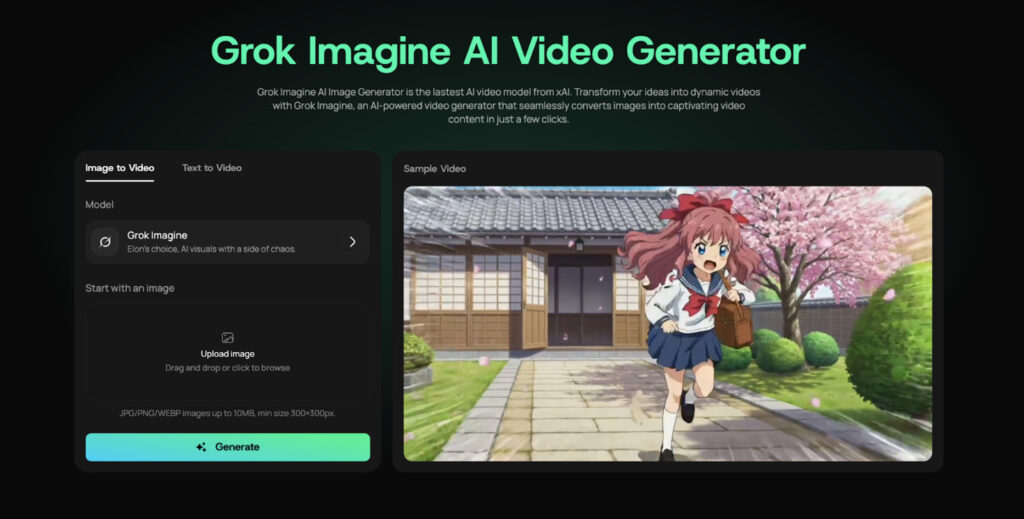

Model Category 2: Image-to-Video and Visual Expansion Models

Strengths

Creative image-to-video models such as Grok Imagine excel at turning static visuals into motion.

They support:

- Fast ideation

- Visual experimentation

- Trend-driven content

Workflow Impact

Inside Loova, these models:

- Enable quick iteration

- Reduce creative bottlenecks

- Support daily content output

They work well for:

- Social media teams

- Influencers

- Content experiments

Limitations

They are less suitable for:

- Structured messaging

- Long-form narratives

Using them outside their intended context often leads to inconsistent results.

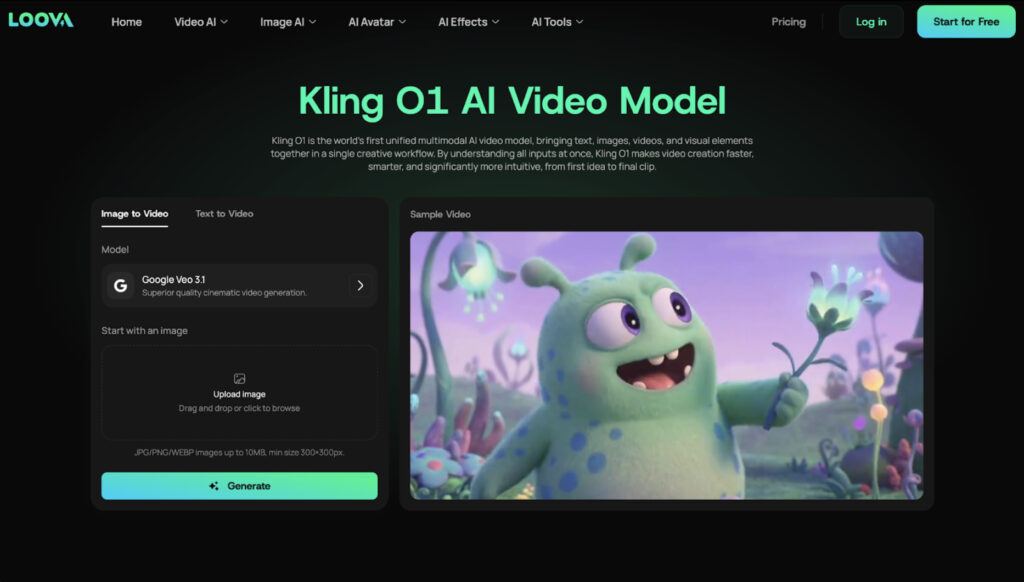

Model Category 3: Editing and Enhancement Models

Strengths

Models like Kling O1 and Kling 2.6 focus on editing efficiency.

They automate:

- Scene trimming

- Color correction

- Transitions

- Visual cleanup

Workflow Impact

These models reduce:

- Manual editing time

- Skill dependency

- Revision cycles

Inside Loova, they function as workflow accelerators, especially for teams handling large content volumes.

Limitations

They do not generate ideas. They optimize execution.

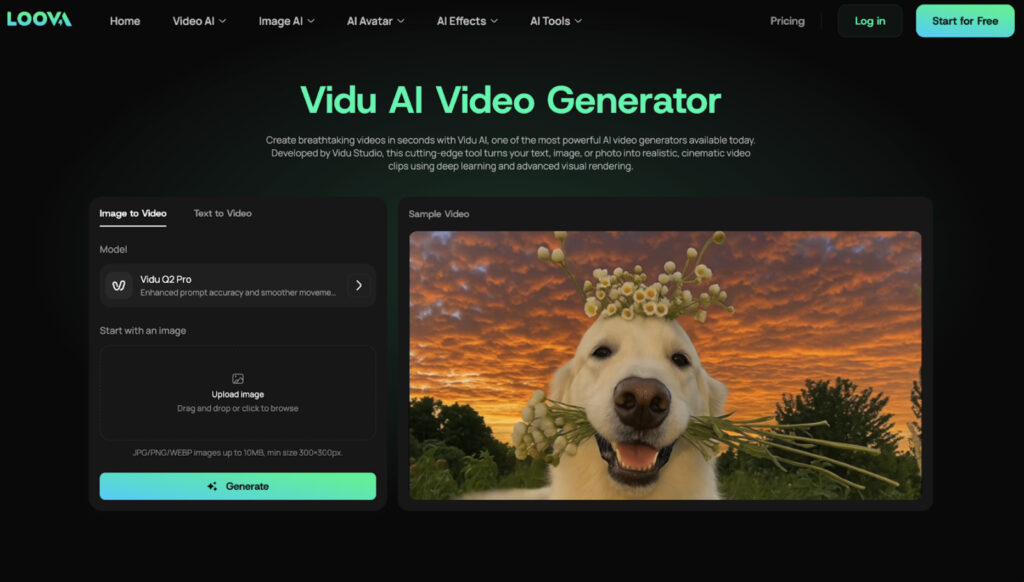

Model Category 4: Personalization and Character Models

Strengths

Models like Vidu Q2 Pro enable:

- Character animation

- Personalized video variations

Workflow Impact

Personalization shifts from manual production to automated variation.

In Loova, this allows teams to:

- Create multiple versions quickly

- Test personalized messaging

- Maintain visual consistency

The Real Bottleneck Is Decision-Making

After testing these models, one insight stood out. The biggest bottleneck in AI video workflows is not generation speed. It is decision-making.

Teams hesitate when they must:

- Choose tools

- Export files

- Reimport assets

- Rebuild prompts

Iteration slows. Ideas die early.

By keeping everything in one environment, Loova reduces decision friction. Teams test more because testing costs less.

Why Unified Platforms Win in 2026

In 2026, the competitive advantage is not access to AI models. Many tools offer that.

The advantage lies in:

- How fast teams learn what works

- How easily they discard what does not

- How cheaply they iterate

Unified platforms shorten the feedback loop between idea and result.

This is why workflow-centric AI platforms outperform single-purpose tools at scale.

A Practical Workflow for AI Video Teams

Based on testing, an effective workflow looks like this:

- Use fast visual models for idea exploration

- Validate concepts with lightweight outputs

- Switch to structured models for polished production

- Use editing models for refinement

- Use voice models for localization

- Repurpose across channels

Loova enables this entire cycle without breaking context.
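The six steps above can be written down as an ordered plan with one early exit: if a concept fails validation, nothing downstream runs. This is a minimal sketch, not a Loova API. The stage names, the category labels, and the `plan` function are hypothetical, and pairing validation with a fast visual model is our assumption.

```python
from typing import NamedTuple


class Stage(NamedTuple):
    name: str
    model_category: str  # descriptive label from this article, not a platform identifier


# Order mirrors the workflow list above.
PIPELINE = [
    Stage("explore ideas", "fast visual model"),
    Stage("validate concept", "fast visual model (lightweight output)"),  # assumption
    Stage("produce polished video", "structured full-video model"),
    Stage("refine", "editing model"),
    Stage("localize", "voice model"),
    Stage("repurpose across channels", "no specific model"),
]


def plan(concept: str, approved: bool) -> list[str]:
    """List the steps for a concept, stopping after validation if it was not approved."""
    steps = []
    for stage in PIPELINE:
        steps.append(f"{stage.name}: {concept} [{stage.model_category}]")
        if stage.name == "validate concept" and not approved:
            break  # discard early, before spending time on production
    return steps


for step in plan("spring launch teaser", approved=True):
    print(step)
```

The early exit is the design choice that matters here: rejected concepts cost two cheap steps instead of six.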

What This Means for the Future of AI Video

AI video models will continue to improve. But the real evolution is happening at the workflow level.

Teams that treat AI as a set of disconnected tools will struggle with complexity. Teams that treat AI as an integrated system will move faster, test more, and adapt better.

Conclusion

In 2026, AI video success is no longer about finding the single best model. It is about building workflows that reduce friction and accelerate decisions.

Testing AI video models inside a unified platform like Loova reveals a critical truth. The best model is the one that fits your workflow, not the one with the most features.

When tools disappear into the background, creativity and execution move forward. That is where real scale begins.