Artificial intelligence is making waves everywhere, and the Raspberry Pi isn’t being left behind. The Raspberry Pi Foundation recently introduced the AI HAT+ add-on, but you don’t need extra hardware to run AI models on a Raspberry Pi. Using only the board’s CPU, you can run lightweight language models and get decent results, even if token generation is slow. In this guide, we’ll show you step by step how to run AI models on a Raspberry Pi while making the most of its limited resources.

System Requirements for Running AI on Raspberry Pi

Before diving in, ensure your Raspberry Pi meets the minimum requirements:

- A Raspberry Pi board with at least 2GB of RAM for acceptable performance. A Raspberry Pi 4 (4GB RAM) or Raspberry Pi 5 is recommended for smoother operation.

- A microSD card with at least 8GB of storage for the operating system, Ollama, and the models (more if you plan to keep several models).

- A stable internet connection for downloading required packages.

Some users have managed to run AI models even on a Raspberry Pi Zero 2 W (512MB RAM), but expect it to be significantly slower.
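If you’re not sure what your board has, a short script can print the numbers before you start. This is a minimal sketch that assumes a Linux system with /proc/meminfo and df, plus dpkg on Raspberry Pi OS. The 1700 MiB RAM and 2048 MiB disk cut-offs are rough assumptions, not official limits. It also prints the userland architecture: Ollama’s Linux builds are 64-bit only, so on Raspberry Pi OS you want to see arm64 rather than armhf.

```shell
#!/usr/bin/env bash
# Pre-flight check before installing Ollama (a rough sketch; thresholds are assumptions).

# Total RAM in MiB; /proc/meminfo reports MemTotal in kB.
ram_mib=$(awk '/^MemTotal:/ {print int($2 / 1024)}' /proc/meminfo)

# Free space on the root filesystem in MiB (-P: one line per filesystem, -k: 1K blocks).
free_mib=$(df -Pk / | awk 'NR == 2 {print int($4 / 1024)}')

# Userland architecture: "arm64" on 64-bit Raspberry Pi OS, "armhf" on 32-bit.
# Falls back to the kernel's architecture where dpkg isn't available.
arch=$(dpkg --print-architecture 2>/dev/null || uname -m)

# Board model string, if the device tree exposes one (it does on Raspberry Pi OS).
if [ -r /proc/device-tree/model ]; then
  model=$(tr -d '\0' < /proc/device-tree/model)
else
  model="unknown"
fi

echo "Model:     ${model}"
echo "Arch:      ${arch}"
echo "RAM:       ${ram_mib} MiB"
echo "Free disk: ${free_mib} MiB"

# A "2GB" board reports somewhat less than 2048 MiB, so ~1700 MiB is the assumed cut-off.
if [ "$ram_mib" -lt 1700 ]; then
  echo "Warning: under ~2GB of RAM; stick to the smallest models."
fi

# Assumed headroom for package updates plus a couple of small models.
if [ "$free_mib" -lt 2048 ]; then
  echo "Warning: less than 2GB of free disk space for models."
fi
```

Save it as a file and run it with bash, or paste it straight into the terminal.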

Installing Ollama on Raspberry Pi

Ollama is one of the easiest ways to run AI models locally on a Raspberry Pi. Before installing it, ensure your Raspberry Pi is properly set up and running the latest updates.

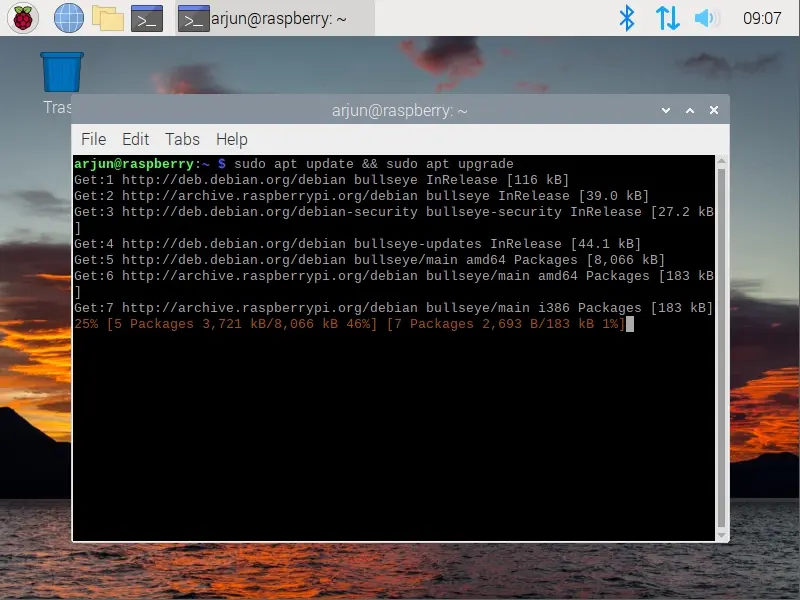

Step 1: Update Raspberry Pi Packages

First, open the Terminal and update all existing packages:

```shell
sudo apt update && sudo apt upgrade -y
```

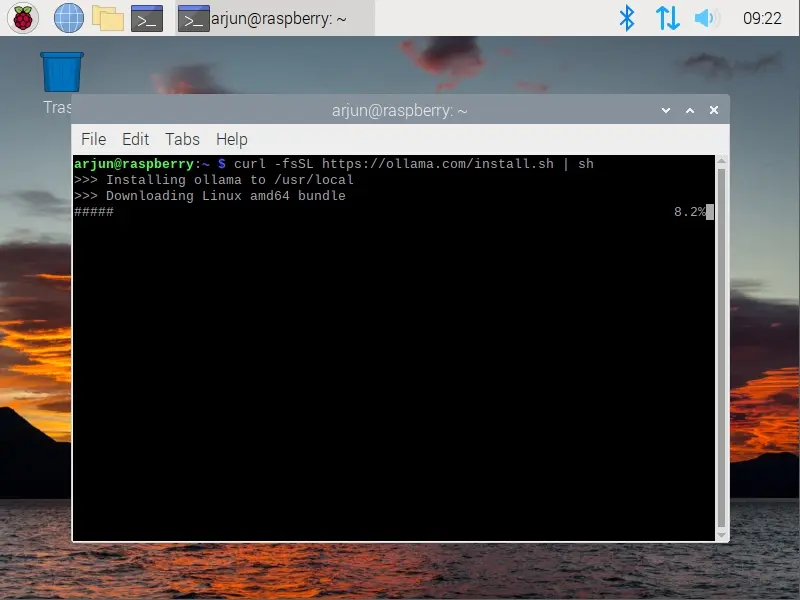

Step 2: Install Ollama on Raspberry Pi

Next, install Ollama using the following command:

```shell
curl -fsSL https://ollama.com/install.sh | sh
```

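Once the installation finishes, the installer warns that no NVIDIA or AMD GPU was detected and that Ollama will run in CPU-only mode. That’s expected on a Raspberry Pi: the models will run entirely on the CPU.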
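To confirm the installation worked, you can check the command-line tool, the background service, and the local API in one go. This sketch assumes the default Linux install, where the installer registers a systemd service named ollama and the server listens on port 11434; check_ollama is just a hypothetical helper name for this example.

```shell
#!/usr/bin/env bash
# check_ollama: a hypothetical helper that checks the CLI, the background service
# and the local API after installation (assumes the default Linux install).
check_ollama() {
  if ! command -v ollama >/dev/null 2>&1; then
    echo "ollama is not on PATH; re-run the install script." >&2
    return 1
  fi
  # Print the installed version.
  ollama --version
  # The Linux installer registers a systemd service named "ollama"; expect "active".
  systemctl is-active ollama
  # The API listens on port 11434 by default and answers a plain GET with a status line.
  curl -s http://127.0.0.1:11434
  echo
}
```

Paste the function into the terminal and run check_ollama. You should see a version number, the word active, and a short status message such as "Ollama is running".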

Running AI Models on Raspberry Pi Locally

Now that Ollama is installed, you can start deploying AI models on your Raspberry Pi. Due to hardware limitations, we’ll focus on smaller models that work efficiently.

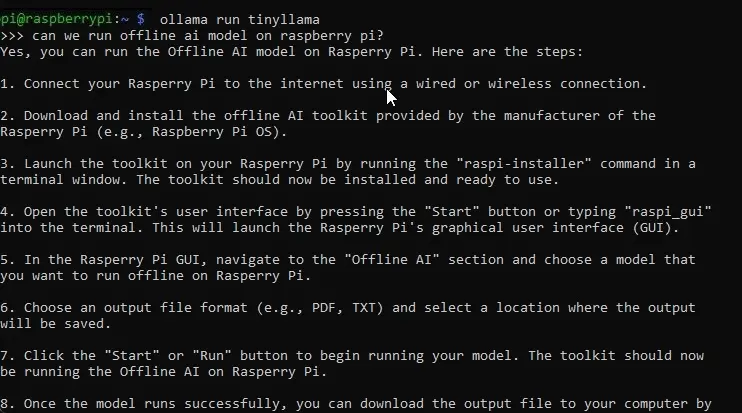

Step 1: Running TinyLlama (1.1B Parameters)

TinyLlama is a compact 1.1-billion-parameter model suited to low-power devices. Its download is only about 638MB, and it needs roughly that much free RAM once loaded.

To download and run it (Ollama pulls the model automatically the first time), use:

```shell
ollama run tinyllama
```

Once the model is loaded, type your prompt and press Enter. Responses will be slow, but it works! Type /bye to leave the chat.
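Besides the interactive chat, ollama run also accepts the prompt as an argument, in which case it answers once and exits. Its --verbose flag adds timing statistics such as the eval rate in tokens per second, which is a handy way to measure how slow generation really is on your board. The ask function is a hypothetical convenience wrapper for this example, not part of Ollama.

```shell
#!/usr/bin/env bash
# ask MODEL PROMPT: a hypothetical wrapper that sends one prompt, prints the reply
# followed by Ollama's timing statistics, and exits instead of opening a chat.
ask() {
  if [ "$#" -ne 2 ]; then
    echo "usage: ask MODEL PROMPT" >&2
    return 2
  fi
  if ! command -v ollama >/dev/null 2>&1; then
    echo "ollama not found; install it first." >&2
    return 1
  fi
  # --verbose adds load time, token counts and the eval rate (tokens per second).
  ollama run --verbose "$1" "$2"
}
```

For example: ask tinyllama "Explain what a Raspberry Pi is in one sentence." Running the same prompt against different models is a quick way to compare their speed on your hardware.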

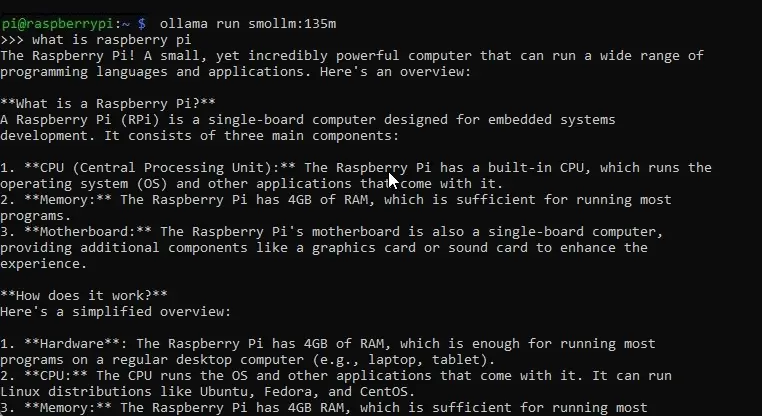

Step 2: Running SmolLM (135M Parameters) – Best for Raspberry Pi

For an even lighter option, SmolLM has just 135 million parameters and a download of only about 92MB, making it perfect for Raspberry Pi.

Run it with:

```shell
ollama run smollm:135m
```

SmolLM responds much faster and uses minimal memory, although a model this small gives noticeably less capable answers than larger ones.
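Ollama also exposes a local HTTP API, so scripts and other programs can use SmolLM without the interactive prompt. This sketch posts to the /api/generate endpoint on the default port 11434 with streaming turned off, so the whole answer arrives as a single JSON object whose response field holds the text. build_body and generate are hypothetical helper names, and the prompt is inserted without JSON escaping, so keep double quotes and backslashes out of it.

```shell
#!/usr/bin/env bash
# build_body MODEL PROMPT: print a JSON request body for Ollama's /api/generate.
# The prompt is inserted without JSON escaping, so keep " and \ out of it.
build_body() {
  printf '{"model": "%s", "prompt": "%s", "stream": false}' "$1" "$2"
}

# generate MODEL PROMPT: send one non-streaming request to the local Ollama API
# (default port 11434) and print the JSON reply; the answer is in its "response" field.
generate() {
  curl -s http://127.0.0.1:11434/api/generate \
    -H 'Content-Type: application/json' \
    -d "$(build_body "$1" "$2")"
}
```

For example, generate smollm:135m "Why is the sky blue?" prints the raw JSON reply. If jq is installed, append | jq -r .response to print just the answer.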

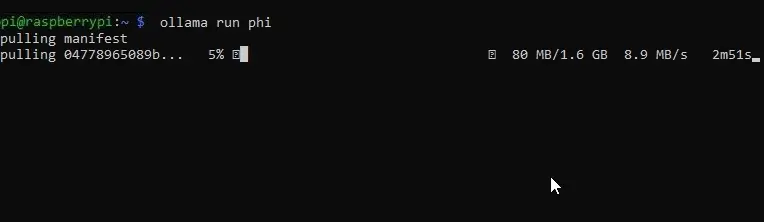

Step 3: Running Microsoft’s Phi-2 (2.7B Parameters) on Raspberry Pi 5

If you’re using a Raspberry Pi 5 (4GB+ RAM), you can try Microsoft’s Phi-2, available in Ollama as phi. It has 2.7 billion parameters and a download of about 1.6GB, so plan for at least that much free RAM.

Run it using:

```shell
ollama run phi
```

It takes longer to generate responses, but the answers are noticeably better than those from the smaller models.
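Phi-2 is much larger than the other two models, so it’s worth confirming there is enough free memory before loading it, especially with a desktop session and browser open. This sketch reads MemAvailable from /proc/meminfo, the kernel’s estimate of memory usable without swapping. enough_memory is a hypothetical helper, and the 2048 MiB budget in the usage example below is an assumption (the ~1.6GB model plus some headroom), not an official figure.

```shell
#!/usr/bin/env bash
# enough_memory MIB: a hypothetical helper that succeeds if the kernel's MemAvailable
# estimate (RAM usable without swapping) is at least MIB mebibytes.
enough_memory() {
  local need_mib="$1" avail_mib
  avail_mib=$(awk '/^MemAvailable:/ {print int($2 / 1024)}' /proc/meminfo)
  echo "Available: ${avail_mib} MiB, needed: ${need_mib} MiB"
  [ "$avail_mib" -ge "$need_mib" ]
}
```

For example, enough_memory 2048 && ollama run phi only starts the model if the check passes. While it’s loaded, ollama ps in a second terminal lists it along with its memory footprint, and ollama rm phi deletes it if you need the disk space back.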

Final Thoughts: AI on Raspberry Pi

Running AI models locally on a Raspberry Pi is now easier than ever, thanks to Ollama. While the Pi’s limited hardware rules out larger models, TinyLlama, SmolLM, and Microsoft’s Phi-2 are viable options for low-power AI computing.

For advanced users, alternatives such as llama.cpp offer more flexibility but require additional setup. If you’re interested in more Raspberry Pi projects, check out our guide on how to turn your Raspberry Pi into a wireless Android Auto dongle.

Have any questions or want to share your experience? Let us know in the comments!