The DeepSeek R1 model has made a significant impact on the AI industry, overtaking ChatGPT to take the top position on the US App Store. DeepSeek R1 is free to use on its official website, but privacy concerns about data being stored in China have led many users to look for local deployment options. You can run DeepSeek R1 locally on a Windows, macOS, or Linux computer using LM Studio, Ollama, or Open WebUI, and on Android and iPhone devices using PocketPal AI. This step-by-step guide walks you through each method.

System Requirements for Running DeepSeek R1 Locally

Before you begin, check that your device meets these requirements:

- Windows, macOS, or Linux: At least 8GB of RAM is recommended. With 8GB of memory, you can run the distilled DeepSeek R1 1.5B model at approximately 13 tokens per second. Larger models (7B, 14B, 32B, and 70B) need more RAM and benefit from a fast CPU and a high-end GPU.

- Android & iPhone: At least 6GB of RAM is required for smooth operation. High-end devices with Snapdragon 8-series or Apple’s A-series chips will perform better.
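
As a rough way to relate model size to memory, the weights of a quantized model take about (parameters × bits per weight ÷ 8) bytes. The sketch below applies that rule of thumb. The function names, the 4-bit figure, and the 1.5GB allowance for context and runtime overhead are illustrative assumptions rather than published values, so treat the results as estimates only.

```python
def estimate_weights_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_weight / 8


def fits_in_ram(params_billion: float, bits_per_weight: float,
                ram_gb: float, overhead_gb: float = 1.5) -> bool:
    """True if the weights plus an assumed overhead fit in ram_gb.

    overhead_gb is a guess covering context and runtime buffers.
    """
    needed = estimate_weights_gb(params_billion, bits_per_weight) + overhead_gb
    return needed <= ram_gb


def seconds_to_generate(num_tokens: int, tokens_per_second: float) -> float:
    """Time needed to produce num_tokens at a steady generation speed."""
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    # Model sizes named in this guide, assuming 4-bit quantization.
    for size in (1.5, 7, 14, 32, 70):
        gb = estimate_weights_gb(size, 4)
        print(f"{size}B: ~{gb:.1f} GB of weights, fits in 8 GB: {fits_in_ram(size, 4, 8)}")
    # A 500-token answer at the ~13 tokens per second quoted above.
    print(f"{seconds_to_generate(500, 13):.0f} seconds for 500 tokens")
```

Real memory use also depends on the context length and the runtime, so leave some margin beyond what the estimate suggests.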

How to Run DeepSeek R1 Locally on PC Using LM Studio

LM Studio is a user-friendly tool that simplifies running AI models locally. Here’s how to use it:

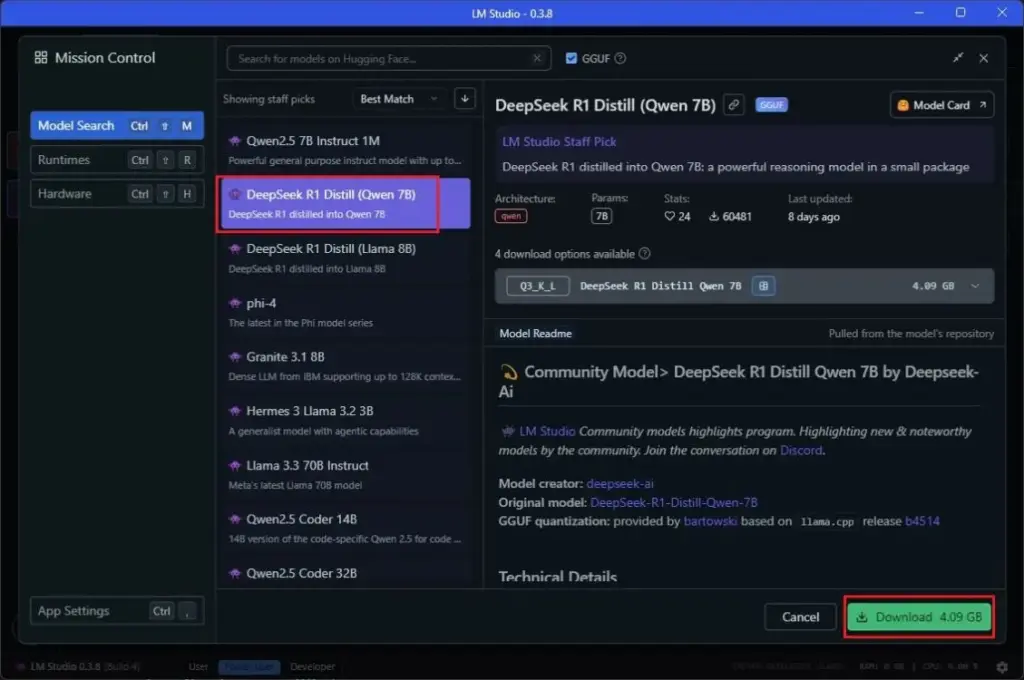

- Download and install LM Studio (version 0.3.8 or later) on your Windows, macOS, or Linux computer.

- Launch LM Studio and navigate to the search window in the left panel.

- Under Model Search, look for “DeepSeek R1 Distill (Qwen 7B)” (available on Hugging Face).

- Download the model (requires at least 5GB of storage and 8GB of RAM).

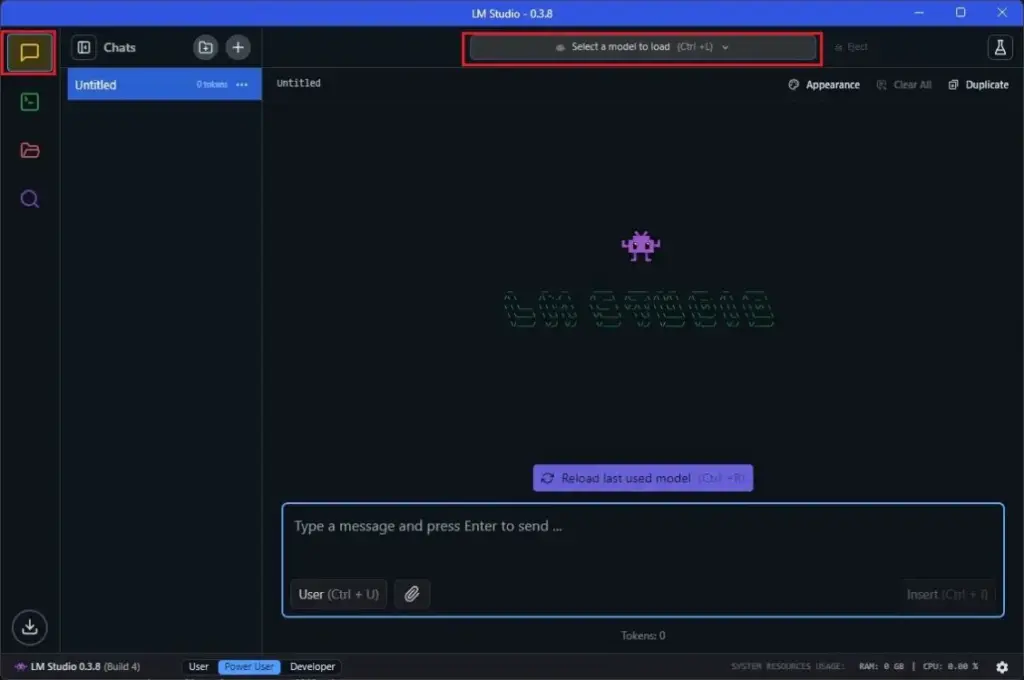

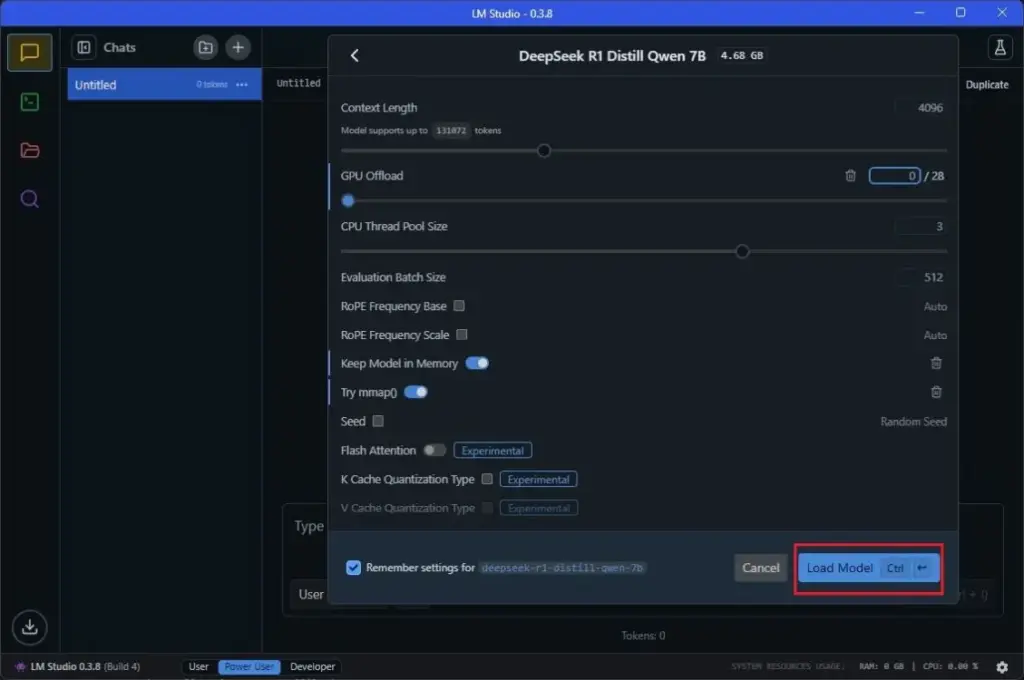

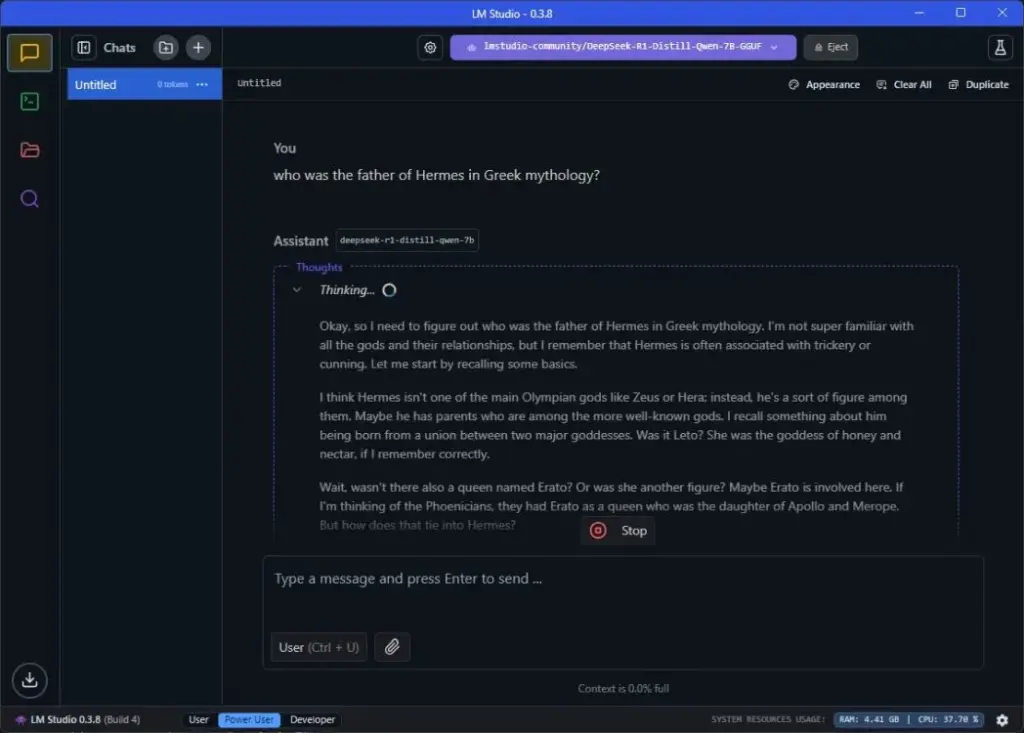

- Switch to the Chat window and select the downloaded model.

- Click “Load Model”. If loading fails, reduce GPU offload to 0 so the model runs on the CPU, and try again.

- You can now chat with DeepSeek R1 locally on your computer.

How to Run DeepSeek R1 Locally on PC Using Ollama

Ollama provides a command-line interface to run AI models efficiently.

- Install Ollama (free) on Windows, macOS, or Linux.

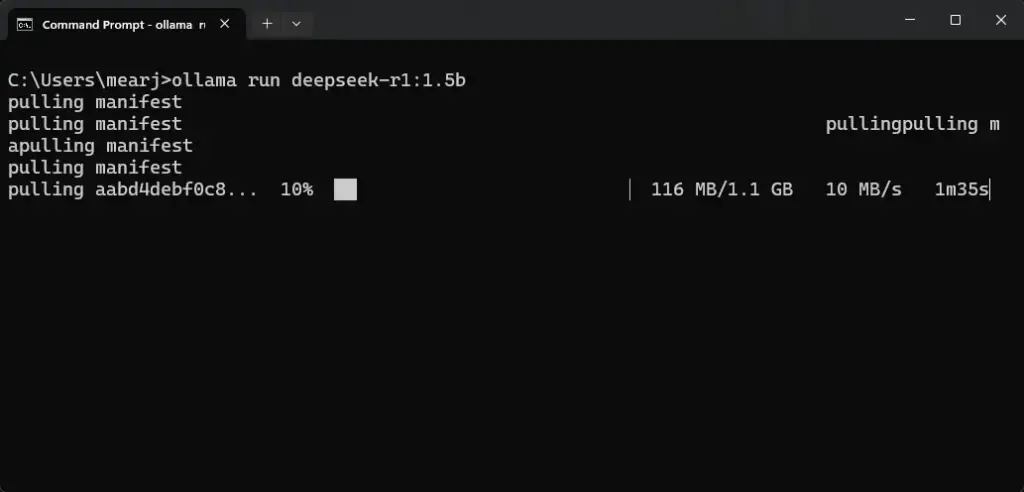

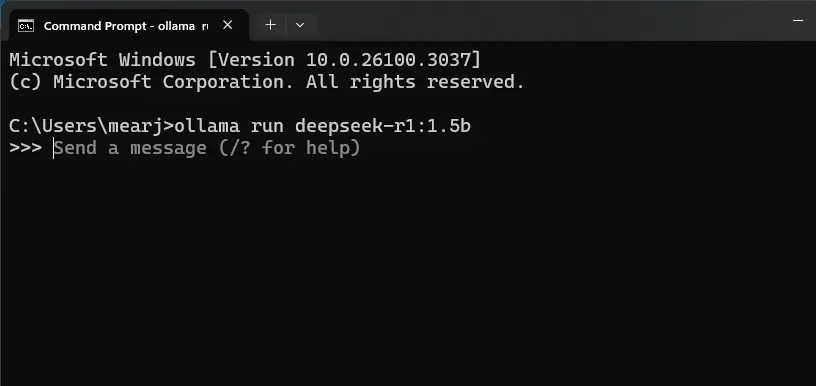

- Open Terminal (or Command Prompt) and run the following command. It downloads the DeepSeek R1 1.5B model on first use and then starts a chat session:

ollama run deepseek-r1:1.5b

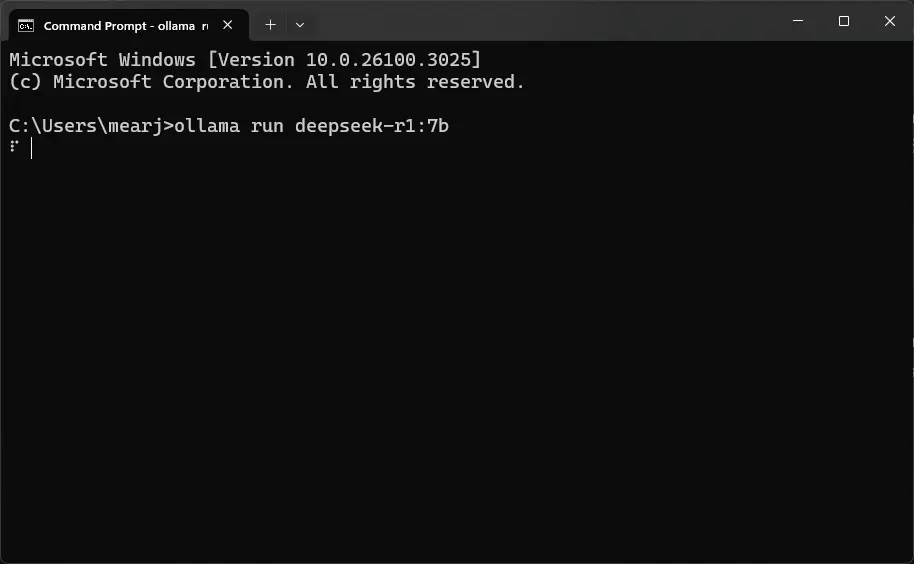

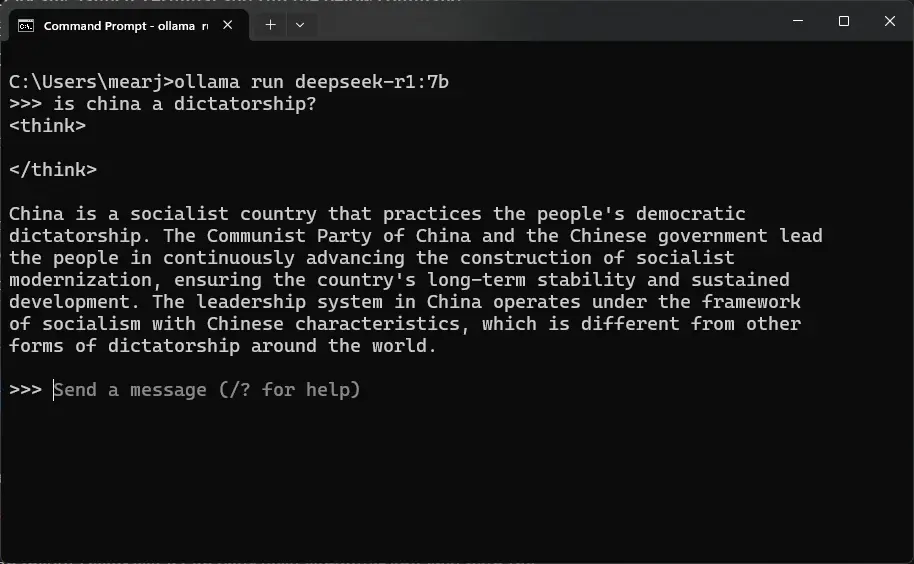

- If you have a high-performance PC, try the 7B model, which is a 4.7GB download and needs at least that much free memory:

ollama run deepseek-r1:7b

- Start chatting with DeepSeek R1 locally.

- To exit the session, press Ctrl + D or type /bye.

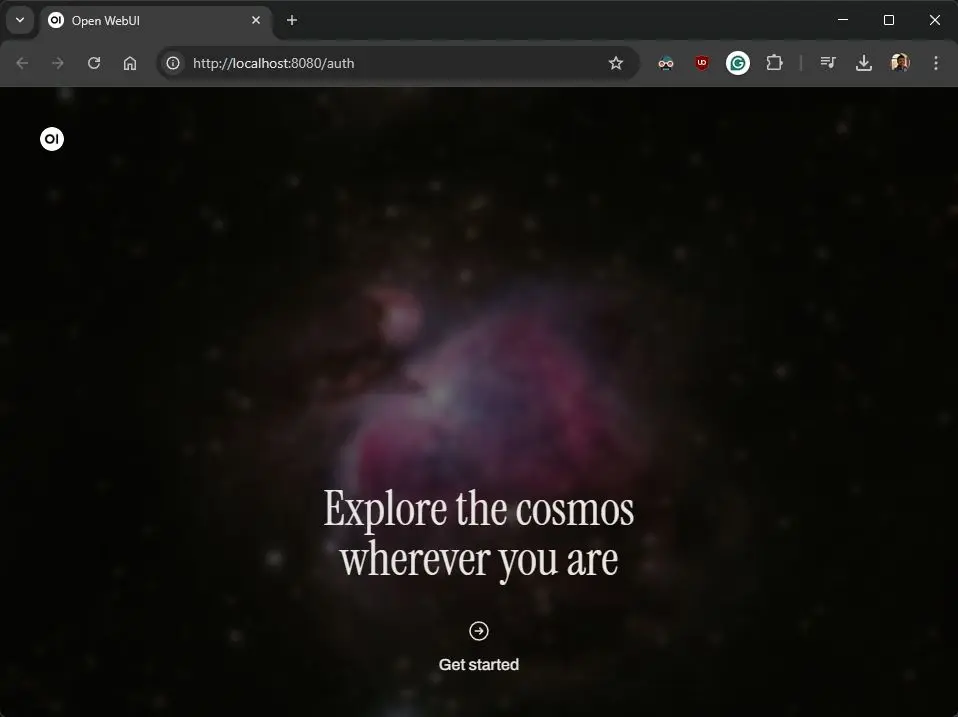

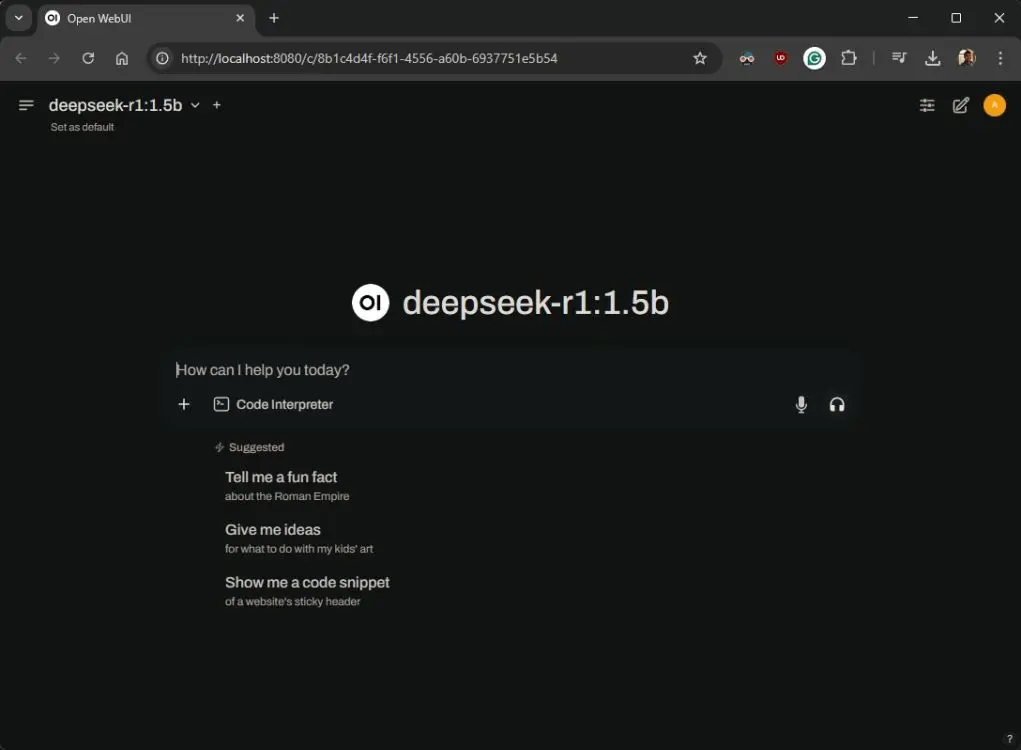
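
Ollama also serves a local HTTP API (by default at http://localhost:11434), so the model started above can be queried from a script as well as from the terminal. The sketch below is a minimal example using only the Python standard library. The helper names `build_generate_request`, `split_thinking`, and `ask` are made up for illustration, and the code assumes Ollama is running with `deepseek-r1:1.5b` already downloaded. R1 models typically wrap their reasoning in `<think>…</think>` tags, which the helper separates from the final answer; if your Ollama version returns the reasoning in a separate field instead, the helper simply passes the text through.

```python
import json
import re
import urllib.request

# Ollama's default local endpoint for one-shot text generation.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str,
                           model: str = "deepseek-r1:1.5b") -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def split_thinking(text: str) -> tuple[str, str]:
    """Split a reply into (reasoning, answer) using the <think>...</think> block."""
    match = re.search(r"<think>(.*?)</think>", text, flags=re.DOTALL)
    if match is None:
        # No reasoning block found: treat the whole reply as the answer.
        return "", text.strip()
    reasoning = match.group(1).strip()
    answer = (text[:match.start()] + text[match.end():]).strip()
    return reasoning, answer


def ask(prompt: str, model: str = "deepseek-r1:1.5b") -> str:
    """Send the prompt to the local Ollama server and return only the final answer."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=300) as resp:
        body = json.load(resp)
    _, answer = split_thinking(body["response"])
    return answer
```

With Ollama running, calling `ask("Why is the sky blue?")` should return the model's answer without the reasoning block. Nothing is sent over the network when the module is merely imported.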

How to Run DeepSeek R1 Locally Using Open WebUI

For a ChatGPT-like interface, you can install Open WebUI, which integrates with Ollama.

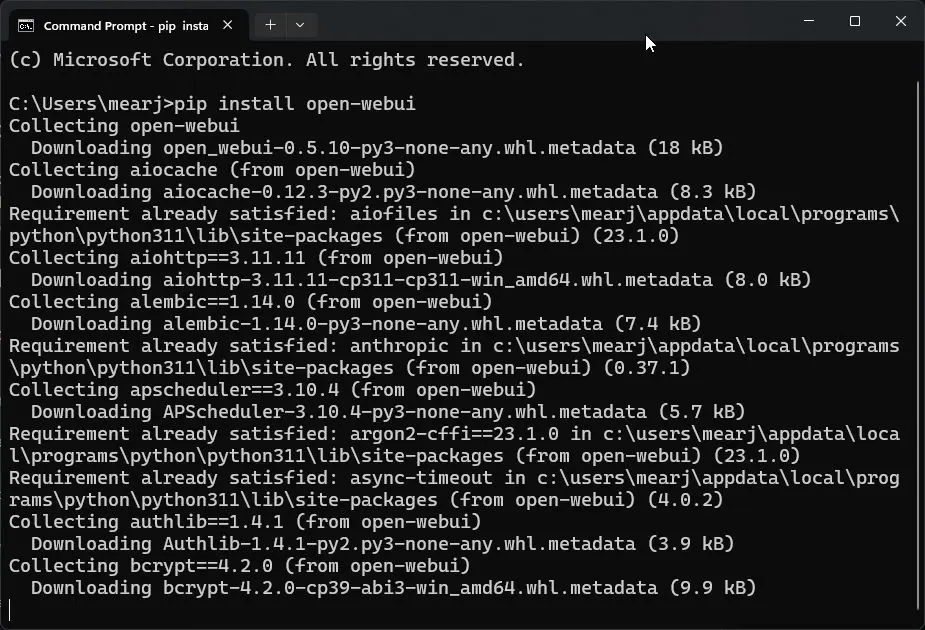

- Install Python and pip on your computer.

- Open Terminal (or Command Prompt) and install Open WebUI:

pip install open-webui

- Start the DeepSeek R1 model via Ollama:

ollama run deepseek-r1:1.5b

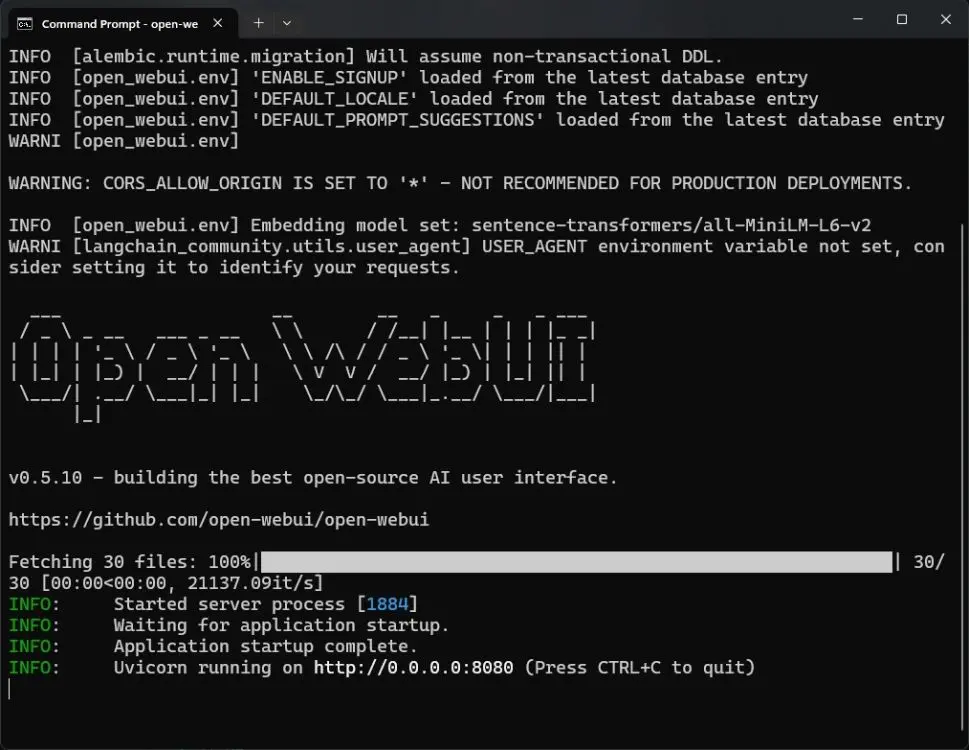

- Leave Ollama running, open a second terminal window, and start Open WebUI:

open-webui serve

- Open http://localhost:8080 in your browser.

- Click “Get started” and enter your name.

- Open WebUI automatically detects the models installed in Ollama, so DeepSeek R1 should already be selected as the default model.

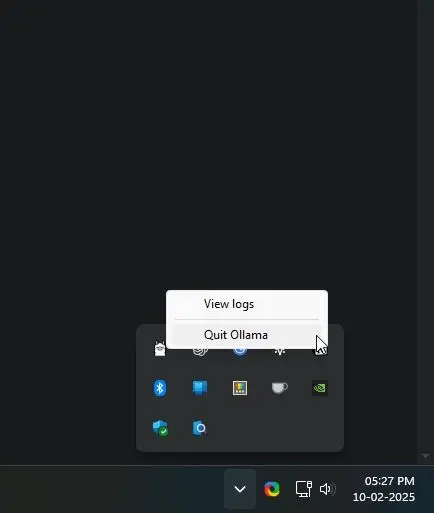

- To stop, press Ctrl + C in the terminal running Open WebUI, then right-click Ollama in the system tray and quit the application.

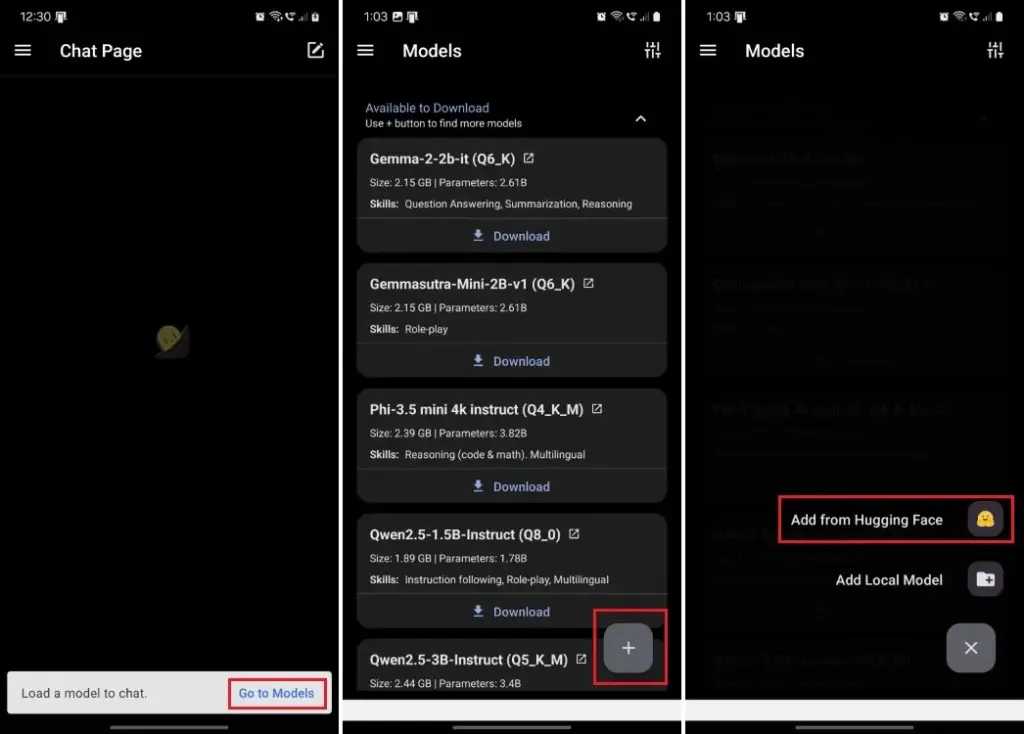
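
If DeepSeek R1 does not show up in Open WebUI, it can help to check what Ollama itself reports. The following sketch queries Ollama's `/api/tags` endpoint, which lists the locally installed models. The function names are hypothetical, and the code assumes Ollama is listening on its default port, 11434.

```python
import json
import urllib.request
from typing import Optional

# Ollama's default local endpoint for listing installed models.
TAGS_URL = "http://localhost:11434/api/tags"


def model_names(tags_response: dict) -> list[str]:
    """Extract model names from a parsed /api/tags response."""
    return [entry["name"] for entry in tags_response.get("models", [])]


def has_model(tags_response: dict, prefix: str = "deepseek-r1") -> bool:
    """True if any installed model name starts with the given prefix."""
    return any(name.startswith(prefix) for name in model_names(tags_response))


def fetch_tags(url: str = TAGS_URL, timeout: float = 5.0) -> Optional[dict]:
    """Return the parsed response, or None if Ollama is not reachable."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return json.load(resp)
    except OSError:  # urllib.error.URLError is a subclass of OSError
        return None
```

A `None` result from `fetch_tags()` suggests Ollama is not running. A response for which `has_model` returns `False` suggests the model has not been downloaded yet.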

How to Run DeepSeek R1 Locally on Android & iPhone

To run DeepSeek R1 locally on mobile devices, PocketPal AI is a convenient option.

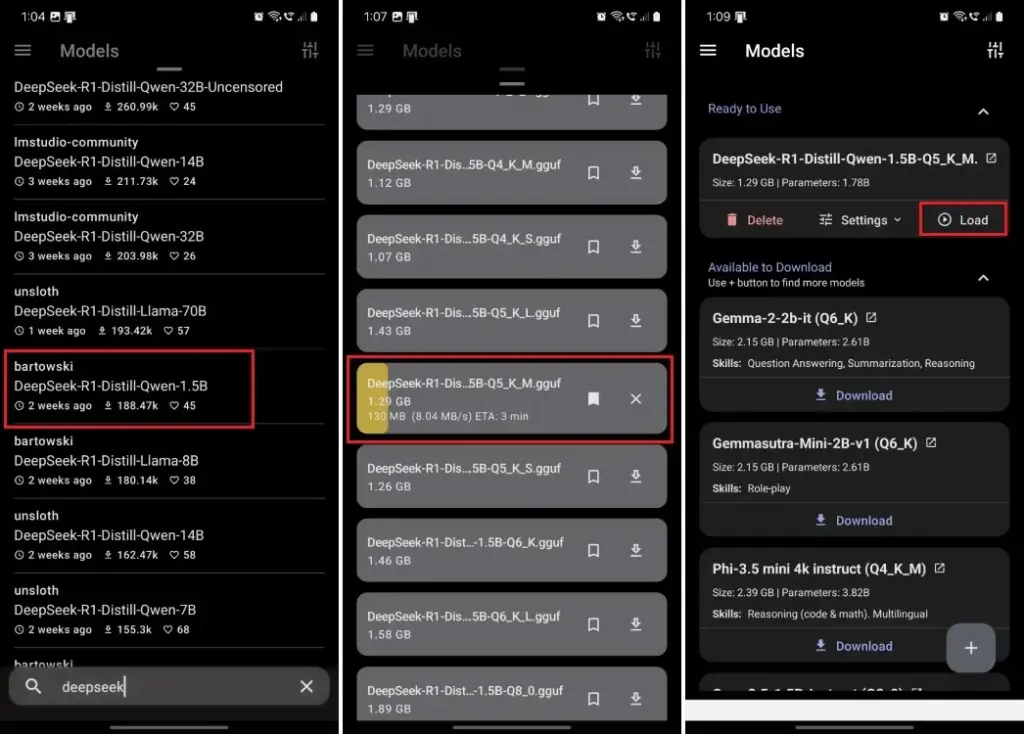

- Install PocketPal AI from the Play Store (Android) or App Store (iPhone).

- Open the app and tap “Go to Models”.

- Tap the “+” button at the bottom right and select “Add from Hugging Face”.

- Search for “DeepSeek-R1-Distill-Qwen-1.5B” by bartowski.

- Download a quantized version that fits your device's available RAM (for example, Q5_K_M requires about 1.3GB).

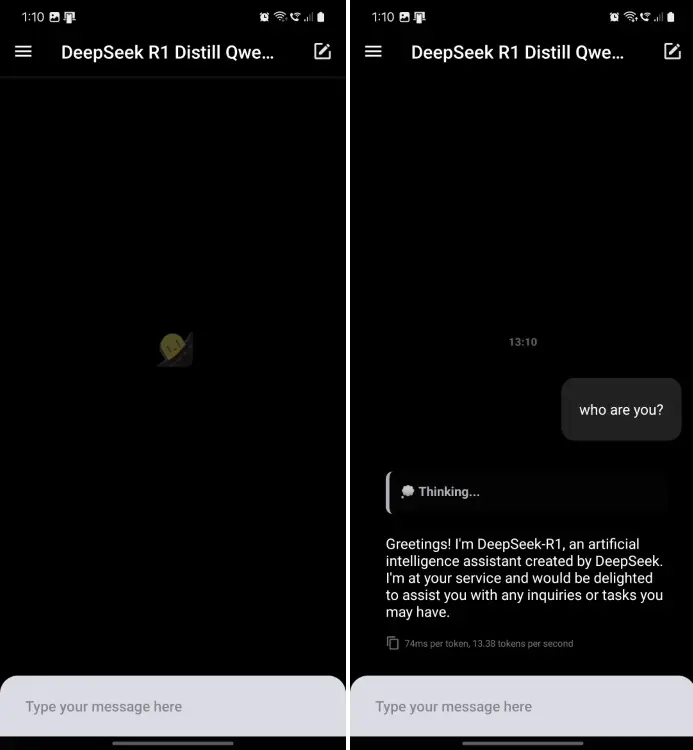

- Go back and tap “Load” to start chatting with DeepSeek R1 locally.

- You can now chat with the DeepSeek R1 model locally on your Android phone or iPhone.
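
Choosing a quantization comes down to comparing file sizes with the memory the phone can spare. A minimal sketch of that comparison follows. `pick_quant` and the 1.5× headroom factor are illustrative assumptions, and the only size taken from this guide is the roughly 1.3GB figure for Q5_K_M; sizes for other quantizations should be read from the model's file list on Hugging Face.

```python
from typing import Optional


def pick_quant(free_ram_gb: float, sizes_gb: dict[str, float],
               headroom: float = 1.5) -> Optional[str]:
    """Return the largest quantization whose size, times headroom, fits in free RAM.

    headroom is an assumed safety factor for context and app overhead.
    Returns None when nothing fits.
    """
    fitting = {name: size for name, size in sizes_gb.items()
               if size * headroom <= free_ram_gb}
    if not fitting:
        return None
    # Larger files generally mean less aggressive quantization.
    return max(fitting, key=fitting.get)


if __name__ == "__main__":
    # Only the Q5_K_M size comes from this guide; add others from Hugging Face.
    sizes = {"Q5_K_M": 1.3}
    print(pick_quant(3.0, sizes))
```

Free RAM on a phone is usually well below the advertised total, so a conservative `free_ram_gb` is the safer input.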

Final Thoughts

These methods let you run DeepSeek R1 locally on Windows, macOS, Linux, Android, or iPhone. Once the model is downloaded, it works without an internet connection and your prompts stay on your device, which improves privacy. The 1.5B and 7B models may produce hallucinations or inaccuracies; if you have powerful hardware, the 32B model offers better coding and reasoning capabilities.

Now you can enjoy AI-powered conversations without relying on online servers! 🚀