Quick Summary

- Unified Interface: New API aggregates diverse LLM protocols (OpenAI, Claude, Gemini) into a single standard OpenAI-compatible format.

- Enterprise Control: It provides a self-hosted management console for API keys, user quotas, billing, and channel routing.

- Cost Optimization: Features include cache-aware billing, weighted random routing for load balancing, and support for cheaper model alternatives.

- Open Source: The project is fully open source and designed for self-hosting via Docker, ensuring data privacy and infrastructure control.

In 2026, the primary bottleneck in AI development has shifted from model selection to infrastructure management. The question is no longer just “which model is best,” but rather “how do we manage all of them efficiently?”

Many production AI stacks now integrate multiple providers: OpenAI for reasoning, Anthropic for coding, Google for long context windows, and various specialized endpoints for reranking or audio. Each provider has its own SDK, authentication scheme, rate limits, and usage dashboard. This fragmentation results in significant technical debt:

- Multiple API keys scattered across development teams.

- Redundant integration logic rewritten for every new provider.

- Fragile codebases that break when upstream APIs update.

- A lack of centralized observability regarding costs and user access.

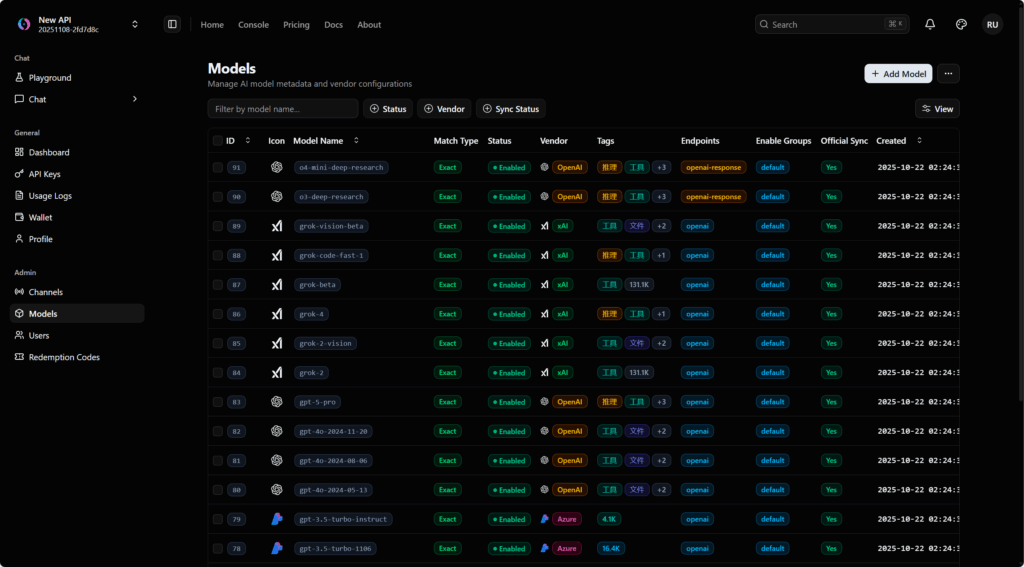

New API addresses this fragmentation as an LLM gateway and asset management system. It aggregates models from major providers behind a single, unified API format and adds a web console for managing keys, quotas, and access channels.

This review analyzes the technical architecture of New API, its core features, and deployment strategies for enterprise environments.

What Is New API?

New API is an open-source AI model hub and API gateway that standardizes various Large Language Model (LLM) protocols into a single interface. It serves as a middleware layer between your applications and upstream providers like OpenAI, Anthropic, and Google.

Designed for both individual developers and enterprise teams, it functions to:

- Translate Protocols: Convert distinct API formats (e.g., Claude Messages, Gemini Chat) into a standard OpenAI-style interface.

- Aggregate Providers: Connect to OpenAI, Claude, Gemini, DeepSeek, Midjourney-Proxy, Suno, and more.

- Manage Assets: Provide a dashboard for managing channels, users, quotas, and billing.

- Self-Host: Run completely within your own infrastructure via Docker.

The project is available on GitHub and is a significant extension of the One API project, featuring a modernized UI and support for newer capabilities like OpenAI Realtime.

Source Code & Documentation:

- GitHub: https://github.com/QuantumNous/new-api

- Official Site: https://www.newapi.ai

The API Fragmentation Problem

API Fragmentation refers to the complexity introduced when integrating multiple services that perform similar functions but use different communication standards. New API resolves several specific pain points caused by this fragmentation:

- Inconsistent Request Formats: OpenAI uses Chat Completions; Claude uses Messages; Gemini uses its own structure. Supporting all three typically requires maintaining three separate integration layers.

- Credential Sprawl: Managing specific keys for every project and environment increases security risks. Revoking a key across multiple repositories is difficult and error-prone.

- Lack of Centralized Observability: Usage data is siloed within each provider’s dashboard. There is no native global view of how much a specific team or product feature is costing the organization.

- Vendor Lock-in: Hard-coding logic for a specific provider makes migration expensive. If a team builds exclusively for OpenAI, switching to Claude requires a significant code refactor.

New API positions itself as a Central AI Control Plane. Applications integrate with one consistent API, while the gateway handles the complex multi-cloud plumbing in the background.

Key Features of New API

1. Unified Protocol and Translation

New API functions primarily as a protocol translator, allowing developers to standardize on a single SDK.

It natively supports and translates:

- OpenAI Chat Completions & Responses

- OpenAI Realtime (including Azure channels)

- Claude Messages

- Google Gemini Chat

- Rerank APIs (Cohere & Jina)

Protocol Translation is the capability to accept a request in one format and execute it on a different provider’s backend. For example:

- OpenAI → Claude: You send an OpenAI-style request; New API converts it to the Claude Messages format, calls Anthropic, and converts the response back.

- Claude → OpenAI: You send a Claude Messages-style request (for example from Claude Code or an Anthropic SDK), and New API forwards it to third-party, OpenAI-style models.

- OpenAI → Gemini: Standardize Gemini interactions using the OpenAI schema.

This allows engineering teams to A/B test models from different vendors by simply changing a configuration string, rather than rewriting application code.
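To make the OpenAI → Claude direction concrete, here is a minimal sketch of the kind of request mapping a translating gateway has to perform. It is not New API's actual implementation; the function name `openai_to_claude`, the default of 1024 output tokens, and the placeholder model name are assumptions for illustration, and only plain-text messages are handled.

```python
from typing import Any


def openai_to_claude(request: dict[str, Any], default_max_tokens: int = 1024) -> dict[str, Any]:
    """Map an OpenAI Chat Completions body to a Claude Messages body.

    Illustrative only: handles plain-text messages, not tools or images.
    """
    system_parts: list[str] = []
    messages: list[dict[str, str]] = []
    for message in request["messages"]:
        if message["role"] == "system":
            # Claude takes the system prompt as a top-level field,
            # not as an entry in the messages list.
            system_parts.append(message["content"])
        else:
            messages.append({"role": message["role"], "content": message["content"]})

    claude_request: dict[str, Any] = {
        "model": request["model"],
        "messages": messages,
        # max_tokens is required by the Claude Messages API.
        "max_tokens": request.get("max_tokens", default_max_tokens),
    }
    if system_parts:
        claude_request["system"] = "\n".join(system_parts)
    if "temperature" in request:
        claude_request["temperature"] = request["temperature"]
    return claude_request


example = {
    "model": "claude-model-name",  # placeholder, not a real model identifier
    "messages": [
        {"role": "system", "content": "You are concise."},
        {"role": "user", "content": "Summarize HTTP/2 in one line."},
    ],
}
converted = openai_to_claude(example)
print(converted)
```

The response has to be mapped back in the opposite direction, which is why a gateway can keep the client on a single schema.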

2. Upstream Model Aggregation

The platform acts as a comprehensive AI Model Hub, supporting a vast array of upstream channels.

| Category | Supported Providers |
|---|---|
| Text & Reasoning | OpenAI (GPT-4, o-series), Azure OpenAI, Anthropic Claude, Google Gemini, DeepSeek, Dify |
| Image Generation | Midjourney-Proxy (Plus) |
| Audio & Music | Suno API |
| Reranking | Cohere, Jina |
| Custom | Arbitrary HTTP endpoints |

This aggregation capability is ideal for consolidating all generative AI needs—text, image, and audio—under a single endpoint. It allows for dynamic traffic routing based on cost, latency, or provider reliability.

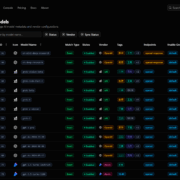

3. Enterprise LLM Management

New API includes a sophisticated management layer designed for organizational control over AI assets.

Key administrative features include:

- Modern Dashboard: A UI focused on data visualization and real-time usage statistics.

- Token & User Grouping: Administrators can restrict specific models to certain user groups and apply rate limiting per user.

- Advanced Billing:

  - Support for online recharge (Stripe, etc.).

  - Per-request charging models.

  - Cache-Aware Billing: Configure multipliers to bill cache hits at a lower rate than fresh calls.

- Traffic Routing: Use weighted random algorithms to distribute loads across multiple channels, optimizing for cost and stability.

- Reliability: Configurable retry logic on channel failure and built-in support for Redis caching.
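The cache-aware billing idea from the list above comes down to simple arithmetic. The sketch below is an assumption-laden illustration rather than New API's billing code: the `Usage` fields, the `input_cost` function, the price of 2.0 per million tokens, and the 0.25 multiplier are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Usage:
    fresh_input_tokens: int   # prompt tokens not served from cache
    cached_input_tokens: int  # prompt tokens served from the provider's cache


def input_cost(usage: Usage, price_per_million: float, cache_multiplier: float) -> float:
    """Bill cached tokens at a fraction (cache_multiplier) of the normal input price."""
    billable = usage.fresh_input_tokens + usage.cached_input_tokens * cache_multiplier
    return billable * price_per_million / 1_000_000


usage = Usage(fresh_input_tokens=1_000, cached_input_tokens=4_000)
discounted = input_cost(usage, price_per_million=2.0, cache_multiplier=0.25)
undiscounted = input_cost(usage, price_per_million=2.0, cache_multiplier=1.0)
print(f"with cache discount: {discounted:.4f}, without: {undiscounted:.4f}")
```

A multiplier of 1.0 disables the discount, so the same formula covers both cached and uncached billing.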

PRO TIP:

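Use the Channel Weighting feature to implement a cost-saving strategy. For example, weight a lower-cost channel (such as DeepSeek or a locally hosted Llama 3) at 80 and a premium channel (such as GPT-4) at 20, so that roughly 80% of requests go to the cheaper option. Because the selection is weighted random, the split is by proportion rather than by task complexity; to reserve premium models for complex reasoning, have the application request them by model name. The routing itself is transparent to the end user.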
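Weighted random selection itself is a small algorithm. The following sketch shows the general technique, not New API's internal router; the channel names and the `pick_channel` helper are hypothetical.

```python
import random
from collections import Counter

# Hypothetical channels with relative weights (80:20).
CHANNEL_WEIGHTS = {"low-cost-channel": 80, "premium-channel": 20}


def pick_channel(weights: dict[str, int], rng: random.Random) -> str:
    """Pick one channel with probability proportional to its weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


rng = random.Random(0)  # seeded so the demonstration is reproducible
counts = Counter(pick_channel(CHANNEL_WEIGHTS, rng) for _ in range(10_000))
share = counts["low-cost-channel"] / 10_000
print(f"low-cost share: {share:.1%}")
```

Each pick is independent of the request content, so over many requests the observed share converges on the configured proportion.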

4. Self-Hosted Infrastructure

New API is designed for Self-Hosting, making it suitable for privacy-sensitive workloads where data control is paramount.

- Database Support: SQLite (default), MySQL (≥ 5.7.8), or PostgreSQL (≥ 9.6).

- Caching: Integration with Redis for session management and response caching.

- Deployment: Fully containerized with Docker support.

This architecture ensures that API keys and usage data remain within your controlled environment, rather than being exposed to third-party SaaS management tools.

Technical Benefits for Development Teams

Single Code Path Integration

Integrating New API allows developers to maintain a single code path regardless of the underlying model.

- Without New API: The backend requires imports for the OpenAI SDK, Anthropic SDK, and Google SDK, each with unique error handling and streaming implementation.

- With New API: The backend integrates once against the Unified API entry point. Model switching is handled via configuration, reducing the testing surface area and regression risks.
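As a rough sketch of what a single code path looks like, the snippet below builds an OpenAI-style chat request against a gateway using only the Python standard library. The base URL assumes a local deployment on port 3000, the token and model names are placeholders, and the request is constructed but not sent so the example runs without a live gateway.

```python
import json
import urllib.request

GATEWAY_BASE_URL = "http://localhost:3000"  # assumed local deployment
GATEWAY_TOKEN = "sk-your-gateway-token"     # placeholder token


def build_chat_request(model: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request aimed at the gateway."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=f"{GATEWAY_BASE_URL}/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {GATEWAY_TOKEN}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Switching providers changes only the model string, not the calling code.
for model in ("model-a", "model-b"):  # placeholder model names
    request = build_chat_request(model, "Hello")
    print(request.get_method(), request.full_url, json.loads(request.data)["model"])
    # To actually send it: urllib.request.urlopen(request) against a running gateway.
```

In practice the model name would come from configuration, which is what keeps model switching out of the application code.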

Centralized Governance

For platform engineering teams, New API simplifies governance.

- Key Management: Add or rotate upstream API keys in one location without redeploying applications.

- Separation of Concerns: The infrastructure team manages provider relationships and quotas, while product teams simply consume the unified endpoint.

- Compliance: Easier auditing of logs and error traces from a central source.

Quick Start Guide: Deploying New API

This guide outlines the deployment of New API using Docker, the recommended method for most environments. Please consult the official documentation for the most up-to-date instructions.

Option 1: Docker Compose (Recommended)

- Clone the repository:

git clone https://github.com/QuantumNous/new-api.git && cd new-api

- Configure docker-compose.yml:

Set your preferred database. For production, switch from SQLite to MySQL or PostgreSQL. Configure environment variables such as SESSION_SECRET and SQL_DSN.

- Start the stack:

docker compose up -d

- Access the Dashboard:

Navigate to the port defined in your configuration (default 3000). Log in with default credentials (usually found in the logs or docs) and begin configuring your channels.

Option 2: Direct Docker Run

For a standalone deployment without Compose, use the following commands.

Using SQLite (Local Data):

docker run --name new-api -d --restart always \
  -p 3000:3000 \
  -e TZ=Asia/Shanghai \
  -v /home/ubuntu/data/new-api:/data \
  calciumion/new-api:latest

Using MySQL:

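docker run --name new-api -d --restart always \
  -p 3000:3000 \
  -e SQL_DSN="root:123456@tcp(localhost:3306)/oneapi" \
  -e TZ=Asia/Shanghai \
  -v /home/ubuntu/data/new-api:/data \
  calciumion/new-api:latest

Note: Adjust paths, timezones, and credentials to match your specific environment. Inside the container, localhost refers to the container itself, so replace it with an address at which the container can reach your MySQL server, and use a strong password instead of the 123456 placeholder.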

PRO TIP:

If you are running multiple instances of New API for high availability, ensure they share the same Redis instance and CRYPTO_SECRET environment variable. This ensures that user sessions and cached responses are consistent across all nodes.

Practical Use Cases

Cost Optimization via Routing

By combining channel weighting and cache-aware billing, organizations can reduce API spend. Channel weights split traffic between providers by proportion, so a larger share of requests can be sent to cheaper channels. Because weighted random selection does not inspect the request, routing by task complexity (cheaper providers for high-volume, low-complexity queries and premium models for complex tasks) is done by having the application request a different model name. Additionally, billing cache hits at a reduced rate encourages efficient system usage.
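The effect of a weighted split on average price can be estimated with a short calculation. The prices and weights below are hypothetical, chosen only to show the arithmetic, and `blended_price` is an illustrative helper rather than anything provided by New API.

```python
def blended_price(prices: dict[str, float], weights: dict[str, float]) -> float:
    """Expected price per unit when traffic is split in proportion to the weights."""
    total_weight = sum(weights.values())
    return sum(prices[name] * weights[name] for name in weights) / total_weight


# Hypothetical prices per million tokens and an 80:20 traffic split.
PRICES = {"low-cost": 0.5, "premium": 10.0}
WEIGHTS = {"low-cost": 80, "premium": 20}

blended = blended_price(PRICES, WEIGHTS)
saving = 1 - blended / PRICES["premium"]
print(f"blended price: {blended:.2f}, saving vs. all-premium: {saving:.0%}")
```

With these assumed numbers the blended price is (0.5 × 80 + 10.0 × 20) / 100 = 2.4 per million tokens, compared with 10.0 for all-premium traffic.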

Internal AI Platform

Large organizations can use New API as the backbone of an internal AI platform. IT teams can issue internal tokens with specific quotas and model access rules. For instance, the marketing team might have access to GPT-4 and Midjourney, while the internal tooling team is restricted to efficient coding models.
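The access rules described here can be pictured as a check that runs before a request is forwarded. This is a simplified sketch under assumptions: the `InternalToken` type, the abstract quota units, and the model names are hypothetical and do not reflect New API's data model.

```python
from dataclasses import dataclass


@dataclass
class InternalToken:
    team: str
    allowed_models: frozenset[str]
    remaining_quota: int  # abstract quota units


def authorize(token: InternalToken, model: str, estimated_cost: int) -> tuple[bool, str]:
    """Allow a request only if the model is permitted and quota remains."""
    if model not in token.allowed_models:
        return False, f"model '{model}' is not enabled for team '{token.team}'"
    if estimated_cost > token.remaining_quota:
        return False, "quota exceeded"
    return True, "ok"


marketing = InternalToken("marketing", frozenset({"gpt-4", "midjourney"}), remaining_quota=500)
tooling = InternalToken("tooling", frozenset({"coding-model"}), remaining_quota=50)

print(authorize(marketing, "gpt-4", 100))      # permitted model, enough quota
print(authorize(tooling, "gpt-4", 10))         # model not in the team's allow-list
print(authorize(tooling, "coding-model", 80))  # permitted model, but over quota
```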

SaaS Monetization

For SaaS products integrating AI features, New API acts as a billing middleware. It supports online recharge and per-request pricing, allowing you to align your customer billing directly with your underlying infrastructure costs.

Frequently Asked Questions

1. What are the differences between New API and One API?

New API is an enhanced fork of One API. It preserves the core architecture while adding a more modern user interface (UI), support for additional providers (such as Midjourney-Proxy and Suno), and more flexible billing and top-up features tailored for enterprise environments.

2. Can I use New API for commercial purposes?

Yes, subject to the terms of the project's open-source license, which is published in the GitHub repository. You can self-host it and use it as a gateway for your SaaS product. The system supports payment integrations (Stripe, etc.) and user quota management, making it well-suited for running an AI service business.

3. Does New API support streaming (real-time responses)?

Yes. New API supports streaming mode for providers such as OpenAI and Claude. It forwards streamed data from the provider to the client as it arrives, so the end user still sees the response appear incrementally.
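On the client side, an OpenAI-style stream arrives as server-sent events, one JSON chunk per data line, ending with a [DONE] marker. The sketch below parses such lines from a hard-coded sample instead of a live connection; `iter_stream_text` is an illustrative helper, and the sample chunks are trimmed to the fields the parser reads.

```python
import json
from typing import Iterable, Iterator


def iter_stream_text(lines: Iterable[str]) -> Iterator[str]:
    """Yield text fragments from OpenAI-style SSE lines until [DONE]."""
    for line in lines:
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and other SSE fields
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):
            text = choice.get("delta", {}).get("content")
            if text:
                yield text


# Sample stream, reduced to the fields used above.
sample_stream = [
    'data: {"choices": [{"delta": {"role": "assistant"}}]}',
    "",
    'data: {"choices": [{"delta": {"content": "Hel"}}]}',
    "",
    'data: {"choices": [{"delta": {"content": "lo"}}]}',
    "",
    "data: [DONE]",
]
print("".join(iter_stream_text(sample_stream)))
```

Against a running gateway, the same function could be fed the decoded lines of the HTTP response body.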