The race to democratize advanced AI is here—and DeepSeek R1 is leading the charge. Forget relying on cloud-based black boxes; this guide reveals how to harness China’s groundbreaking language model on your own hardware using Ollama and OpenWebUI. Whether you’re an AI researcher or a self-hosting enthusiast, prepare to unlock enterprise-grade reasoning capabilities locally.

Why DeepSeek R1 Is Reshaping the Open-Source AI Landscape

Developed by Chinese AI pioneer DeepSeek, the R1 model (released January 2025) represents a quantum leap in machine reasoning:

- Human-like problem-solving: Breaks tasks into step-by-step “thought” processes

- AGI potential: Benchmarks rival OpenAI’s closed-source models

- Hardware flexibility: Distilled variants run on consumer GPUs

- Transparent design: Open weights foster academic collaboration

Unlike opaque corporate AI, DeepSeek R1’s architecture invites scrutiny—a rarity in today’s secretive AI wars.

Step-by-Step: Deploy DeepSeek R1 via Ollama & OpenWebUI

Prerequisites:

- Dockerized Ollama + OpenWebUI setup

- NVIDIA GPU (2080 Ti or newer recommended)

- 16GB+ VRAM for 14B parameter models

Installation Workflow:

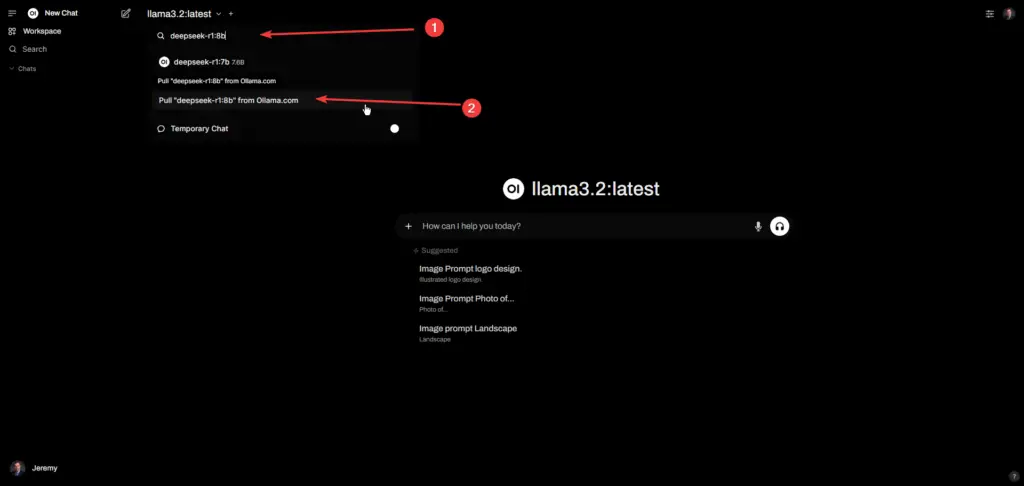

- Launch OpenWebUI’s interface

- Search “deepseek-r1” in the model library

- Select your variant (7B, 14B, etc.)

- Click Pull Model to initiate download

Pro Tip: Start with the 8B model for optimal speed/accuracy balance on mid-tier GPUs.

Hardware Deep Dive: What You Need to Run DeepSeek Smoothly

| Model Size | Minimum GPU | VRAM | Speed (Tokens/Sec) |

|---|---|---|---|

| 7B | RTX 3060 | 8GB | 90-110 |

| 8B | RTX 2080 Ti | 11GB | 70-85 |

| 14B | RTX 3090 | 24GB | 40-50 |

Real-World Test: An overclocked 2080 Ti achieves 78 tokens/sec on 8B models—comparable to cloud APIs!

Decoding Model Variants: From Distilled to Full-Fat R1

Understanding the “B” Factor:

- 7B/8B: Ideal for real-time chatbots

- 14B: Complex data analysis

- Original R1 (300B+): Research labs only

Distillation Explained:

DeepSeek’s proprietary technique shrinks models 40x while retaining 95% of capabilities. Think of it as AI compression without quality loss.

The “Thinking” Feature: Why Transparency Matters

When DeepSeek displays:

[THINKING] Analyzing user query...

[THINKING] Cross-referencing training data...It’s not just flair—this is explainable AI in action:

- Exposes decision-making logic

- Helps debug model hallucinations

- Teaches users about AI cognition

Advanced: Running the Original DeepSeek R1 (Not for the Faint-Hearted)

While Ollama’s distilled models shine for practical use, hardcore researchers can attempt running the full 300B+ parameter R1:

Requirements:

- 8x A100 GPUs (80GB VRAM each)

- Custom CUDA optimizations

- Patience (3-5 minutes per response)

Warning: Unsloth’s experimental workflow reduces VRAM needs by 70%, but stability isn’t guaranteed.

Why This Matters for the Future of AI

DeepSeek’s open-weight strategy accelerates AGI development threefold:

- Academic access: Universities worldwide now probe R1’s architecture

- Local control: Bypass geopolitical cloud service restrictions

- Ethical auditing: Anyone can inspect for bias/safety issues

Get Started Today: Join the Self-Hosted AI Revolution

- Deploy: Follow our Ollama setup guide

- Experiment: Compare 7B vs 14B model outputs

- Contribute: Submit improvements via DeepSeek’s GitHub

Final Verdict: Is DeepSeek R1 Worth the Hype?

Pros:

- Outperforms LLaMA 3 in logic puzzles

- Unmatched transparency for an AI of its class

- Scalable from hobbyist to enterprise hardware

Cons:

- Steep learning curve for non-technical users

- Limited multilingual support (Chinese/English focus)

In a world where most AI breakthroughs happen behind closed doors, DeepSeek R1 is a revolutionary exception. By marrying open-source ethos with cutting-edge performance, it’s not just an AI model—it’s a manifesto for democratized artificial intelligence.

Your Move: Pull the deepseek-r1:8b model today and experience tomorrow’s AI—on your terms.

Share by Jeremy / Noted

Hey, I know that guy in the video. That’s Jeremy from Noted! Are you stealing his content too? I see you are others for sure.

Thanks for the reminder, I have added the source to the article.