The landscape of AI coding agents is evolving at an incredible pace, with new tools and platforms emerging constantly. From open-source options to paid subscriptions, and everything in between, it can feel like a dizzying race just to keep up. As a developer, I’ve often found myself grappling with a common challenge: how do you integrate these diverse AI tools, each with its own CLI and unique strengths, into a cohesive and efficient workflow, especially when budget is a concern?

The fragmented nature of these tools often leads to a disconnected user experience. Switching between different CLIs not only disrupts focus but also makes it difficult to leverage the specific advantages of each AI model. Many free resources, like the generous daily request limits offered by Gemini and other models, often go underutilized because they’re not integrated into a developer’s daily routine.

This challenge led me to explore a unified approach that allows me to harness the power of multiple AI models—including those with free tiers—within a single, consistent framework. The goal? To streamline my development process, optimize costs, and unlock the specialized capabilities of each AI. After considerable experimentation, I’ve discovered a strategy that’s not only practical but surprisingly powerful, particularly for personal projects where data privacy isn’t a primary concern.

This approach transforms individual AI models and their CLIs into a specialized “deck of cards.” Depending on the task at hand—be it code analysis, implementation, reasoning, or context gathering—I can strategically deploy the right AI agent for the job, at the right time, and for the right purpose. This method is incredibly cost-effective, leveraging free resources and reducing reliance on expensive, all-encompassing models for every task.

The Power of Orchestration: Consolidating AI Coding Agents

At the heart of this strategy lies a powerful orchestration tool: OpenCode. Think of OpenCode as the central hub that allows you to manage and direct multiple AI sub-agents. While similar tools exist (like Claude Code), OpenCode stands out for its open-source nature, rapid development, and remarkable flexibility in integrating various AI providers and models.

How OpenCode facilitates AI agent workflows

OpenCode operates with a system of main agents and sub-agents. The main agent defines the overall task, but it doesn’t necessarily perform all the heavy lifting itself. Instead, it delegates specific responsibilities to specialized sub-agents. These sub-agents, in turn, can call upon external tools and commands, including other CLIs.

The genius here is that most AI coding agents, particularly those with a CLI, can be invoked through standard shell commands. This means an OpenCode agent can effectively “call” another AI CLI tool, assigning it a specific task and receiving its output.

Building specialized sub-agents

The core idea is to create distinct sub-agents within OpenCode, each configured to interact with a specific AI CLI or API, thereby leveraging its unique strengths or free resource tiers. For instance, one sub-agent might be tasked with deep code analysis using a free, high-context model, while another handles rapid code generation with a different, faster model. This modularity allows you to:

- Optimize resource usage: Direct complex, context-heavy tasks to models offering large context windows (often free or lower cost) and delegate implementation to models optimized for speed.

- Reduce token consumption: By offloading initial code base understanding or extensive documentation review to a free resource, your main, potentially more expensive, OpenCode agent (e.g., running on Claude Sonnet) consumes fewer tokens.

- Leverage specialized capabilities: Some models excel at security analysis, others at reasoning, and still others at raw code output. This setup allows you to pick the best tool for each specific sub-task.
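To make the main-agent/sub-agent split concrete, here is a minimal sketch in the opencode.json format described in OpenCode’s agent documentation, where sub-agents live under an agent key with mode set to subagent. The agent names (explorer, implementer, reasoner) are hypothetical, and the top-level model ID is only an example of a paid main model. The description field does the real work: it is what the main agent reads when deciding where to delegate. Each entry could also set its own model field.

```json
{
  "$schema": "https://opencode.ai/config.json",
  "model": "anthropic/claude-sonnet-4-20250514",
  "agent": {
    "explorer": {
      "description": "Reads and summarizes large parts of the codebase. Use for architecture questions and broad code analysis.",
      "mode": "subagent",
      "tools": { "write": false, "edit": false }
    },
    "implementer": {
      "description": "Writes small, well-defined functions or snippets once the design is settled.",
      "mode": "subagent"
    },
    "reasoner": {
      "description": "Weighs design trade-offs and gives architectural advice. Does not edit files.",
      "mode": "subagent",
      "tools": { "write": false, "edit": false }
    }
  }
}
```

Read-only roles have write and edit switched off, so only the implementer can change files through OpenCode’s own tools.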

Let’s dive into the practical implementation of this two-pronged approach.

Method 1: Harnessing Free CLI Tools and Their Resources

This approach focuses on integrating standalone AI coding agent CLIs that offer free usage tiers or daily request limits. You’ll set them up as distinct sub-agents within OpenCode.

Integrating Gemini CLI for deep code exploration

My first step typically involves setting up the Gemini CLI. Gemini often provides a generous free tier, including a large context window (e.g., 1 million tokens with Gemini 2.5 Pro). This makes it an excellent candidate for tasks requiring extensive codebase analysis or understanding complex project structures.

Setup steps:

- Install Gemini CLI: You’ll need to install the gemini CLI tool. (Specific installation instructions can be found in Google’s Gemini CLI documentation.)

- Authenticate with Google: Authenticate the CLI with your Google account. This usually involves selecting your desired Gmail account, which grants you access to Gemini’s free resources.

- Create a Gemini sub-agent in OpenCode: Within your OpenCode configuration (an opencode.json file in your project root, or ~/.config/opencode/opencode.json for global settings), define a sub-agent specifically for Gemini.

```jsonc
// Example (simplified) structure within OpenCode config
{
  "agents": [
    {
      "name": "gemini_explorer",
      "description": "A sub-agent for deep code exploration and understanding, leveraging Gemini's large context window.",
      "model": "openai/gpt-4-turbo-preview", // The model that drives this sub-agent, not Gemini itself
      "tools": ["bash"],
      "actions": [
        {
          "name": "ask_gemini",
          "description": "Ask Gemini a question about the project or codebase.",
          "command": "gemini -p \"{{prompt}}\"" // Gemini CLI's non-interactive mode
        }
      ]
    }
  ]
}
```

NOTE: The model field sets the LLM that drives the sub-agent itself: it reads the delegated task, phrases the request, and runs the shell command. The main OpenCode agent’s model is configured separately. The ask_gemini command is what actually invokes the gemini CLI.

In the description for your Gemini agent, highlight its strength, such as its massive context window. This helps the main OpenCode agent understand when to delegate tasks like “Analyze the entire codebase for architectural patterns” or “Find security vulnerabilities in this module.”

When the main OpenCode agent receives a task like “Tell me about this project,” it should delegate it to the gemini_explorer sub-agent. The sub-agent runs its ask_gemini command with the appropriate prompt, and Gemini processes the request and returns its insights to OpenCode. This significantly reduces the token cost for your main OpenCode agent, which might be running on a more expensive model like Claude Sonnet.
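OpenCode’s documented agent format has no separate actions list, so in practice the same Gemini sub-agent is usually expressed as an agent with the bash tool enabled and a prompt telling it to call Gemini CLI in non-interactive mode (gemini -p). The sketch below assumes that format; the prompt wording and the choice of a cheap Haiku model (anthropic/claude-3-5-haiku-20241022) to drive the wrapper are placeholders, so substitute any inexpensive model you have access to.

```json
{
  "$schema": "https://opencode.ai/config.json",
  "agent": {
    "gemini_explorer": {
      "description": "Deep code exploration using Gemini CLI's large context window. Use for whole-codebase questions, architecture overviews, and broad security reviews.",
      "mode": "subagent",
      "model": "anthropic/claude-3-5-haiku-20241022",
      "prompt": "You are a thin wrapper around Gemini CLI. For every request, run gemini -p \"<the request, plus any file paths it needs>\" from the project root via bash, then return Gemini's answer with a short summary. Do not analyze the code yourself.",
      "tools": {
        "bash": true,
        "write": false,
        "edit": false
      }
    }
  }
}
```

Turning off write and edit keeps this wrapper from changing files through OpenCode’s own tools; bash stays on because that is how it reaches Gemini CLI.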

Integrating other specialized CLIs

Beyond Gemini, I’ve extended this approach to other AI coding agents with unique strengths:

- Qwen Code (for rapid implementation): Many providers offer free daily requests for code-focused models. Qwen Code, for example, may offer 2,000 free requests per day, making it ideal for quick code snippets or implementing well-defined functions. I configure a quencoder_implementer sub-agent in OpenCode, ready to be called for implementation tasks.

- Codex (via ChatGPT Plus for reasoning): If you have a ChatGPT Plus subscription, which gives access to OpenAI’s current flagship models such as GPT-5, you can use it with tools like the Codex CLI. I set up a codex_reasoner sub-agent for tasks demanding strong reasoning, decision-making, or complex problem-solving. This agent excels at understanding nuanced prompts and providing insightful architectural advice.

- Cody (for context collection): Tools like Cody can build a detailed picture of your codebase, including recent commits and project structure. I use a cody_context_collector sub-agent to gather all relevant context before passing it to other agents for specific tasks. This ensures other agents receive highly relevant information without having to spend tokens “learning” the project.
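The implementer and reasoner roles can follow the same wrapper pattern. This sketch assumes OpenCode’s agent format, that Qwen Code’s CLI (qwen) accepts -p for a one-shot prompt, and that the Codex CLI’s codex exec runs a prompt non-interactively; both CLIs are assumed to be installed and already signed in. The driving model and the prompt wording are placeholders.

```json
{
  "$schema": "https://opencode.ai/config.json",
  "agent": {
    "quencoder_implementer": {
      "description": "Implements small, well-specified functions or snippets quickly. Use once the design is settled.",
      "mode": "subagent",
      "model": "anthropic/claude-3-5-haiku-20241022",
      "prompt": "Forward each implementation request to Qwen Code by running qwen -p \"<the task, including language, signature, and constraints>\" via bash. Return the code it produces without rewriting it.",
      "tools": { "bash": true, "write": false, "edit": false }
    },
    "codex_reasoner": {
      "description": "Strong reasoning for design decisions, trade-offs, and tricky bugs. Gives advice; does not edit files.",
      "mode": "subagent",
      "model": "anthropic/claude-3-5-haiku-20241022",
      "prompt": "Forward each question to the Codex CLI by running codex exec \"<the question plus relevant context>\" via bash. Return its answer, then list the key recommendations.",
      "tools": { "bash": true, "write": false, "edit": false }
    }
  }
}
```

Both wrappers return text rather than editing files, leaving the main agent to decide what to apply.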

By strategically assigning roles to each sub-agent based on their underlying AI model’s strengths and free availability, you create a highly efficient, cost-optimized, and powerful development environment.

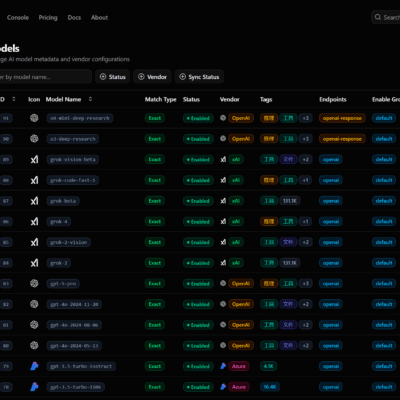

Method 2: Leveraging Free Tiers and APIs from LLM Providers

The second powerful aspect of OpenCode is its native ability to integrate with numerous AI providers directly via API keys. Many providers offer free tiers, trial periods, or promotional credits, which OpenCode can readily tap into.

Direct API integration with OpenCode

OpenCode is built on the AI SDK (from Vercel), which allows for seamless integration with a wide array of providers. This means you don’t necessarily need a separate CLI for every service; you can configure OpenCode to use an API key directly.

Example: Cerebras for fast generation

Cerebras, for instance, offers fast inference and often has free usage tiers or promotions. You might be limited to a certain number of requests per day or a specific context window, but these resources are still invaluable when orchestrated correctly.

Setup steps:

- Obtain API Key: Get an API key from the provider (e.g., Cerebras).

- Authenticate OpenCode: Add the provider’s API key to OpenCode. This is usually done with the opencode auth login command, which asks you to pick the provider and paste the key.

- Define a sub-agent for Cerebras: Create a cerebras_generator sub-agent in your OpenCode configuration. You can specify the model name that Cerebras provides for free.

```jsonc
// Example (simplified) structure within OpenCode config
{
  "agents": [
    {
      "name": "cerebras_generator",
      "description": "A fast code generation agent leveraging Cerebras's free models for quick implementations.",
      "model": "cerebras/freemodelname", // Specify the actual free model provided by Cerebras
      "tools": [], // No external CLI needed if using direct API
      "actions": [
        {
          "name": "generate_code",
          "description": "Generate code based on the provided prompt using Cerebras.",
          "prompt_template": "Generate {{language}} code for: {{task}}"
        }
      ]
    }
  ]
}
```

While OpenCode might allow you to directly select authenticated models from providers, defining a specific sub-agent (like cerebras_generator) gives you more granular control. You can explicitly design its purpose and the types of tasks it should handle, ensuring better delegation from your main OpenCode agent.
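In OpenCode’s documented agent format, a direct-API sub-agent needs no bash tool: its model field points straight at the provider you authenticated. In this sketch, cerebras/YOUR_FREE_MODEL_ID is a placeholder for whichever model Cerebras currently offers on your tier (assuming the provider ID is cerebras), and the prompt text is illustrative.

```json
{
  "$schema": "https://opencode.ai/config.json",
  "agent": {
    "cerebras_generator": {
      "description": "Fast code generation for quick, well-defined implementations. Use for boilerplate and small functions.",
      "mode": "subagent",
      "model": "cerebras/YOUR_FREE_MODEL_ID",
      "prompt": "Generate concise, working code for the requested task in the requested language. Return only the code and a one-line explanation.",
      "tools": { "bash": false }
    }
  }
}
```

Because generation runs inside OpenCode’s own session, there is no extra CLI to install; the provider’s free quota is what limits usage.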

Exploring other free providers

The flexibility of OpenCode extends to many other providers:

- Groq: Known for incredibly fast inference, Groq may offer free access to certain models, ideal for real-time interaction or quick code generation.

- Google/Amazon Credits: If you have cloud credits, you can often use them to pay for AI models on Google Cloud (Vertex AI) or AWS (Bedrock). OpenCode can be configured to use these resources directly, giving your agents a powerful backend at no out-of-pocket cost while the credits last.

The key takeaway here is that OpenCode’s extensive integration capabilities—often supporting dozens of providers via their SDKs—make it a powerful hub for consolidating various free and promotional AI resources. This is where OpenCode truly shines, offering far greater flexibility and customization than many single-provider solutions.

Why OpenCode is a Game Changer for AI Agent Orchestration

My journey into this setup began out of necessity, driven by a desire to leverage the rapidly evolving AI landscape without breaking the bank. What I discovered was more than just a cost-saving trick; it was a fundamental shift in how I interact with AI as a developer.

Maximizing model strengths

Every AI model has its unique strengths and weaknesses. Gemini’s massive context window is superb for understanding, while a faster, smaller model might be better for quick code generation. A reasoning-focused model excels at architectural decisions. OpenCode allows you to strategically deploy these models like specialized tools in your workshop. You wouldn’t use a sledgehammer to drive a small nail, and similarly, you shouldn’t use an expensive, high-context LLM for a simple code formatting task.

Significant cost reduction

By offloading context-heavy tasks and routine code generation to free tiers and daily allowances, I drastically reduce the token consumption of my main OpenCode agent, which typically runs on a more powerful, paid model like Claude Sonnet 4. This approach translates to substantial cost savings, allowing me to maximize the value of both free and paid resources.

Enhanced workflow and productivity

Instead of juggling multiple CLIs, my entire AI interaction happens within a single, unified environment. OpenCode’s main agent intelligently delegates tasks to the most appropriate sub-agent, streamlining the entire development process. This not only makes the workflow smoother but also significantly boosts productivity, as I spend less time context-switching and more time building.

Conclusion: Maximizing Your AI Coding Agent Potential on a Budget

Setting up AI coding agents doesn’t have to be an expensive endeavor. By adopting a smart, orchestrated approach with tools like OpenCode, developers can harness a vast array of free and low-cost AI resources, turning them into powerful, specialized sub-agents. This strategy allows you to:

- Leverage diverse AI capabilities: From deep code understanding to rapid implementation and robust reasoning, choose the right AI for every task.

- Drastically cut costs: Optimize your token usage by offloading work to free tiers and daily allowances.

- Streamline your workflow: Integrate all your AI tools into a single, cohesive environment, enhancing productivity and focus.

This method isn’t just about saving money; it’s about building a more intelligent, adaptable, and efficient development workflow. I encourage you to experiment with OpenCode and integrate your favorite free CLI tools and API providers. The flexibility and power you gain by orchestrating these diverse AI agents are truly transformative.

If you’ve experimented with similar setups or discovered other clever ways to integrate AI coding agents, I’d love to hear your insights in the comments below! Let’s continue to learn and build better, smarter development practices together.