Hey there, fellow automation engineers!

If you’re running LobeHub, OpenClaw, or Claude Code alongside n8n and you’ve ever wished your AI agent could actually build and fix workflows on its own – not just read and run them – this post is for you.

I’ll walk you through the entire journey: discovering the limitation → researching alternatives → building a custom solution → deploying it successfully on a Raspberry Pi 4 running Docker.

By the end, your AI agent won’t just be an assistant. It’ll be a Senior n8n Engineer.

The Problem: The Default n8n MCP Server Is Read-Only

If you’ve been using czlonkowski/n8n-mcp – one of the most popular MCP Server implementations for n8n – you’ve probably hit the same wall I did. It only supports reading and executing workflows.

Here’s a capability comparison that makes the gap painfully clear:

| Operation | n8n-mcp (czlonkowski) | What You Actually Need |
|---|---|---|
| List workflows | ✅ | ✅ |
| View workflow details | ✅ | ✅ |
| Execute workflow | ✅ | ✅ |
| Activate / Deactivate | ✅ | ✅ |
| Create new workflow | ❌ | ✅ |
| Update workflow | ❌ | ✅ |
| Delete workflow | ❌ | ✅ |
| Test Webhook | ❌ | ✅ |
| View execution history (Debug) | ❌ | ✅ |

In other words: your AI has hands, but they’re tied behind its back. It can look at workflows and press “Run,” but it can’t create or modify anything. Every change still requires you to open a browser, navigate to the n8n Editor, and drag-and-drop nodes manually.

This becomes a serious bottleneck when you’re testing complex integration flows – like Yandex Mail callbacks or Microsoft Outlook webhooks – where you constantly need to create webhooks, tweak nodes, trigger tests, and debug errors. Doing it manually takes hours.

The Solution: What Is n8n-custom-mcp?

n8n-custom-mcp is a custom-built MCP Server that runs as a Docker container and exposes 12 tools for your AI agent (on LobeHub, OpenClaw, or Claude Code) to manage n8n comprehensively – via the Model Context Protocol (MCP).

Full Tool Reference (12 MCP Tools)

| Category | Tool | Description |
|---|---|---|
| Workflow CRUD | `list_workflows` | List all workflows |
| | `get_workflow` | View full workflow JSON (nodes, connections) |
| | `create_workflow` | Create a new workflow from JSON |
| | `update_workflow` | Update a workflow (rename, add/remove nodes…) |
| | `delete_workflow` | Delete a workflow |
| | `activate_workflow` | Activate or deactivate a workflow |
| Execution | `execute_workflow` | Run a workflow by ID |
| | `trigger_webhook` | Call a webhook (supports test mode!) |
| Monitoring | `list_executions` | View execution history, filter by status |
| | `get_execution` | Debug individual executions (input/output data, errors) |
| Discovery | `list_node_types` | List all node types installed in your n8n instance |

The bottom line: Your AI agent goes from “can only read and run” to being a self-sufficient n8n engineer – it creates workflows, tests them, reads errors, fixes bugs, and re-tests. All you do is give instructions in plain language.
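If you are curious what sits between the MCP tools and n8n, here is a hedged sketch of how tool calls could translate into requests against n8n's public REST API. The `/api/v1/workflows` and `/api/v1/executions` paths, the `activate`/`deactivate` endpoints, the `X-N8N-API-KEY` header, and the `/webhook/` vs. `/webhook-test/` URL prefixes are standard n8n. The `ToolCall` argument names and the `toN8nRequest` function are hypothetical, and I haven't verified that n8n-custom-mcp's `src/index.ts` is organized this way. `execute_workflow` and `list_node_types` are left out because I'm not aware of public API v1 endpoints for them, so the server presumably handles those differently.

```typescript
// Hypothetical mapping from MCP tool calls to n8n requests. Paths and headers follow
// n8n's public API v1; the ToolCall argument names are illustrative assumptions.
type ToolCall =
  | { tool: "list_workflows" }
  | { tool: "get_workflow"; id: string }
  | { tool: "create_workflow"; workflow: object }
  | { tool: "update_workflow"; id: string; workflow: object }
  | { tool: "delete_workflow"; id: string }
  | { tool: "activate_workflow"; id: string; active: boolean }
  | { tool: "list_executions"; status?: "success" | "error" | "waiting" }
  | { tool: "get_execution"; id: string }
  | { tool: "trigger_webhook"; webhookPath: string; testMode: boolean };

interface HttpRequest {
  method: "GET" | "POST" | "PUT" | "DELETE";
  url: string;
  headers: Record<string, string>;
  body?: string;
}

function toN8nRequest(call: ToolCall, baseUrl: string, apiKey: string): HttpRequest {
  const api = `${baseUrl}/api/v1`;
  const headers = { "X-N8N-API-KEY": apiKey, "Content-Type": "application/json" };
  switch (call.tool) {
    case "list_workflows":
      return { method: "GET", url: `${api}/workflows`, headers };
    case "get_workflow":
      return { method: "GET", url: `${api}/workflows/${call.id}`, headers };
    case "create_workflow":
      return { method: "POST", url: `${api}/workflows`, headers, body: JSON.stringify(call.workflow) };
    case "update_workflow":
      return { method: "PUT", url: `${api}/workflows/${call.id}`, headers, body: JSON.stringify(call.workflow) };
    case "delete_workflow":
      return { method: "DELETE", url: `${api}/workflows/${call.id}`, headers };
    case "activate_workflow":
      // n8n has separate endpoints for activating and deactivating.
      return { method: "POST", url: `${api}/workflows/${call.id}/${call.active ? "activate" : "deactivate"}`, headers };
    case "list_executions": {
      const query = call.status ? `?status=${call.status}` : "";
      return { method: "GET", url: `${api}/executions${query}`, headers };
    }
    case "get_execution":
      // includeData=true asks n8n to return per-node input/output data for debugging.
      return { method: "GET", url: `${api}/executions/${call.id}?includeData=true`, headers };
    case "trigger_webhook": {
      // Webhooks bypass the REST API: production URLs live under /webhook/,
      // test URLs under /webhook-test/, and no API key is sent.
      const prefix = call.testMode ? "webhook-test" : "webhook";
      return { method: "POST", url: `${baseUrl}/${prefix}/${call.webhookPath}`, headers: { "Content-Type": "application/json" } };
    }
  }
}

// Inside the compose network, the MCP container reaches n8n at http://n8n:5678.
const req = toN8nRequest({ tool: "trigger_webhook", webhookPath: "outlook-callback", testMode: true }, "http://n8n:5678", "YOUR_API_KEY");
console.log(req.method, req.url); // POST http://n8n:5678/webhook-test/outlook-callback
```

The discriminated union keeps each tool's arguments type-checked, which matters when an LLM is the one filling them in.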

Architecture Overview: How LobeHub Talks to n8n via MCP

Understanding the data flow is key to troubleshooting and extending this setup. Here’s the full architecture:

```
┌──────────────────────────────────────────────────────────┐
│ LobeHub / OpenClaw                                       │
│ (AI Agent + System Prompt)                               │
│                                                          │
│  ┌─────────────────┐    ┌───────────────────────────┐    │
│  │ n8n-skills      │    │ MCP Client                │    │
│  │ (Knowledge)     │    │ → http://host:3000/mcp    │    │
│  │ System Prompt   │    └─────────────┬─────────────┘    │
│  └─────────────────┘                  │                  │
└───────────────────────────────────────┼──────────────────┘
                                        │ HTTP (Streamable)
                      ┌─────────────────▼───────────────────┐
                      │ n8n-custom-mcp (Docker)             │
                      │                                     │
                      │ supergateway ← stdio → Node.js      │
                      │ :3000/mcp                           │
                      │                                     │
                      │ 12 Tools (CRUD + Webhook + Debug)   │
                      └─────────────────┬───────────────────┘
                                        │ REST API (internal Docker network)
                      ┌─────────────────▼───────────────────┐
                      │ n8n Instance (Docker)               │
                      │ :5678                               │
                      │                                     │
                      │  ┌────────┐  ┌────────┐  ┌────────┐ │
                      │  │ PgSQL  │  │ Redis  │  │ Worker │ │
                      │  └────────┘  └────────┘  └────────┘ │
                      └─────────────────────────────────────┘
```

Example Workflow – How the AI Agent Operates

- You message the AI on LobeHub: “Create a webhook workflow to receive emails from Outlook.”

- The AI calls `create_workflow` via MCP → n8n creates a new workflow.

- The AI calls `activate_workflow` → the workflow goes live.

- The AI calls `trigger_webhook` (test mode) → it sends a simulated request.

- The AI calls `list_executions` → it checks the result.

- If it fails → the AI calls `get_execution` → reads the error → calls `update_workflow` → fixes it automatically.

- The loop repeats until the workflow runs perfectly.

Zero manual intervention. Zero browser tabs.
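The loop above can be sketched as a small driver. In practice the model on LobeHub or OpenClaw decides each tool call itself, so this is not how the agent is implemented; it is a hypothetical TypeScript sketch in which the `Tools` interface stands in for the MCP tools and `proposeFix` stands in for the model's reasoning. What it does show is the control flow: create, activate, test, inspect, patch, and retry within a fixed budget so a stubborn bug cannot loop forever.

```typescript
// Hypothetical stand-ins for the MCP tools; names mirror the tool list, shapes are assumptions.
interface Execution {
  id: string;
  status: "success" | "error";
  error?: string;
}

interface Tools {
  createWorkflow(workflow: object): Promise<string>; // resolves to the new workflow ID
  activateWorkflow(id: string): Promise<void>;
  triggerWebhook(path: string, body: object): Promise<void>;
  latestExecution(workflowId: string): Promise<Execution>;
  updateWorkflow(id: string, workflow: object): Promise<void>;
}

// Plays the role of the LLM: given the failing workflow and its error, return a patched copy.
type FixProposer = (workflow: object, error: string) => object;

async function buildAndHeal(
  tools: Tools,
  workflow: object,
  webhookPath: string,
  testBody: object,
  proposeFix: FixProposer,
  maxAttempts = 5,
): Promise<{ ok: boolean; attempts: number }> {
  const id = await tools.createWorkflow(workflow);
  await tools.activateWorkflow(id);
  let current = workflow;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    await tools.triggerWebhook(webhookPath, testBody);
    const exec = await tools.latestExecution(id);
    if (exec.status === "success") return { ok: true, attempts: attempt };
    current = proposeFix(current, exec.error ?? "unknown error");
    await tools.updateWorkflow(id, current);
  }
  return { ok: false, attempts: maxAttempts }; // budget exhausted: hand back to a human
}

// Usage: an in-memory fake whose executions fail until the workflow carries `fixed: true`.
function makeFakeTools(): Tools {
  let stored: { fixed?: boolean } = {};
  return {
    async createWorkflow(w) {
      stored = w as { fixed?: boolean };
      return "wf-1";
    },
    async activateWorkflow() {},
    async triggerWebhook() {},
    async latestExecution(): Promise<Execution> {
      return stored.fixed
        ? { id: "e2", status: "success" }
        : { id: "e1", status: "error", error: "subject: undefined" };
    },
    async updateWorkflow(_id, w) {
      stored = w as { fixed?: boolean };
    },
  };
}

buildAndHeal(makeFakeTools(), { fixed: false }, "outlook-callback", {}, (w) => ({ ...w, fixed: true }))
  .then((result) => console.log(result)); // { ok: true, attempts: 2 }
```

The `maxAttempts` cap is the important design choice: an agent that cannot converge should stop and report rather than keep rewriting a live workflow.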

Step-by-Step Installation Guide (Docker)

Prerequisites

- Docker & Docker Compose installed

- An n8n instance (running or to be started with this compose stack)

- An n8n API Key (generate one in n8n → Settings → API → Create API Key)

Step 1: Set Up Your Directory Structure

```
n8n-stack/
├── docker-compose.yml
├── .env                  ← Contains your secrets
├── n8n-custom-mcp/       ← Cloned from GitHub
│   ├── package.json
│   ├── tsconfig.json
│   ├── Dockerfile
│   └── src/
│       └── index.ts      ← Core logic (12 tools)
├── db_data/
├── redis_data/
└── n8n_data/
```

Step 2: Clone the Repository

```
mkdir -p ~/n8n-stack && cd ~/n8n-stack
git clone https://github.com/duynghien/n8n-custom-mcp.git
```

Step 3: Create Your .env File

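```
# .env
POSTGRES_USER=n8n
POSTGRES_PASSWORD=your_pg_password_here
POSTGRES_DB=n8n
N8N_ENCRYPTION_KEY=your_encryption_key_here
N8N_HOST=your-domain.com
WEBHOOK_URL=https://your-domain.com/

# Generate your API Key at: n8n → Settings → API → Create API Key
N8N_API_KEY=your_n8n_api_key_here
```

Security Warning: Never hard-code API keys in your source code. Always use environment variables or `.env` files, never commit `.env` to version control, and protect your keys.

If this is a fresh install, n8n has to be running before you can create the API key: start the stack, generate the key in the n8n UI, add it to `.env`, then run `docker compose up -d n8n-mcp` so the MCP container is recreated with the new value.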
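`POSTGRES_PASSWORD` and `N8N_ENCRYPTION_KEY` should be long random values rather than something typed by hand. Any secure generator works (for example `openssl rand -hex 32`); below is a small TypeScript sketch using Node's built-in `node:crypto` module. The helper name `randomSecret` and the byte lengths are my own choices, not n8n requirements. Keep in mind that n8n uses the encryption key to encrypt stored credentials, so once it is set, changing it leaves existing credentials unreadable.

```typescript
import { randomBytes } from "node:crypto";

// Returns `bytes` cryptographically secure random bytes encoded as hex (2 chars per byte).
function randomSecret(bytes = 32): string {
  return randomBytes(bytes).toString("hex");
}

// Print ready-to-paste .env lines; N8N_API_KEY still has to come from the n8n UI.
const envLines = [
  `POSTGRES_PASSWORD=${randomSecret(24)}`,
  `N8N_ENCRYPTION_KEY=${randomSecret(32)}`,
];
console.log(envLines.join("\n"));
```

Run it with any TypeScript runner (for example `npx tsx gen-secrets.ts`, where the file name is arbitrary) and paste the two lines into `.env`.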

Step 4: Configure docker-compose.yml

```yaml
services:
  # 1. PostgreSQL Database
  postgres:
    image: postgres:16
    restart: always
    environment:
      - POSTGRES_USER=${POSTGRES_USER}
      - POSTGRES_PASSWORD=${POSTGRES_PASSWORD}
      - POSTGRES_DB=${POSTGRES_DB}
    volumes:
      - ./db_data:/var/lib/postgresql/data
    healthcheck:
      test: ["CMD-SHELL", "pg_isready -h localhost -U ${POSTGRES_USER} -d ${POSTGRES_DB}"]
      interval: 5s
      timeout: 5s
      retries: 10

  # 2. Redis (Queue Manager)
  redis:
    image: redis:alpine
    restart: always
    volumes:
      - ./redis_data:/data
    healthcheck:
      test: ["CMD", "redis-cli", "ping"]
      interval: 5s
      timeout: 5s
      retries: 10

  # 3. n8n Main Instance
  n8n:
    image: n8nio/n8n:latest
    restart: always
    ports:
      - "5678:5678"
    environment:
      - DB_TYPE=postgresdb
      - DB_POSTGRESDB_HOST=postgres
      - DB_POSTGRESDB_PORT=5432
      - DB_POSTGRESDB_DATABASE=${POSTGRES_DB}
      - DB_POSTGRESDB_USER=${POSTGRES_USER}
      - DB_POSTGRESDB_PASSWORD=${POSTGRES_PASSWORD}
      - N8N_ENCRYPTION_KEY=${N8N_ENCRYPTION_KEY}
      - EXECUTIONS_MODE=queue
      - QUEUE_BULL_REDIS_HOST=redis
      - QUEUE_BULL_REDIS_PORT=6379
      - WEBHOOK_URL=${WEBHOOK_URL}
      - N8N_HOST=${N8N_HOST}
      - N8N_SECURE_COOKIE=false
    depends_on:
      postgres:
        condition: service_healthy
      redis:
        condition: service_healthy
    volumes:
      - ./n8n_data:/home/node/.n8n

  # 4. n8n Worker (handles heavy executions)
  n8n-worker:
    image: n8nio/n8n:latest
    restart: always
    command: worker
    environment:
      - DB_TYPE=postgresdb
      - DB_POSTGRESDB_HOST=postgres
      - DB_POSTGRESDB_PORT=5432
      - DB_POSTGRESDB_DATABASE=${POSTGRES_DB}
      - DB_POSTGRESDB_USER=${POSTGRES_USER}
      - DB_POSTGRESDB_PASSWORD=${POSTGRES_PASSWORD}
      - N8N_ENCRYPTION_KEY=${N8N_ENCRYPTION_KEY}
      - EXECUTIONS_MODE=queue
      - QUEUE_BULL_REDIS_HOST=redis
      - QUEUE_BULL_REDIS_PORT=6379
      - N8N_HOST=${N8N_HOST}
      - N8N_SECURE_COOKIE=false
    depends_on:
      n8n:
        condition: service_started
    volumes:
      - ./n8n_data:/home/node/.n8n

  # 5. n8n-custom-mcp (The Power Bridge)
  n8n-mcp:
    build:
      context: ./n8n-custom-mcp
    restart: always
    ports:
      - "3000:3000"
    environment:
      - N8N_HOST=http://n8n:5678
      - N8N_API_KEY=${N8N_API_KEY}
    depends_on:
      n8n:
        condition: service_started
    command: >
      --stdio "node dist/index.js"
      --port 3000
      --outputTransport streamableHttp
      --streamableHttpPath /mcp
      --cors
```

Step 5: Build & Run
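Before building, it can save a confusing failed start to confirm that `.env` defines every variable the compose file interpolates: Compose substitutes an empty string for an unset `${VAR}` and only prints a warning. The sketch below parses a `.env`-style string and reports missing or empty keys. It is a deliberately simplified parser (no quoting, multi-line values, or `export` prefix), and the `REQUIRED` list is simply the set of `${...}` variables used in the compose file above.

```typescript
// Variables interpolated by the docker-compose.yml in Step 4.
const REQUIRED = [
  "POSTGRES_USER", "POSTGRES_PASSWORD", "POSTGRES_DB",
  "N8N_ENCRYPTION_KEY", "N8N_HOST", "WEBHOOK_URL", "N8N_API_KEY",
];

// Minimal KEY=VALUE parser: skips blank lines and # comments, splits on the first "=".
function parseEnv(text: string): Map<string, string> {
  const vars = new Map<string, string>();
  for (const raw of text.split(/\r?\n/)) {
    const line = raw.trim();
    if (line === "" || line.startsWith("#")) continue;
    const eq = line.indexOf("=");
    if (eq <= 0) continue; // not a KEY=VALUE line
    vars.set(line.slice(0, eq).trim(), line.slice(eq + 1).trim());
  }
  return vars;
}

// Returns required keys that are missing or still hold an empty value.
function missingKeys(text: string, required: string[] = REQUIRED): string[] {
  const vars = parseEnv(text);
  return required.filter((key) => !vars.get(key));
}

const sample = "POSTGRES_USER=n8n\nPOSTGRES_DB=n8n\nN8N_API_KEY=\n";
console.log(missingKeys(sample).join(", "));
// POSTGRES_PASSWORD, N8N_ENCRYPTION_KEY, N8N_HOST, WEBHOOK_URL, N8N_API_KEY
```

To check your real file, pass it the output of `readFileSync(".env", "utf8")` from `node:fs`.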

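```
docker compose up -d --build
```

Verify all containers are running:

```
docker compose ps
```

Expected output (trimmed; Compose actually prefixes each container name with the project name, e.g. `n8n-stack-postgres-1`):

```
NAME         STATUS
postgres     Up (healthy)
redis        Up (healthy)
n8n          Up
n8n-worker   Up
n8n-mcp      Up
```

Step 6: Connect to LobeHub / OpenClaw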

In your MCP Plugin configuration:

- Type: MCP (Streamable HTTP)

- URL: `http://<your-server-ip>:3000/mcp`

- Example: `http://192.168.1.100:3000/mcp`

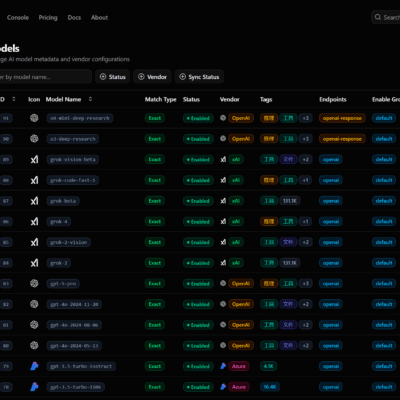

Once connected, you’ll see all 12 tools appear in your Agent’s tool panel. You’re ready to go.
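If the tools don't show up, it helps to know what the client is sending. Under the MCP Streamable HTTP transport, the client POSTs JSON-RPC 2.0 messages to the `/mcp` endpoint with an `Accept` header listing both `application/json` and `text/event-stream`; it starts with `initialize`, follows with a `notifications/initialized` notification, and then calls `tools/list`. The sketch below only builds those three requests as data and sends nothing. The client name/version and the `2025-03-26` protocol version string are assumptions on my part, and if the server returns an `Mcp-Session-Id` header on the initialize response, a real client echoes it on later requests (the optional `sessionId` argument).

```typescript
// Builds (but does not send) the HTTP requests an MCP client makes over the
// Streamable HTTP transport. Client name/version are hypothetical placeholders.
interface McpHttpRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

function mcpRequest(url: string, message: object, sessionId?: string): McpHttpRequest {
  const headers: Record<string, string> = {
    "Content-Type": "application/json",
    // The server may answer with a single JSON response or an SSE stream.
    Accept: "application/json, text/event-stream",
  };
  // Servers that use sessions return Mcp-Session-Id on initialize; echo it afterwards.
  if (sessionId) headers["Mcp-Session-Id"] = sessionId;
  return { url, method: "POST", headers, body: JSON.stringify({ jsonrpc: "2.0", ...message }) };
}

const endpoint = "http://192.168.1.100:3000/mcp";
const handshake: McpHttpRequest[] = [
  mcpRequest(endpoint, {
    id: 1,
    method: "initialize",
    params: {
      protocolVersion: "2025-03-26",
      capabilities: {},
      clientInfo: { name: "debug-client", version: "0.1.0" },
    },
  }),
  // A notification: no id, so the server sends no JSON-RPC response.
  mcpRequest(endpoint, { method: "notifications/initialized" }),
  mcpRequest(endpoint, { id: 2, method: "tools/list" }),
];

for (const req of handshake) console.log(JSON.parse(req.body).method);
// initialize
// notifications/initialized
// tools/list
```

If a plain POST like these fails from the LobeHub host, the problem is networking or the port mapping rather than the agent configuration.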

Upgrade Your AI Agent’s Brain with n8n-skills (Prompt Engineering)

At this point your agent has hands (MCP tools). Now let’s give it an expert brain.

The czlonkowski/n8n-skills repository provides 7 deep-knowledge modules about n8n. These are not software to install – they are structured knowledge documents you inject into your Agent’s System Prompt.

How to Set It Up

1. Clone the repository:

```
git clone https://github.com/czlonkowski/n8n-skills.git
```

2. Open the 3 most critical SKILL.md files:

```
n8n-skills/skills/
├── n8n-mcp-tools-expert/SKILL.md    ← Priority 1: Teaches AI to use MCP tools correctly
├── n8n-workflow-patterns/SKILL.md   ← Priority 2: 5 standard workflow patterns
└── n8n-expression-syntax/SKILL.md   ← Priority 3: n8n expression syntax reference
```

3. Copy the content and paste it into your Agent’s System Prompt on LobeHub or OpenClaw.
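If you would rather not copy and paste by hand (or want to rebuild the prompt after pulling updates to n8n-skills), the concatenation can be scripted. This is a hypothetical helper, not part of either repository: it puts a role preamble first, then the SKILL.md files in the priority order listed above, each under its own heading. The directory names come from the tree above; the `SkillFile` type, the `# Skill:` headings, and the `---` separators are my own choices.

```typescript
// Hypothetical helper (not part of n8n-skills): one SKILL.md file and its text.
interface SkillFile {
  path: string;    // e.g. "n8n-skills/skills/n8n-mcp-tools-expert/SKILL.md"
  content: string; // file text, e.g. from readFileSync(path, "utf8")
}

// Priority order from the list above.
const PRIORITY = ["n8n-mcp-tools-expert", "n8n-workflow-patterns", "n8n-expression-syntax"];

// ".../skills/<name>/SKILL.md" -> "<name>"; handles both / and \ separators.
function skillName(path: string): string {
  const parts = path.split(/[\\/]/);
  return parts[parts.length - 2] ?? path;
}

// Unknown skills sort after the three prioritized ones.
function rank(skill: SkillFile): number {
  const i = PRIORITY.indexOf(skillName(skill.path));
  return i === -1 ? PRIORITY.length : i;
}

// Role preamble first, then each skill under its own heading, separated by rules.
function buildSystemPrompt(role: string, skills: SkillFile[]): string {
  const ordered = [...skills].sort((a, b) => rank(a) - rank(b));
  const sections = ordered.map((s) => `# Skill: ${skillName(s.path)}\n\n${s.content.trim()}`);
  return [role.trim(), ...sections].join("\n\n---\n\n");
}

const prompt = buildSystemPrompt("# Role\nYou are an expert n8n workflow automation engineer.", [
  { path: "n8n-skills/skills/n8n-expression-syntax/SKILL.md", content: "(expression syntax notes)" },
  { path: "n8n-skills/skills/n8n-mcp-tools-expert/SKILL.md", content: "(MCP tools notes)" },
]);
console.log(prompt); // role first, then the tools-expert section, then expression syntax
```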

Recommended System Prompt Template

```markdown
# Role
You are an expert n8n workflow automation engineer. You have full access
to an n8n instance through an MCP Server with complete CRUD, Testing,
and Debugging capabilities.

# Critical Rules

## Webhook Data Access
- Webhook data is ALWAYS nested under `$json.body`
- Correct: {{ $json.body.email }}
- Wrong: {{ $json.email }}

## Workflow Patterns
1. Webhook Processing: Webhook → Process → Respond to Webhook
2. HTTP API: Trigger → HTTP Request → Transform → Output
3. Database: Trigger → Query → Transform → Store
4. AI Agent: Trigger → AI Agent (tools) → Output
5. Scheduled: Schedule → Fetch → Process → Report

## Code Node
- Required return format: [{json: {...}}]
- Use JavaScript for 95% of use cases
- Python in n8n does NOT have external libraries

## Standard Operating Procedure
1. Receive request → Select the appropriate pattern
2. create_workflow → build the workflow
3. activate_workflow → activate it
4. trigger_webhook (test_mode: true) → run a test
5. list_executions → check results
6. get_execution → if error, read detailed error info
7. update_workflow → fix the issue
8. Repeat steps 4–7 until everything works
```

Before vs. After: The Impact of n8n-skills

| Scenario | Without n8n-skills | With n8n-skills |
|---|---|---|
| Creating a webhook workflow | AI writes `$json.email` → `undefined` error | AI writes `$json.body.email` → works correctly |
| Code node return data | AI returns `{email: "..."}` → format error | AI returns `[{json: {email: "..."}}]` → correct |
| Choosing a workflow pattern | AI guesses randomly → missing nodes | AI selects 1 of 5 known patterns → complete |
| Encountering a validation error | AI gives up, asks you for help | AI reads the error → fixes it → re-runs automatically |
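The first two rows come down to data shapes. The Webhook node emits an item whose `json` includes the request split into `headers`, `params`, `query`, and `body`, so POSTed fields live under `body`; and a Code node is expected to return an array of items, each wrapping its data in a `json` key. The TypeScript below models both with simplified, hypothetical types (real items can also carry `binary` data and other metadata), and `toItems` is an illustrative helper, not an n8n API. The sender address is a placeholder.

```typescript
// Simplified n8n item: each item wraps its data in a `json` object.
interface N8nItem {
  json: Record<string, unknown>;
}

// Roughly what a Webhook node emits for a POST; the request body sits under `body`.
const webhookItem: N8nItem = {
  json: {
    headers: { "content-type": "application/json" },
    params: {},
    query: {},
    body: { subject: "Meeting Tomorrow", sender: "sender@example.com" },
  },
};

// {{ $json.subject }} vs. {{ $json.body.subject }}, expressed in TypeScript:
console.log(webhookItem.json["subject"]); // undefined
const body = webhookItem.json["body"] as Record<string, unknown>;
console.log(body["subject"]); // Meeting Tomorrow

// Wraps plain objects into the [{ json: {...} }] shape a Code node is expected to return.
function toItems(rows: Record<string, unknown>[]): N8nItem[] {
  return rows.map((row) => ({ json: row }));
}

console.log(JSON.stringify(toItems([{ email: "a@example.com" }]))); // [{"json":{"email":"a@example.com"}}]
```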

Real-World Demo: Building & Debugging an Outlook Webhook Workflow

Here’s a real scenario that ran successfully on my system – start to finish, with zero manual intervention from me.

Step 1: Give the AI a Task

You: “Create a webhook workflow to receive a callback from Microsoft Outlook. When it receives a POST request, extract the email subject and sender, and save them in a Set node.”

Step 2: The AI Builds the Workflow Automatically

The AI calls create_workflow with the following payload:

```json
{
  "name": "Outlook Webhook Handler",
  "nodes": [
    {
      "name": "Webhook",
      "type": "n8n-nodes-base.webhook",
      "parameters": { "path": "outlook-callback", "httpMethod": "POST" },
      "position": [250, 300]
    },
    {
      "name": "Extract Data",
      "type": "n8n-nodes-base.set",
      "parameters": {
        "values": {
          "string": [
            { "name": "subject", "value": "={{ $json.body.subject }}" },
            { "name": "sender", "value": "={{ $json.body.sender }}" }
          ]
        }
      },
      "position": [450, 300]
    }
  ],
  "connections": {
    "Webhook": { "main": [[{ "node": "Extract Data", "type": "main", "index": 0 }]] }
  }
}
```

Then the AI calls `activate_workflow` → the workflow is now live.
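A cheap safeguard before calling `create_workflow`, whether you build it into the MCP server or ask the agent to reason through it, is to check that the payload is internally consistent: node names are unique and every connection points at a node that exists, since n8n wires connections by node name. The sketch below does that for the JSON shape shown above; `WorkflowPayload` and `checkWiring` are hypothetical names, and the types cover only the fields this check needs.

```typescript
// Simplified view of the create_workflow payload: only the fields checked here.
interface WorkflowNode { name: string; type: string }
interface Connection { node: string; type: string; index: number }
interface WorkflowPayload {
  name: string;
  nodes: WorkflowNode[];
  // connections[sourceName][outputType][outputIndex] -> list of target connections
  connections: Record<string, Record<string, Connection[][]>>;
}

// Returns human-readable problems; an empty array means the wiring looks consistent.
function checkWiring(wf: WorkflowPayload): string[] {
  const problems: string[] = [];
  const names = new Set<string>();
  for (const n of wf.nodes) {
    if (names.has(n.name)) problems.push(`duplicate node name "${n.name}"`);
    names.add(n.name);
  }
  for (const [source, outputs] of Object.entries(wf.connections)) {
    if (!names.has(source)) problems.push(`connection from unknown node "${source}"`);
    for (const branches of Object.values(outputs)) {
      for (const targets of branches) {
        for (const t of targets) {
          if (!names.has(t.node)) problems.push(`"${source}" connects to unknown node "${t.node}"`);
        }
      }
    }
  }
  return problems;
}

const payload: WorkflowPayload = {
  name: "Outlook Webhook Handler",
  nodes: [
    { name: "Webhook", type: "n8n-nodes-base.webhook" },
    { name: "Extract Data", type: "n8n-nodes-base.set" },
  ],
  connections: {
    Webhook: { main: [[{ node: "Extract Data", type: "main", index: 0 }]] },
  },
};
console.log(checkWiring(payload)); // []
```

Catching a renamed or misspelled node here is cheaper than discovering it as a silently disconnected branch after activation.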

Step 3: The AI Tests It

The AI calls trigger_webhook:

```json
{
  "webhook_path": "outlook-callback",
  "method": "POST",
  "test_mode": true,
  "body": {
    "subject": "Meeting Tomorrow",
    "sender": "[email protected]"
  }
}
```

Step 4: The AI Verifies the Result

The AI calls list_executions → Finds the latest execution.

The AI calls get_execution → Reads the output:

```json
{
  "status": "success",
  "data": {
    "Extract Data": {
      "subject": "Meeting Tomorrow",
      "sender": "[email protected]"
    }
  }
}
```

AI response: “Workflow executed successfully! Subject and sender have been extracted correctly.”

What If There’s an Error? (Self-Healing Loop)

Suppose the AI makes a mistake and writes $json.subject instead of $json.body.subject:

- `get_execution` returns `subject: undefined`.

- The AI recognizes the error and calls `update_workflow` → fixes the expression to `$json.body.subject`.

- `trigger_webhook` (attempt 2) → success.

- The entire debug cycle is fully automated, with no human intervention required.

What You’ll Achieve After This Guide

- 12 MCP Tools – Full CRUD, Testing, and Debugging for n8n workflows

- Docker-Ready Deployment – One command: `docker compose up -d --build`

- A Truly Intelligent AI Agent – Creates, tests, debugs, and fixes workflows autonomously

- Production-Tested – Successfully running on a Raspberry Pi 4

Resources & Repositories

| Resource | Link |
|---|---|
| n8n-custom-mcp (featured in this post) | github.com/duynghien/n8n-custom-mcp |
| n8n-mcp (by czlonkowski) | github.com/czlonkowski/n8n-mcp |
| n8n-skills (knowledge modules) | github.com/czlonkowski/n8n-skills |

What’s Next? Future Roadmap

Here are some ideas on the horizon:

- `search_templates` – Search community workflow templates directly from the agent

- `get_credentials` – Manage n8n credentials via MCP

- SSE transport support – In addition to Streamable HTTP

- Specialized system prompts – Tailored for CRM, email marketing, DevOps, and other verticals